
- Publisher: John Wiley & Sons

- Category: Science and New Technologies

- Series: Sybex Study Guide

- Language: English

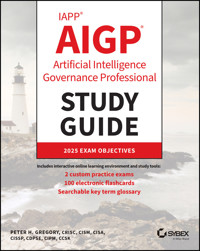

An accurate and up-to-date guide to success on the AIGP certification exam and an essential resource for technology and business professionals with an interest in artificial intelligence governance

In the IAPP AIGP Artificial Intelligence Governance Professional Study Guide, tech educator and author of more than 50 cybersecurity and technology books, Peter H. Gregory, delivers a from-scratch guide to preparing for the 2025 Artificial Intelligence Governance Professional (AIGP) certification exam. It’s an essential resource for technology professionals taking the test for the first time, as well as those seeking to maintain or expand their skillset.

This up-to-date Study Guide mirrors the content published by the International Association of Privacy Professionals (IAPP) in their AIGP Job Practice guidance. It covers every domain relevant to the certification exam, including AI governance foundations, the application of AI laws, standards, and frameworks, AI development governance, and the governance of AI deployment and use. The Study Guide is a comprehensive walkthrough of the skills that professionals need to establish and manage AI governance functions, understand AI models, and manage privacy and intellectual property concerns in AI-enabled environments.

Inside the book:

- Detailed exam information for test-takers, as well as guidance for maintaining your certification

- Concise summaries for each chapter, review questions to test your progress, and Exam Essentials that focus your attention on critical subjects

- Clearly organized content reflecting the structure of the AIGP certification exam, making the book an ideal desk reference for working AI governance professionals

- Includes 1-year free access to the Sybex online learning center, with chapter review questions, full-length practice exams, hundreds of electronic flashcards, and a glossary of key terms, all supported by Wiley's support agents who are available 24x7 via email or live chat to assist with access and login questions

Perfect for every technology professional interested in obtaining an in-demand certification in a rapidly growing field, the IAPP AIGP Artificial Intelligence Governance Professional Study Guide is also a comprehensive, on-the-job reference for IT, information security, and audit professionals with an interest in the management and governance of AI technologies.


Page count: 652

Publication year: 2026


Table of Contents

Cover

Table of Contents

Series Page

Title Page

Copyright

Dedication

Acknowledgments

About the Author

About the Technical Editor

Introduction

Assessment Test

Answers to Assessment Test

Part I: Foundations of AI and Governance

Chapter 1: AI and AI Governance

The Types of AI

Risks and Harms Posed by AI

Characteristics of AI Requiring Governance

Principles of Responsible AI

Summary

Exam Essentials

Review Questions

Chapter 2: Organizational Readiness

Roles and Responsibilities for AI Governance Stakeholders

Cross-functional Collaboration in the AI Governance Program

Training and Awareness Program on AI Terminology, Strategy, and Governance

Tailoring AI Governance to Organizational Context

Developers, Deployers, and Users in AI Governance

Summary

Exam Essentials

Review Questions

Chapter 3: Updating Policies for AI

Oversight in the Age of Autonomous Decision-making

Evaluate and Update Existing Data Privacy and Security Policies for AI

Policies to Manage Third-party Risk

Summary

Exam Essentials

Review Questions

Part II: Legal and Regulatory Obligations

Chapter 4: Privacy and Data Protection Law

Notice, Choice, Consent, and Purpose Limitation in AI

Data Minimization and Privacy by Design in AI

Practical Implications and Governance

Data Controller Obligations in the AI Context

Understanding the Requirements That Apply to Sensitive or Special Categories of Data

Summary

Exam Essentials

Review Questions

Chapter 5: Sectoral and Civil Laws

Intellectual Property Laws and AI

Non-discrimination Laws and AI

How Consumer Protection Laws Apply to AI

How Product Liability Laws Apply to AI

Summary

Exam Essentials

Review Questions

Chapter 6: The EU AI Act

Risk Classification

Classification Requirements

Requirements for General-purpose AI

Enforcement and Penalties

Organizational Context

EU AI Act Implementation

Summary

Exam Essentials

Review Questions

Chapter 7: AI Standards and Frameworks

OECD Principles

NIST AI Risk Management Framework (AI RMF)

NIST ARIA: Layered AI Model Evaluation

ISO Standards

Other AI-related Standards

Summary

Exam Essentials

Review Questions

Part III: Governing the AI Lifecycle

Chapter 8: AI System Design

Documenting the Lifecycle

Business Context and Use Cases

Impact Assessments

AI System Design Considerations

Identifying and Managing Design Risks

Proprietary Model Considerations

Summary

Exam Essentials

Review Questions

Chapter 9: Data Governance and Model Training

Data Governance

Data Lineage and Provenance

AI System Testing

Identifying and Managing Testing Risks

Summary

Exam Essentials

Review Questions

Chapter 10: Deployment and Monitoring

Pre-release Readiness

Deployment Considerations

Continuous Monitoring

Summary

Exam Essentials

Review Questions

Chapter 11: AI Risk Management and Assurance

AI Risk Management and Forecasting

AI System Audits and Testing

Incident Management and Disclosures

Summary

Exam Essentials

Review Questions

Chapter 12: Ongoing AI Operations

Managing Business Records

External Communications

AI System Retirement

Summary

Exam Essentials

Review Questions

Appendix: Answers to the Review Questions

Chapter 1: AI and AI Governance

Chapter 2: Organizational Readiness

Chapter 3: Updating Policies for AI

Chapter 4: Privacy and Data Protection Law

Chapter 5: Sectoral and Civil Laws

Chapter 6: The EU AI Act

Chapter 7: AI Standards and Frameworks

Chapter 8: AI System Design

Chapter 9: Data Governance and Model Training

Chapter 10: Deployment and Monitoring

Chapter 11: AI Risk Management and Assurance

Chapter 12: Ongoing AI Operations

Index

Advertisement 1

Advertisement 2

End User License Agreement

List of Illustrations

Introduction

Figure 1 Bloom’s Taxonomy.

Chapter 7

Figure 7.1 NIST AI RMF functions. Image from the U.S. National Institute of Standards and T...

Chapter 9

Figure 9.1 Data provenance and data lineage.

List of Tables

Introduction

Table 1 AIGP Domains, Competencies, and Performance Indicators Mapping to This Book

Chapter 2

Table 2.1 AI Responsibilities Across the AI Lifecycle

Table 2.2 Example RACI Matrix for Governance and Operational Roles

Table 2.3 Mapping Audiences to Training Needs and Topics

Table 2.4 Mapping Audiences to Training Needs and Topics

Table 2.5 Learning Styles and Their Strengths

Table 2.6 Avoiding Training Pitfalls

Table 2.7 AI Developer Responsibilities

Table 2.8 AI Deployer Responsibilities

Table 2.9 AI User Responsibilities

Chapter 4

Table 4.1 Privacy Activities in the AI System Development Lifecycle

Table 4.2 Selected Privacy Regulations Concerning Sensitive Data

Chapter 5

Table 5.1 Differences in IP Laws in Selected Jurisdictions

Chapter 6

Table 6.1 EU AI Act Risk Classifications

Table 6.2 Key Milestones in the EU AI Act

Chapter 7

Table 7.1 Differences Between NIST AI RMF and NIST CSF

Table 7.2 When to Use the AI RMF vs. the CSF

Table 7.3 Selected AI Governance Definitions in ISO/IEC 22989

Chapter 8

Table 8.1 Typical Risk Matrix

Chapter 10

Table 10.1 Comparison of AI System Deployment Options

Table 10.2 AI Model Adaptation Options

Table 10.3 Cross-functional Governance for AI System Deployment

Chapter 12

Table 12.1 Example AI Record Keeping RACI Matrix



Other Information Security and Governance Study Guides and References from Sybex and Wiley

CRISC Certified in Risk and Information Systems Control Study Guide

— ISBN 978-1-394-37366-6 (coming May 2026)

Information Security and Privacy Quick Reference: The Essential Handbook for Every CISO, CSO, and Chief Privacy Officer

— ISBN 978-1-394-35331-6, May 2025

IAPP CIPP / US Certified Information Privacy Professional Study Guide, 2nd Edition

— ISBN 978-1-394-28490-0, January 2025

IAPP CIPM Certified Information Privacy Manager Study Guide

— ISBN 978-1-394-15380-0, January 2023

ISC2 CISSP Certified Information Systems Security Professional Official Study Guide, 10th Edition

— ISBN 978-1-394-25469-9, June 2024

ISC2 CCSP Certified Cloud Security Professional Official Study Guide, 3rd Edition

— ISBN 978-1-119-90937-8, October 2022

CISA Certified Information Systems Auditor Study Guide Covering 2024–2029 Exam Objectives

— ISBN 978-1-394-28838-0, December 2024

CISM Certified Information Security Manager Study Guide

— ISBN 978-1-119-80193-1, May 2022

IAPP® AIGP® Artificial Intelligence Governance Professional Study Guide

2025 Exam Objectives

Peter H. Gregory, CRISC, CISM, CISA, CISSP, CDPSE, CIPM, CCSK

Copyright © 2026 by John Wiley & Sons, Inc. All rights reserved, including rights for text and data mining and training of artificial intelligence technologies or similar technologies.

Published by John Wiley & Sons, Inc., Hoboken, New Jersey.

No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning, or otherwise, except as permitted under Section 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, Inc., 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 750-4470, or on the web at www.copyright.com. Requests to the Publisher for permission should be addressed to the Permissions Department, John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030, (201) 748-6011, fax (201) 748-6008, or online at http://www.wiley.com/go/permission.

The manufacturer’s authorized representative according to the EU General Product Safety Regulation is Wiley-VCH GmbH, Boschstr. 12, 69469 Weinheim, Germany, e-mail: [email protected].

Trademarks: Wiley and the Wiley logo, and the Sybex logo, are trademarks or registered trademarks of John Wiley & Sons, Inc. and/or its affiliates in the United States and other countries and may not be used without written permission. All other trademarks are the property of their respective owners. John Wiley & Sons, Inc. is not associated with any product or vendor mentioned in this book.

Limit of Liability/Disclaimer of Warranty: While the publisher and the authors have used their best efforts in preparing this work, including a review of the content of the work, neither the publisher nor the authors make any representations or warranties with respect to the accuracy or completeness of the contents of this work and specifically disclaim all warranties, including without limitation any implied warranties of merchantability or fitness for a particular purpose. No warranty may be created or extended by sales representatives, written sales materials or promotional statements for this work. The fact that an organization, website, or product is referred to in this work as a citation and/or potential source of further information does not mean that the publisher and authors endorse the information or services the organization, website, or product may provide or recommendations it may make. This work is sold with the understanding that the publisher is not engaged in rendering professional services. The advice and strategies contained herein may not be suitable for your situation. You should consult with a specialist where appropriate. Further, readers should be aware that websites listed in this work may have changed or disappeared between when this work was written and when it is read. Neither the publisher nor authors shall be liable for any loss of profit or any other commercial damages, including but not limited to special, incidental, consequential, or other damages.

For general information on our other products and services or for technical support, please contact our Customer Care Department within the United States at (800) 762-2974, outside the United States at (317) 572-3993 or fax (317) 572-4002. For product technical support, you can find answers to frequently asked questions or reach us via live chat at https://sybexsupport.wiley.com.

Wiley also publishes its books in a variety of electronic formats. Some content that appears in print may not be available in electronic formats. For more information about Wiley products, visit our website at www.wiley.com.

Library of Congress Cataloging-in-Publication Data has been applied for:

Paperback ISBN: 9781394363940

ePDF ISBN: 9781394363964

ePub ISBN: 9781394363957

Obook ISBN: 9781394363971

Cover Design: Wiley

Cover Image: © Jeremy Woodhouse/Getty Images

To all business professionals who aspire to improve their organizations’ efficiency, integrity, and safety through the use of AI.

Acknowledgments

Books like this involve the work of many people, and as an author, I truly appreciate the hard work and dedication that the team at Wiley demonstrates. I want to extend special thanks to my acquisitions editor, Jim Minatel, who worked diligently to make this project possible.

I also greatly appreciate the editing and production team for the book, including Patrick Walsh, the project manager who kept the train on the tracks; Kezia Endsley, the copy editor who made sure all of the language and formatting were just right; Navin Vijayakumar, the managing editor, who managed the various phases of editing; and Maduramuthu Krishnaraj, the Content Refinement Specialist, who guided us through layouts, formatting, and final cleanup to produce a great book. Finally, special thanks to Ejona Preçi, the technical editor, who provided insightful advice and gave excellent improvement suggestions and feedback throughout the book. I would also like to thank the many behind-the-scenes contributors, including the graphic, production, and technical teams, who helped bring the book and companion materials to a finished product.

My long-time literary agent, Carole Jelen of Waterside Productions, continues to provide me with fantastic opportunities, advice, and assistance throughout my writing career.

I have difficulty expressing my gratitude to my wife and love of my life, Rebekah, for tolerating my frequent absences (in the home office) while I developed this manuscript. This project could not have been completed without her loyal and unfailing support and encouragement.

About the Author

Peter H. Gregory (CISSP, CISM, CISA, CRISC, CIPM, CDPSE, CCSK, A/CCRF, A/CCRP, A/CRMP) is the author of more than 60 books on security, privacy, and technology, including Solaris Security (Prentice Hall, 2000), The Art of Writing Technical Books (Waterside, 2022), CISA Certified Information Systems Auditor Study Guide (Wiley, 2025), Chromebook For Dummies (Wiley, 2023), and Elementary Information Security (Jones & Bartlett Learning, 2024).

Peter is a recently retired career technologist and cybersecurity executive. He has held security leadership positions at GCI Communications, Optiv Security, and Concur Technologies since the early 2000s, after a celebrated career in software, systems, and security engineering at Bally Gaming, World Vision, and AT&T Wireless. Peter serves as an advisory board member for the University of Washington and Seattle University, with a focus on cybersecurity education programs. He is a 2008 graduate of the FBI Citizens’ Academy.

Peter resides in Central Washington State and can be found at www.peterhgregory.com.

About the Technical Editor

Ejona Preçi (CISSP, CISM, CRISC, ITIL, ISO/IEC 42001 LI) is a renowned global CISO and a leading voice in cybersecurity and AI governance. She works at the forefront of advancing responsible technology, developing frameworks that guide the safe, ethical, and trustworthy use of AI systems. A multiple award winner, she has been honored as Cybersecurity Woman of the Year 2024 (Barrier Breaker), named among the Global 40 Under 40 in Cybersecurity, and recognized as one of the Top 20 Women in Cybersecurity 2025. As a thought leader, writer, and keynote speaker, she is dedicated to shaping global policy discourse and promoting trust, resilience, and accountability in the era of AI.

Introduction

Congratulations on choosing to become an AI Governance Professional (AIGP)! Whether you have worked in AI for several years or have only recently been introduced to AI governance, don’t underestimate the hard work and dedication required to obtain and maintain AIGP certification. Ambition and motivation are essential, and the rewards of being AIGP certified can far exceed the effort.

You probably never imagined you would find yourself working in AI or seeking a professional AI certification. Maybe the rapid spread of AI across every profession, combined with many organizations adding AI capabilities, has drawn you into this field. Or you noticed that AI-related career options are growing rapidly, and you decided to get ahead of the curve. Or perhaps you have governance responsibilities in your organization, and you need to get a handle on the adoption of AI to ensure it’s done safely and responsibly. You aren’t alone! Welcome to the journey and the fantastic opportunities that await you.

I have compiled this information to help you understand the commitment needed, prepare for the exam, and maintain your certification. I want not only to help you prepare for and pass the exam with flying colors, but also to give you the information and resources you need to maintain your certification and proudly represent yourself and the AI profession with your new credentials.

The International Association of Privacy Professionals (IAPP) is a recognized leader in the field of privacy. Founded in 2000, this nonprofit organization has over 50,000 members and offers several certifications, including:

Artificial Intelligence Governance Professional (AIGP)

Certified Information Privacy Professional (CIPP)

Certified Information Privacy Manager (CIPM)

Certified Information Privacy Technologist (CIPT)

Privacy Law Specialist (PLS)

Fellow of Information Privacy (FIP)

Some of IAPP’s certifications have been accredited under ISO/IEC 17024:2012, which means that IAPP’s certification procedures meet international requirements for quality, continuous improvement, and accountability. For the current accreditation status of AIGP, consult the IAPP certification handbook or website.

If you’re new to IAPP, I recommend visiting the organization’s website (www.iapp.org) and familiarizing yourself with the available guides and resources. In addition, if you’re near one of the 150+ local IAPP chapters in dozens of countries worldwide, consider contacting a chapter for information on local and regional meetings, training days, conferences, or study sessions. You will meet other AI and privacy professionals who can give you additional insight into the AIGP certification and the privacy profession.

The AIGP certification primarily focuses on the governance of AI models and systems, with an emphasis on privacy. It certifies an individual’s knowledge of establishing and managing an AI governance function, of AI models, and of privacy. Organizations with mature governance structures will recognize the need to bring AI capabilities into their governance sphere to ensure safe, ethical, and legal outcomes. An AIGP-certified individual is a strong candidate for the roles this work requires.

If you’re preparing to take the AIGP exam, you’ll undoubtedly want to find as much information as possible about AI and governance. The more information you have, the better off you’ll be when attempting the exam. This study guide was written with that in mind. The goal was to provide enough information to prepare you for the test, but not so much that you’ll be overloaded with information outside the scope of the exam.

Bloom’s Taxonomy is a model for categorizing educational goals, as shown in Figure 1. IAPP exam questions focus primarily on the Remember, Understand, Apply, and Analyze levels.

FIGURE 1 Bloom’s Taxonomy.

Image courtesy Tidema via Wikimedia Commons CC license

This book presents the material at an intermediate technical level. Experience with and knowledge of AI, governance, privacy, and lifecycle processes will help you fully understand the challenges you’ll face as an AI governance professional.

I’ve included review questions at the end of each chapter to give you a taste of what taking the exam is like. I recommend you check out these questions first to gauge your level of expertise. You can then use the book mainly to fill in the gaps in your current knowledge. This study guide will help you expand your knowledge base before taking the exam.

If you can answer 80% or more of the review questions correctly for a given chapter, you can feel safe moving on to the next chapter. If you’re unable to answer that many correctly, reread the chapter and try the questions again. Your score should improve.

Don’t just study the questions and answers! The questions on the actual exam may differ from those included in this book as practice questions. The exam is designed to test your knowledge of a concept or objective, so use this book to learn the objectives behind the questions.

Certification Requirements

To earn your AIGP certification, you are required to register for the AIGP exam, pay the exam fee, and agree to various IAPP terms and conditions, including the IAPP code of professional conduct and a confidentiality agreement, which appear in the IAPP certification handbook.

The exam costs $649 for IAPP members and $799 for non-members (subject to change; always confirm in the IAPP certification handbook). More details about the AIGP exam and how to take it can be found at iapp.org/certify/aigp/.

IAPP does not require AIGP candidates to document and prove relevant work experience. The AIGP certification is strictly knowledge-based. That said, if you do not have any IT, cybersecurity, or privacy experience, you’ll need to spend considerably more time studying for the exam, as you’ll need to learn the fundamentals of governance and the fundamentals of AI models and systems.

The AIGP Exam

The AIGP exam is a vendor-neutral certification for business and IT professionals responsible for (or supporting) AI governance. While no prior experience is explicitly required for this certification, a background in IT, privacy, or cybersecurity governance will be particularly helpful, as the concepts of governance in those domains closely align with AI governance.

The exam covers four major domains:

The foundations of AI governance

How laws, standards, and frameworks apply to AI

How to govern AI development

How to govern AI deployment and use

These four domains cover the types of AI models, measuring and managing AI systems in production, privacy, and the systems development lifecycle. The exam places a strong emphasis on AI model scenarios. You’ll need to learn a lot of information, but you’ll be well rewarded for possessing this credential. AI is taking the world by storm, and forward-looking organizations will value leaders who understand how to build a governance function that ensures the best possible outcomes from new AI systems.

The AIGP exam consists solely of standard multiple-choice questions. Each question has four possible answer choices, and only one of those answers is correct. When taking the test, you’ll likely find some questions where you think multiple answers might be correct. In those cases, remember that you’re looking for the best possible answer to the question!

You’ll have three hours to answer 100 questions. Your exam will be scored on a scale of 100–500, with a passing score of 300.

In-person Exams

IAPP partners with Pearson VUE testing centers, so your next step is to find a testing center near you. In the United States, you can do this based on your address or your postal code, while non-U.S. test takers may find it easier to enter their city and country. You can search for a test center near you at the Pearson VUE Exams website: www.pearsonvue.com/us/en/iapp.html

Once you know where you’d like to take the exam, set up a Pearson VUE testing account and schedule an exam on their site.

On the day of the test, bring a government-issued identification card or passport that contains your full name (exactly matching the name on your exam registration), your signature, and your photograph. Make sure to show up with plenty of time before the exam starts. Please note that you are not allowed to bring your notes, electronic devices (including earbuds, smartphones, and watches), or any other materials. Test centers I have visited have lockers for storing your personal effects, if you don’t want to keep them in your vehicle.

Remote Exams

IAPP also offers online exam proctoring via OnVUE. Candidates using this approach will take the exam at home or in the office and be proctored remotely via webcam.

Due to the rapidly changing nature of the at-home testing experience, candidates wishing to pursue this option should check the OnVUE website for the latest details at: https://home.pearsonvue.com/op/OnVUE-technical-requirements

Exam candidates considering taking an at-home exam with a corporate-managed computer must carefully read these details, as many organizations place strict restrictions on permitted actions.

Develop a Study Plan

Whether this is your first professional certification or you have earned others, follow these steps to study and prepare for your AIGP exam.

Read the IAPP certification handbook:

This IAPP publication includes all the official steps required to earn IAPP certifications, including the AIGP.

Review or read this book in its entirety:

Depending on your background in governance or AI, read as much as you need to get an idea of the amount of study you’ll need.

Self-assess:

Read the study questions in each chapter and take the practice exams online to confirm your knowledge level and identify areas of study.

Know your best learning methods:

Each person has a preferred learning style that works better for comprehension and retention and aligns with their overall lifestyle.

Consider my online video course:

Find my AIGP video training course at www.oreilly.com. This online course is a companion to this study guide.

Study iteratively:

Depending on your work experience in governance and AI, plan your study program to last at least two months, but no longer than six months. During this time, periodically take practice exams and note your strengths and weaknesses. Once you have identified your weak areas, focus on those areas weekly by rereading the related sections in this book, retaking practice exams, and noting your progress.

Avoid cramming:

You may find online or printed resources that promote last-minute cramming. Research suggests that cramming can increase susceptibility to illness and contribute to sleep disturbances, overeating, and digestive issues. Many people find that good, steady study habits result in less stress and greater clarity and focus during exams.

Find or form a study group:

Many IAPP chapters and other organizations have formed specific study groups or offer less expensive exam review courses. Contact your local chapter to see whether these options are available to you. In addition, be sure to keep an eye on the IAPP website. Use your local network to find out whether there are other local study groups and helpful resources.

Schedule your exam:

After you decide whether to take your exam at a Pearson VUE test center or online at home or work, log on to iapp.org to purchase and schedule your exam.

Check the IAPP candidate guide:

The IAPP candidate guide is your source for the most up-to-date and official information about taking your exam, including test center rules, and what you must bring (including a government-issued photo ID that matches your exam registration) to the test center.

Logistics check:

A week or more before your exam, confirm the time and place of your exam, and confirm transportation, parking, and other details.

Sleep:

Be sure to get plenty of sleep in the days leading up to your exam. I recommend you relax the day before your exam to give your mind a rest from studying.

Day of the Exam

Follow these tips on the day of the exam.

Arrive early:

Plan to arrive early at the test center, just in case traffic or other unforeseen circumstances add to your travel time.

Observe test center rules:

Be sure you understand and comply with all test center rules. Ask your exam proctor any questions you have beforehand so that you can put your mind at ease during the exam.

Answer all exam questions:

Read each question carefully, but don’t try to overanalyze. Select the best answer based on the AIGP job practice and the IAPP glossary. There may be more than one reasonable answer, but you are required to select the best answer. You should be able to flag any question you aren’t sure about so you can return to it later. Make sure your pace is fast enough to comfortably get through the entire exam with time to review the questions you flagged, but don’t rush through it, or you’ll be likely to misread some questions and answer them incorrectly.

If You Did Not Pass

Don’t lose heart if you do not pass the exam. Instead, consider it a stepping stone to your eventual success! As soon as you leave the testing center, note the types of questions you struggled with. Then, return to this book and my video study course and revisit those chapters and videos to reinforce your knowledge. Take at least a few weeks to study those topics and refresh your knowledge of related subjects. Success is granted to those who persist and are determined.

After the AIGP Exam

After you complete the exam, you will be notified of your score immediately, so you’ll know right away whether you passed. Keep your score report with your exam registration records, and note the email address you used to register for the exam.

Maintaining Your Certification

AI is a rapidly evolving field, with new capabilities and models emerging regularly. All AIGP holders are required to complete continuing professional education (CPE) to maintain current knowledge and sharpen their skills. The guidelines around CPE are somewhat complicated, but they boil down to two requirements:

You must earn a minimum of 20 CPE credits during each two-year certification cycle.

You must submit evidence of your continuing education on the CPE portal of the IAPP website.

There are many acceptable ways to earn CPE credits, many of which do not require travel or attending a training seminar. The critical requirement is that you generally do not earn CPEs for work you perform as part of your regular job. CPEs are intended to cover professional development opportunities outside of your day-to-day work. You can earn CPEs in several ways, including:

Attending conferences

Attending training programs

Attending professional association meetings and activities

Taking self-study courses

Attending vendor marketing presentations

Teaching, lecturing, or presenting

Publishing articles, monographs, or books

Participating in the exam development process

Volunteering with IAPP

Mentoring

For more information on the activities that qualify for CPE credits, visit this site to download the latest IAPP CPE policy: https://iapp.org/certify/cpe-policy

Study Guide Elements

This study guide uses some common elements to help you prepare. These include the following:

Summaries The Summary section of each chapter provides a brief overview of the chapter, enabling you to easily understand its contents.

Exam Essentials The Exam Essentials highlight major exam topics and critical knowledge that you should take into the test, based on the exam objectives published by IAPP.

Chapter Review Questions A set of questions at the end of each chapter will help you assess your knowledge and whether you are ready to take the exam based on your understanding of that chapter’s topics.

Additional Study Tools

This book includes additional study tools to help you prepare for the exam. They include the following.

Visit www.wiley.com/go/Sybextestprep to register and gain access to the Sybex interactive online learning environment and test bank, which feature the study tools and resources described in this section.

Sybex Test Preparation Software

Sybex’s test preparation software lets you prepare with electronic test versions of the review questions from each chapter, the practice exam, and the bonus exam included in this book. You can build and take tests on specific domains, by chapter, or cover the entire set of AIGP exam objectives using randomized tests.

Electronic Flashcards

Our electronic flashcards are designed to help you prepare for the exam. Over 100 flashcards will ensure that you are familiar with critical terms and concepts.

Glossary of Terms

Sybex provides a glossary of terms in PDF format, allowing quick searches and easy reference to materials in this book.

Bonus Practice Exams

In addition to the practice questions for each chapter, this book includes two 100-question practice exams. We recommend using them both to test your preparedness for the certification exam.

AIGP Exam Objectives

IAPP publishes relative weightings for each exam objective. The following table lists the four AIGP domains and the extent to which each is represented on the exam.

Domain (approximate % of exam*)

I. Understanding the foundations of AI governance: ~23%

II. Understanding how laws, standards, and frameworks apply to AI: ~20%

III. Understanding how to govern AI development: ~29%

IV. Understanding how to govern AI deployment and use: ~28%

*Figures are approximate. They are based on the AIGP Body of Knowledge and Exam Blueprint, which give ranges for the number of exam questions drawn from each domain and competency.

AIGP Certification Exam Objective Map

The AIGP body of knowledge is organized in a way that duplicates some topics across multiple domains. For example, Domain IV (“Understanding how to govern AI deployment and use”) contains the performance indicator “Identify laws that apply to the AI model.” Because Domain II (“Understanding how laws, standards, and frameworks apply to AI”) covers laws, we find it puzzling that IAPP put that performance indicator in Domain IV. There are other such examples. Thus, this book departs somewhat from the AIGP domain structure in favor of a more logical sequence.

Table 1 illustrates how the domains, competencies, and performance indicators are addressed throughout this book.

TABLE 1 AIGP Domains, Competencies, and Performance Indicators Mapping to This Book

AIGP Domains, Competencies, and Performance Indicators: Chapter(s)

Domain I: Understanding the foundations of AI governance

I.A Understand what AI is and why it needs governance (all performance indicators): 1

I.B Establish and communicate organizational expectations for AI governance (all performance indicators): 2

I.C Establish policies and procedures to apply throughout the AI lifecycle (all performance indicators): 3

Domain II: Understanding how laws, standards, and frameworks apply to AI

II.A Understand how existing data privacy laws apply to AI (all performance indicators): 4

II.B Understand how other types of existing laws apply to AI (all performance indicators): 5

II.C Understand the main elements of the EU AI Act (all performance indicators): 6

II.D Understand the main industry standards and tools that apply to AI (all performance indicators): 7

Domain III: Understanding how to govern AI development

III.A Govern the designing and building of the AI model (all performance indicators): 8

III.B Govern the collection and use of data in training and testing the AI model (all performance indicators): 9

III.C Govern the release, monitoring, and maintenance of the AI model: 10, 11

Assess readiness and prepare for release into production: 10

Conduct continuous monitoring of the AI model and establish a regular schedule for maintenance, updates, and retraining: 10

Conduct periodic activities to assess the AI model’s performance, reliability, and safety: 11

Manage and document incidents, issues, and risks: 11

Collaborate with cross-functional stakeholders to understand why incidents arise from AI models: 11

Make public disclosures to meet transparency obligations: 11

Domain IV: Understanding how to govern AI deployment and use

IV.A Evaluate key factors and risks relevant to the decision to deploy the AI model: 1, 4, 8, 10

Understand the context of the AI use case: 8

Understand the differences in AI model types: 1

Understand the differences in AI deployment options: 10

Understand the requirements that apply to sensitive or special categories of data: 4

IV.B Perform key activities to assess the AI model: 5, 8, 11

Perform or review an impact assessment on the selected AI model: 8

Identify laws that apply to the AI model: 5

Identify and evaluate key terms and risks in the vendor or open-source agreement: 11

Identify and understand issues that are unique to a company deploying its own proprietary AI model: 8

IV.C Govern the deployment and use of the AI model: 10, 11, 12

Apply the policies, procedures, best practices, and ethical considerations to the deployment of an AI model: 10

Conduct continuous monitoring of the AI model and establish a regular schedule for maintenance, updates, and retraining: 10

Conduct periodic activities to assess the AI model’s performance, reliability, and safety: 11

Document incidents, issues, risks, and post-market monitoring plans: 12

Forecast and reduce risks of secondary or unintended uses and downstream harms: 11

Establish external communication plans: 12

Create and implement a policy and controls to deactivate or localize an AI model as necessary: 12

Assessment Test

1. An AI-driven medical diagnostic system misclassifies a patient’s condition due to biased training data. Which principle of responsible AI is most directly violated?

A. Transparency

B. Fairness

C. Security

D. Human-centricity

2. An autonomous delivery robot is programmed to make decisions without human intervention. What governance safeguard should be in place to manage this AI system?

A. Implementation of a human-in-the-loop mechanism

B. Strict limitation of opacity

C. Use of supervised learning only

D. Elimination of data dependency

3. A company uses a generative AI model trained on copyrighted online content to design marketing materials. What AI characteristic primarily drives the need for governance in this case?

A. Probabilistic outputs

B. Complexity

C. Speed and scale

D. Data dependency

4. An organization’s AI governance board notices recurring gaps between technical and legal interpretations of “acceptable model risk.” Which structural action would best strengthen cross-functional collaboration?

A. Assign each department its own separate governance policy.

B. Require periodic joint review meetings and shared documentation templates.

C. Eliminate legal participation in technical discussions to reduce friction.

D. Move all governance decisions to the IT department.

5. A mid-sized financial services company wants to expand its AI program. Which governance maturity action should it prioritize next?

A. Create a lightweight checklist with no documentation requirements.

B. Focus exclusively on model accuracy metrics.

C. Formalize lifecycle checkpoints such as fairness reviews and deployment approval gates.

D. Replace the governance council with ad hoc task forces.

6. In a global healthcare enterprise, the AI governance team includes executives, clinicians, and data scientists. Which factor most justifies maintaining this diverse membership?

A. Diversity increases model training speed.

B. Cross-functional expertise ensures balanced evaluation of technical, ethical, and clinical risks.

C. It satisfies minimum staffing requirements for compliance audits.

D. It reduces total program cost through redundancy elimination.

7. An organization integrates AI governance into its existing IT governance framework rather than managing them separately. What is the primary benefit of this combined approach?

A. It avoids duplication and embeds AI oversight into established processes.

B. It eliminates the need for privacy and security policies.

C. It prevents non-technical staff from influencing AI decisions.

D. It removes the requirement for lifecycle documentation.

8. During a vendor assessment, a company discovers that a third-party AI model uses publicly scraped data with uncertain consent. Which governance control would best address this risk?

A. Requiring the vendor to provide documentation of data sources and licensing

B. Allowing deployment since the data is publicly available

C. Ignoring data provenance if the model performs accurately

D. Adding a confidentiality clause unrelated to data sourcing

9. An AI chatbot trained on historical customer interactions begins generating responses that reveal fragments of personal data. Which policy area most urgently needs revision?

A. Access control and password management

B. Network performance optimization

C. Facilities security and visitor access procedures

D. Traditional data retention and anonymization standards

10. An AI system trained on social media text begins predicting users’ religious beliefs, even though this attribute was never directly collected. What obligation does this create under privacy law?

A. None, since the system never collected explicit religion data.

B. The inference counts as processing of special category data and triggers GDPR Article 9 safeguards.

C. Only a transparency notice update is required.

D. It qualifies as anonymous data because it was inferred.

11. A multinational bank subject to GDPR relies on a cloud-based AI model hosted outside the European Economic Area. What must the controller do to comply with GDPR transfer obligations?

A. Encrypt model outputs but skip documentation.

B. Obtain verbal consent from customers for each transfer.

C. Conduct a Transfer Impact Assessment (TIA) and ensure valid safeguards such as SCCs or BCRs.

D. Rely on processor confidentiality clauses alone.

12. A company developing an emotion-recognition tool processes voice recordings containing biometric and health cues. Which governance control is most essential before deployment?

A. Marketing approval from the product team

B. Execution of a Data Protection Impact Assessment (DPIA) addressing risks to data subjects

C. Annual employee privacy training

D. Generic system penetration testing

13. A generative-AI platform trains on millions of online articles under the assumption that scraping constitutes “fair use.” Which factor would most weaken its fair-use defense?

A. The model’s outputs are non-commercial.

B. The model’s training is transformative rather than duplicative.

C. The system reproduces recognizable portions of protected works.

D. The data come from publicly accessible sources.

14. An AI-based credit-scoring tool uses a consumer’s postal code as a model feature. Under anti-discrimination law, what is the primary concern?

A. Postal code can act as a proxy for protected classes, producing disparate impact.

B. The feature may reduce model accuracy.

C. The feature violates open-banking data-sharing rules.

D. The feature requires explicit customer consent under the FCRA.

15. A home-robot manufacturer releases a firmware update that introduces erratic movement, causing property damage. Which legal theory most likely applies?

A. Manufacturing defect

B. Design defect

C. Failure to warn

D. Breach of warranty

16. An AI system used to rank citizens based on their social behavior would violate which element of the EU AI Act?

A. Limited-risk AI disclosure obligations

B. High-risk AI documentation requirements

C. Prohibited AI classification

D. Systemic-risk designation for GPAI

17. Before a high-risk AI system can be marketed within the EU, what must its provider complete?

A. Certification from the European AI Office only

B. A conformity assessment and CE marking process

C. An EU-level procurement review

D. Submission of its algorithm source code to regulators

18. A company outside the EU develops a general-purpose AI model that is integrated into multiple EU applications. Which step ensures compliance with the EU AI Act’s legal requirements?

A. Applying for an EU data adequacy determination

B. Requesting exemption from systemic-risk classification

C. Registering the model only in the developer’s home country

D. Appointing an EU-based legal representative and maintaining conformity documentation

19. Which statement best describes the relationship between the NIST AI RMF and NIST ARIA?

A. ARIA replaces the AI RMF as a legally required framework for federal agencies.

B. The AI RMF provides strategic guidance, while ARIA offers tools to operationalize it.

C. The AI RMF applies only to cybersecurity, while ARIA applies to AI ethics.

D. ARIA focuses on model training, while the AI RMF addresses licensing.

20. According to ISO/IEC 42001, what is the purpose of integrating AI governance with existing management systems, such as ISO/IEC 27001 or ISO 9001?

A. To streamline certification processes and promote unified governance

B. To eliminate redundant controls by removing human oversight

C. To replace cybersecurity management with AI management

D. To prioritize algorithmic speed over quality management

21. How does ISO/IEC 22989 help organizations engaged in AI governance?

A. It defines a legal enforcement framework for AI-related misconduct.

B. It establishes certification rules for high-risk AI applications.

C. It provides a standard lexicon to ensure consistency in terminology and communication.

D. It mandates disclosure of proprietary algorithms to regulators.

22. During the design phase, an organization selects a highly complex deep learning model even though a simpler one would meet performance goals. Which governance issue does this decision most directly raise?

A. Transparency and explainability risk

B. Data sovereignty compliance

C. Vendor lock-in

D. Infrastructure scalability

23. A financial institution completes its AI impact assessment but fails to implement the mitigation steps it identified. What governance failure does this represent?

A. Poor system documentation

B. Lack of operationalization and review of assessment results

C. Overclassification of model risk

D. Duplication of regulatory filings

24. In designing a proprietary AI system, which practice most effectively reduces downstream legal and operational risk?

A. Allowing data scientists to change model parameters without recordkeeping

B. Using internal data without confirming its lawful basis for processing

C. Outsourcing data collection to anonymous contractors

D. Embedding documentation, testing, and explainability into the design process

25. A company trains an AI model using data purchased from a third-party broker. Later, it discovers that the broker lacked the legal authority to sell the data. Which governance control should have prevented this issue?

A. Bias and fairness testing

B. Verification of lawful data rights and provenance documentation

C. Integration testing before deployment

D. Regularization during model training

26. An AI system trained on clean, representative data begins producing biased results several months after deployment. What governance issue is the most likely cause?

A. Lack of data drift monitoring and retraining protocols

B. Overfitting during initial training

C. Failure to anonymize personal data

D. Use of synthetic data augmentation

27. During model validation, developers notice that results differ each time the model is retrained, even with identical data. Which testing or governance control addresses this risk?

A. Ensuring model determinism through seed control and documented configurations

B. Expanding the dataset with more examples

C. Applying dropout regularization

D. Replacing validation testing with performance testing

28. An AI customer support chatbot begins generating inaccurate responses after six months in production. Which governance control should have detected this issue earlier?

A. Regular penetration testing

B. Human-in-the-loop decision approval

C. Scheduled retraining and performance monitoring

D. Fine-tuning with anonymized data

29. Before deploying a high-risk AI model, a governance team confirms that documentation, risk mitigation plans, and monitoring plans are complete. What governance mechanism is being applied?

A. Post-market surveillance

B. Final gate review and stakeholder signoff

C. Shadow modeling for drift detection

D. Automated rollback testing

30. A healthcare provider deploys an AI diagnostic tool that runs on hospital devices without requiring cloud access. Which deployment model does this represent, and why is it appropriate?

A. Cloud deployment: ensures centralized control and scalability

B. Edge deployment: provides low latency and preserves patient privacy

C. On-premises deployment: simplifies external vendor management

D. Hybrid deployment: balances accuracy and interpretability

31. An organization integrates an open-source AI model licensed under AGPL into its commercial product. What is the most significant risk if the licensing terms are not appropriately reviewed?

A. The organization may be required to disclose proprietary source code.

B. The model could fail to meet expected performance benchmarks.

C. Users could access the model through unauthorized APIs.

D. The license automatically converts to the public domain after release.

32. During an AI system audit, a red team successfully manipulates a chatbot into revealing fragments of its training data. Which risk domain does this finding most directly relate to?

A. Operational risk

B. Ethical and social risk

C. Deployment risk

D. Data-related risk

33. A financial institution’s fraud detection model begins incorrectly flagging legitimate transactions following a data feed update. What assurance practice would most likely have prevented this?

A. Periodic retraining using customer feedback

B. Continuous monitoring with drift detection alerts

C. Limiting model access to authorized employees only

D. Increasing the volume of test data

34. An AI-driven hiring platform is being retired due to new legal requirements under the EU AI Act. What is the most critical first step in the deactivation process?

A. Delete all model artifacts immediately to prevent misuse.

B. Replace the model with a temporary open-source version.

C. Notify affected stakeholders and plan for controlled deactivation.

D. Suspend all employee access without communication.

35. A global company operates an AI translation system that performs differently across languages due to cultural differences. To maintain compliance and effectiveness, which governance strategy is most appropriate?

A. Implement a single universal model trained on all languages equally.

B. Disable translations in low-accuracy regions.

C. Localize and retrain regional models to meet linguistic and cultural requirements.

D. Outsource compliance entirely to regional vendors.

36. An organization’s AI monitoring system detects a recurring issue that doesn’t meet the threshold for an incident. What is the most appropriate governance response?

A. Ignore the issue until an incident occurs.

B. Record it in the issue tracking system for trend analysis and escalation if repeated.

C. Immediately shut down the affected AI model.

D. Report it publicly as a compliance event.

Answers to Assessment Test

1. B. Fairness requires that AI systems produce equitable outcomes and avoid discrimination caused by biased data. When an AI diagnostic tool misclassifies patients because of bias in its training dataset, it fails that requirement. Transparency helps explain the AI system’s decisions, but fairness is the principle that ensures equitable treatment for all individuals.

2. A. Autonomous systems introduce governance challenges because they act independently. A human-in-the-loop mechanism ensures oversight and allows intervention when autonomous behavior produces unsafe or unintended outcomes. This control supports accountability and reduces operational and ethical risks.

3. D. AI systems rely heavily on large volumes of training data, which may include copyrighted or proprietary material. This dependence introduces legal and ethical risks, making data dependency a key governance concern. Effective AI governance frameworks require processes to verify data provenance, manage intellectual property exposure, maintain data lineage, and ensure lawful use of all training inputs.

4. B. Cross-functional collaboration depends on shared processes and transparent communication. Regular joint review meetings supported by shared documentation templates align technical and legal interpretations of risk. Separate, siloed policies create inconsistencies, and removing stakeholders reduces oversight rather than improving it.

5. C. Organizations transitioning from informal to managed maturity should embed governance checkpoints throughout the AI lifecycle. Structured reviews, including bias testing and documentation gates, institutionalize accountability and readiness. Accuracy alone is insufficient, and ad hoc governance erodes traceability.

6. B. AI governance in regulated sectors requires multiple perspectives to detect complex risks. Including technical, operational, and ethical experts ensures decisions account for safety, fairness, and compliance obligations. Diversity of expertise is a risk-control mechanism.

7. A. Integrating AI governance into existing IT governance creates operational efficiency by leveraging existing review, approval, and documentation processes. These processes ensure AI-specific controls, such as fairness testing and model monitoring, fit within established workflows, reducing duplication and improving consistency and scalability across systems and processes.

8. A. Vendor transparency regarding training data sources and usage rights is an essential AI governance control. Policies and contracts should require vendors to disclose provenance and licensing to ensure compliance with data protection and intellectual property laws. The public availability of data does not exempt organizations from the obligation to verify lawful use.

9. D. Leakage of memorized personal data from AI outputs highlights weaknesses in traditional data retention and anonymization policies. AI governance must expand these controls to include differential privacy, data minimization, and lifecycle-based retention, recognizing that models can unintentionally preserve sensitive information long after data ingestion.

10. B. Under GDPR Article 9, processing special-category (sensitive) data, including religious or political beliefs, is prohibited unless a lawful exception applies. Inferred attributes are still personal data, and inferences that reveal a special category are treated as special-category data. Controllers must identify and document a legal basis, conduct a DPIA, and implement technical and organizational safeguards to prevent discrimination or misuse.

11. C. Cross-border AI processing constitutes an international data transfer under GDPR. Controllers must assess the destination country’s privacy laws, apply an approved safeguard (typically Standard Contractual Clauses [SCCs] or Binding Corporate Rules [BCRs]), and document their findings in a TIA. These safeguards ensure lawful transfer and accountability for data processed outside the European Economic Area (EEA).

12. B. Processing biometric or health data represents a high-risk activity under GDPR Article 35 and mandates a DPIA. The assessment documents processing purpose, data sensitivity, potential harms, and mitigation measures such as encryption, consent management, and bias testing. Without a DPIA, controllers cannot demonstrate compliance or justify the lawful basis for handling sensitive data.

13. C. Fair use depends partly on whether the new use is transformative and whether it affects the market for the original work. If an AI system reproduces identifiable or substantial parts of copyrighted material, the use is not transformative, which undermines the fair-use claim even if the data were publicly available.

14. A. Postal codes often correlate with protected traits such as race and national origin, as well as with income. Even without intent to discriminate, their inclusion can result in disparate impacts prohibited by laws such as the Equal Credit Opportunity Act (ECOA) and the Fair Housing Act. Organizations must test and justify any variable that could serve as a proxy for protected traits.
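One way to begin the proxy testing described above is to compare outcomes across groups. The following Python sketch is a minimal illustration, not an IAPP-prescribed method. It assumes you have a test dataset of credit decisions labeled with a protected-group attribute (the group names and counts are hypothetical), and it borrows the four-fifths rule, a screening heuristic from the US EEOC Uniform Guidelines on Employee Selection Procedures, which is not a legal threshold under ECOA. The function names `approval_rates` and `adverse_impact_ratio` are illustrative only.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Compute the approval rate for each group from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {group: approvals[group] / totals[group] for group in totals}


def adverse_impact_ratio(decisions):
    """Ratio of the lowest group approval rate to the highest group approval rate."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was approved, so there is no relative disparity to measure
    return min(rates.values()) / highest


# Hypothetical outcomes from a credit model that uses postal code as a feature.
# Group labels are assumed to be available for fairness testing only, not as model inputs.
decisions = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 36 + [("group_b", False)] * 64
)

FOUR_FIFTHS_THRESHOLD = 0.8  # screening heuristic borrowed from US employment guidance

ratio = adverse_impact_ratio(decisions)
print(f"Adverse impact ratio: {ratio:.2f}")
if ratio < FOUR_FIFTHS_THRESHOLD:
    print("Possible disparate impact: review postal code as a proxy feature")
```

Here group_a is approved 60% of the time and group_b 36% of the time, so the ratio is 0.60, below the 0.8 screening threshold. A low ratio does not by itself prove unlawful discrimination, and a ratio above 0.8 does not rule it out; the result is a signal to investigate whether postal code is acting as a proxy and whether its use can be justified.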

15. A. When a product deviates from its intended design because of an error in production or in deploying an update, it has a manufacturing defect. Even if the original design was sound, an improperly executed firmware update that causes harm can trigger strict liability under product liability law. If the design of the update itself was unsafe, the claim could instead be a design defect. For exam purposes, a manufacturing defect is the best fit.

16. C. Social scoring systems, whether operated by public authorities or private entities, are explicitly prohibited under the EU AI Act. These systems are considered incompatible with EU values and fundamental rights because they create discriminatory and manipulative effects that controls or transparency cannot adequately mitigate.

17. B. High-risk AI systems must undergo a conformity assessment (either through the provider’s internal control procedure or via a third-party notified body) and bear CE marking before entering the market. This process verifies compliance with safety, transparency, and human oversight obligations. Submitting proprietary source code is not a precondition for market entry, but providers must maintain traceable technical documentation and support auditability.

18. D. The EU AI Act has extraterritorial reach. Non-EU providers placing AI systems on the EU market must appoint an authorized representative established in the EU to liaise with regulators, verify that conformity assessments and technical documentation are in place, and keep that documentation available to authorities. This requirement extends to general-purpose AI (GPAI) models integrated into downstream EU-facing applications.

19. B. The NIST AI Risk Management Framework (AI RMF) defines strategic goals and four core functions (Govern, Map, Measure, and Manage) for managing AI risk. The NIST Assessing Risks and Impacts of AI (ARIA) program builds on it by developing evaluation methods, metrics, and tools that help organizations translate those principles into operational practice. ARIA is a research and evaluation initiative; it does not replace or supersede the AI RMF, which is itself a voluntary framework.

20. A. ISO/IEC 42001 follows the harmonized structure shared by other ISO management system standards, allowing organizations to integrate AI governance into established quality or information security management systems. This approach consolidates documentation, audits, and improvement processes, strengthening organizational coherence while reducing redundancy.

21. C. ISO/IEC 22989 standardizes definitions of key AI terms, such as transparency, autonomy, and explainability, creating a shared vocabulary for developers, policymakers, and auditors. Using these standard terms reduces ambiguity in technical documentation and contracts and promotes cross-sector and cross-border alignment in AI governance.

22. A. Overly complex models often reduce explainability and transparency. Governance frameworks emphasize model interpretability as a cornerstone of accountability, particularly in regulated sectors. When a simpler, equally effective alternative exists, added complexity can obscure bias and error analysis. It also enlarges the attack surface, which can make the system harder to protect from malicious input and other attacks, and it increases the governance burden by requiring more effort to assess, monitor, and mitigate issues.

23. B. Impact assessments are only effective if their findings are reviewed, approved, and integrated into development and deployment processes. Failing to act on the results of an AI impact assessment (AIA) defeats its purpose and reflects a breakdown in the feedback loop essential to continuous governance improvement. Note that a decision to accept an individual risk is permissible, provided it is made in accordance with defined governance roles and responsibilities.

24. D. Documentation, testing, and explainability controls built into the design phase create defensibility and traceability throughout the lifecycle. Proper documentation and transparency enable organizations to demonstrate compliance and respond to audits or litigation.