
- Publisher: The History Press

- Category: Science and new technologies

- Language: English

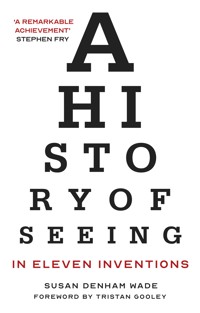

'A remarkable achievement' - Stephen Fry

In 2015 #thedress captured the world's imagination. Was the dress in the picture white and gold or blue and black? It inspired the author to ask: if people in the same time and place can see the same thing differently, how did people in distant times and places see the world? Jam-packed with fascinating stories, facts and insights and impeccably researched, A History of Seeing in Eleven Inventions investigates the story of seeing from the evolution of eyes 500 million years ago to the present day. Time after time, it reveals, inventions that changed how people saw the world ended up changing it altogether. Twenty-first-century life is more visual than ever, and seeing overwhelmingly dominates our senses. Can our eyes keep up with technology? Have we gone as far as the eye can see?


Page count: 584

Publication year: 2019


For Rob, Charlie, Stella, Rosie and Hattie Boo

First published by The History Press as As Far As The Eye Can See: A History of Seeing, 2019

This updated paperback edition first published 2021

FLINT is an imprint of The History Press

97 St George’s Place, Cheltenham,

Gloucestershire, GL50 3QB

www.flintbooks.co.uk

© Susan Denham Wade, 2019, 2021

The right of Susan Denham Wade to be identified as the Author of this work has been asserted in accordance with the Copyright, Designs and Patents Act 1988.

All rights reserved. No part of this book may be reprinted or reproduced or utilised in any form or by any electronic, mechanical or other means, now known or hereafter invented, including photocopying and recording, or in any information storage or retrieval system, without the permission in writing from the Publishers.

British Library Cataloguing in Publication Data.

A catalogue record for this book is available from the British Library.

ISBN 978 0 7509 9294 7

Typesetting and origination by The History Press

Printed and bound in Turkey by Imak.

eBook converted by Geethik Technologies

CONTENTS

Foreword by Tristan Gooley

Preface

Prologue: 2015

Becoming: How We See

1 You See Tomayto, I See Tomarto: The Subjective Art of Seeing

2 Perfect and Complex: Eyes in Evolution

Transforming: The Visual Technologies that Begat History

3 Stolen from the Gods: Firelight

4 From the Eye to the Pencil: Art

5 From Eye to I: Mirrors

6 Geometry of the Soul: Writing

Believing: When We Didn’t See

7 Amongst Barbarians: The Age of the Invisible

8 Through a Glass, Clearly: Spectacles

Observing: The Optical Tools that Made the Modern World

9 Gunpowder for the Mind: The Printing Press

10 The Eye, Extended: The Telescope

11 In Love with Night: Industrialised Light

Showing: Mass Media and the Conquest of Seeing

12 Nature’s Pencil: Photography

13 Surpassing Imagination: Moving Images

14 Seeing, Weaponised: Smartphones

15 An All-Seeing World

Epilogue: 2019

Postscript: 2021

Notes

Bibliography

Index

FOREWORD BY TRISTAN GOOLEY

Without a brain, we would be little more than sacks of water, proteins and fats. And without our senses, our brain is tofu. Not literally, but as good as.

Our senses give our brain the information it needs to understand our world and make decisions about ways to improve our lives and avoid danger. The senses are the keys to a richer, more dynamic and safer life. Sight is the most powerful sense for more than 99 per cent of the population.

But there is a problem. We don’t know what we’re doing.

We muddle through life with a pair of super-tools bulging out of the front of our skull, hoping to learn how to use them as we go. We pick up some vague clues along the way by studying the behaviour of others. We learn that watching YouTube videos doesn’t make us wise – it makes us fat.

Our eyes are the most extraordinary tools we will ever use, but they come with no manual. And most of us wouldn’t read a book of dry instructions even if we were handed one. Fortunately, Denham Wade shows us what we need to know through the colourful lens of a cultural history of our relationship with this sense. And she brings this history into our lives vividly, bridging vast gaps so that we can see the past. We learn that individualism flourished soon after the first polished mirrors appeared in Turkey.

It is this weaving of world history and very personal history that thrills. Did you know that overweight people overestimate distances or that we see ourselves and our partners as better looking than we are? I didn’t, but it did make me think. It doesn’t apply to me, I’m sure, but it is very clever writing that tickles our weaknesses. We absolutely must find out how others see us: we are powerless against our own vanity.

In his book Sapiens, Yuval Noah Harari gave us a portrait of our broad family history. A History of Seeing in Eleven Inventions paints a picture that is more intimate, closer both physically and in time.

After reading this book, I could see how things were not as they first looked. You’ll view things differently too.

PREFACE

‘No history of anything,’ a wise man once said, ‘will ever include more than it leaves out.’1 It is difficult to imagine a better exemplar of this insight than the history explored in this book. Seeing in some form or other has been around for hundreds of millions of years. It is a near universal but highly subjective experience among humans and across the animal kingdom. It is a complex neuro-physiological process that natural philosophers have struggled to understand for centuries, and its deeper workings are only just beginning to be unravelled. What’s more, there are dozens of fascinating ways seeing can go wrong.

The definitive history of seeing may one day be written, but that day has not yet come.

In researching and writing this book I have picked a course through the millions of words written about the many different aspects of seeing. I’ve forged a path through a dense forest that traverses dozens of different fields of expertise. There is a logic to my path but others would inevitably have made different choices along the way. One way or another, a lot of territory remains unexplored. Despite my best efforts there are, no doubt, twists and turns and views I’ve failed to spot along the way, and the odd misstep. I apologise in advance for these, and welcome correction.

Susan Denham Wade

PROLOGUE: 2015

Early in 2015, Grace McGregor was looking forward to her wedding on the tiny island of Colonsay, two and a half hours by boat from the Scottish mainland. As the bride-to-be was making her plans, 300 miles away in Blackpool her mother, Cecilia, was shopping for her mother-of-the-bride outfit. Cecilia sent Grace pictures of several dresses she was thinking of buying, taken in a store on her partner’s phone, then called her from the store.

Grace asked her mother which one she liked best.

‘The third one,’ said Cecilia.

‘Oh you mean the white and gold one?’ said Grace.

‘No. It’s blue and black,’ said Cecilia.

‘Mum, that’s white and gold,’ said Grace. When Cecilia insisted the dress was blue and black, Grace showed the picture to her fiancé, Keir. He agreed with Cecilia that the dress was blue and black. Keir’s father was called in from next door to give an opinion. He thought it was white and gold.

The debate continued and spread to the couple’s friends and family. Some people saw the dress in Cecilia’s picture as blue and black, some saw white and gold. After a few weeks of local arguments, a friend of the couple called Caitlin McNeill put the photo on the social media website Tumblr and asked her followers to ‘please help me – is the dress white and gold, or blue and black? Me and my friends can’t agree …’1

Within half an hour the picture found its way onto Twitter and with that became a hashtag: #thedress. It spread around the web like wildfire. BuzzFeed picked it up and asked its users to vote for white and gold, or blue and black. Now the Twittersphere erupted. At its peak, the hashtag was tweeted more than 11,000 times per minute. Eleven million tweets in total were posted overnight. Comments came from reality TV star Kim Kardashian (white and gold), who disagreed with her husband, Kanye West (blue and black). Pop stars Justin Bieber and Taylor Swift also saw blue and black, the latter tweeting that she felt ‘confused and scared’ by the phenomenon.

The next morning the picture featured on television news reports around the world, with newscasters arguing on air about the colours of the dress. Not only could no one agree, they couldn’t comprehend how anyone else could see it differently from themselves. Even when they were told the dress was blue and black people couldn’t change the way they saw the image.

As the debate continued, the media tracked down the family behind the original photograph. The weekend before #thedress went viral Grace and Keir had had their wedding as planned and gone off on honeymoon. The Ellen DeGeneres chat show persuaded them to cut their holiday short and flew the whole party to the US to tell their story live on air. At the opening of the show Ellen showed the studio audience the original image – with which they were clearly already familiar – and asked them to indicate whether they saw blue and black, or white and gold. Sure enough, they were split. Later in the show she brought on Grace and Keir and their friend, Caitlin. They told their story on air, and the couple were rewarded with another honeymoon, this time in the Caribbean, and $10,000 cash to ‘start their new lives’.

The climax of the interview came when Ellen called Grace’s mother, Cecilia, onto the set. She walked on stage to cheers and applause, wearing the world’s most famous dress. It was, unmistakably, blue and black.

BECOMING

HOW WE SEE

1

YOU SEE TOMAYTO, I SEE TOMARTO: THE SUBJECTIVE ART OF SEEING

Every man takes the limits of his own field of vision for the limits of the world.

Arthur Schopenhauer, Studies in Pessimism, 1851

Had I been born 500 years earlier I would be blind. In their natural state my eyes see a world with no lines. Shapes are smudges and faces are blank. Colours merge into a murky brown and distances collapse to a single plane a few feet away. Everything is a complete blur.

But I was lucky enough to be born in the twentieth century. From the age of 8 I wore glasses and from 14 contact lenses – life-changing medical interventions so familiar they’re hardly even thought of as technology – and my extreme myopia was corrected. As long as I had my specs on or my contacts in, I could live the same life as someone with 20/20 vision.

A few years ago I started fretting about my poor eyesight. What if there was a fire in the night and I had to leave the house without grabbing my specs? What if I got stuck somewhere for days with no glasses or spare contacts? I would be utterly helpless. It was a silly fear perhaps, but real; the universe of possible disasters expands as we get older, I’ve noticed. In any case, after more than thirty years I was tired of wearing glasses and fiddling around with contact lenses. Every week, it seemed, someone else regaled me with their successful laser surgery story. The time seemed right to take the plunge myself.

It turned out I was too blind for laser surgery, but I was eligible for lens replacement. That’s the Clockwork Orange procedure where you sit in a chair with your eyes clamped open while the surgeon mashes up your lens, plucks it out, and replaces it with an artificial one that corrects the faulty sight. Thousands of people undergo the same procedure every day to treat cataracts. After a couple of mishaps and a bit more painless visual torture it worked. Now, for the first time in my life, when I wake up in the morning I can see exactly what the next person can see.

But I don’t. And neither do you.

Looking Alike?

In Western societies more than 99 per cent of people share the daily experience of sight.* We can look at the objects around us and describe them using the same words: a red apple, a white cup, a wooden chair. We can recognise each other when we meet and translate marks on a page into language. The shared experience of our visual world seems complete: the world is as we all see it, together.

But the sense of commonality about what we see is an illusion. While two people may have identical visual capability, and so can see the same, no two people do see exactly the same. Every aspect of visual perception is subjective, unique to the perceiver. It isn’t just beauty that is in the eye of the beholder, it is every single thing we see.

This subjectivity is down to the way seeing works. Human and all other vertebrate eyes are called ‘simple’ eyes as they have only one lens (insects and other arthropods have ‘compound’ eyes with many lenses). Simple eyes are structured superficially like a camera. Light comes in through a small opening (the pupil) and a lens focuses it onto a light-sensitive area at the rear of the eyeball (the retina), just as a camera lens focuses light coming in through the aperture onto a film. That’s where the analogy ends, however. Unlike a camera, eyes don’t capture the image in front of them then send it off ‘upstairs’ to the brain to be developed, like a film going off for processing. Vision is a pathway, an information processing system,1 from the way the eyes gather visual information to analysing its components, to building up a conscious perception of sight and recognising the scene being observed. Different parts of the brain are involved at each stage, bringing each individual’s experience, memory, expectations, goals and desires to bear on everything they see – or don’t see. Neuroscientists call gathering and processing light signals – the physical seeing, if you like – ‘bottom-up’ processing, and the mechanisms the brain uses to influence vision – turning seeing into perceiving – ‘top-down’ processing. It’s only in the last few decades that they have begun to understand the interplay between them.

The brain’s involvement in seeing starts before we even see anything, with the way the eye gathers visual information. While cameras capture an entire image in one shot, eyes don’t. A photographic film has light-sensitive chemicals spread evenly across it, so the whole surface of the film reacts equally and immediately with the light that comes in through the aperture. The retina is very different. It is an outpost of the brain, formed in the early weeks of pregnancy from the same neural tissues as the embryonic brain and covered in neurons. Two types of photoreceptors – rods and cones – detect light and turn it into electric signals. They do quite different things and are spread very unevenly across the retina.

Cones can detect colours and provide excellent visual acuity but need relatively bright light. We use them for high-resolution daytime (or artificially lit) vision. Most of the eye’s 6 million cones are concentrated in a tiny area in the centre of the retina called the fovea, the eye’s central point of focus. They run out quite quickly away from the focal point.

Rods are roughly a thousand times more light sensitive than cones, capable of seeing a single photon, the smallest unit of light. They are extremely good at detecting motion, but they cannot see colours and they provide relatively poor resolution. That is why our night vision is colour-blind and relatively blurry. There are around twenty times as many rods as cones, clustered in the mid to inner part of the retina, outside the fovea, and continuing out in gradually decreasing density to the retina’s edge.* Rods provide our night and peripheral vision.

The concentration of cones in the tiny fovea means eyes can only focus on a very small area at a time. At an arm’s length from the eye, the zone of sharp focus is only about the size of a postage stamp. Test this for yourself by holding up this book with your arm outstretched and looking at a single word. All the words around it will be blurred. We compensate for this tiny focal area by constantly and very rapidly moving our eyes around whatever we are looking at, three or four times per second in tiny subconscious movements called saccades, gathering more and more detailed information.

Eyes don’t move like a printer scanning a document section by section. They move around a scene in all directions, fixing momentarily on something, then moving on, piecing an image together one postage stamp at a time. In the 1960s a Russian psychologist called Alfred Yarbus devised an evil-looking apparatus with suction cups like giant contact lenses that he placed over his subject’s eyes while a camera tracked and recorded where they moved as they looked at various images. He traced the recorded movements onto the images they were looking at, showing the course of the eyes’ journey and where they paused.

Yarbus discovered several extremely important things about vision. Firstly, saccades aren’t systematic, but nor are they random. Eyes don’t attempt to get around the entire scene being observed, but seek out the information that is most useful. From a biological point of view the most ‘useful’ information is what helps us survive. Thus, as Yarbus demonstrated, eyes are drawn towards images of other living creatures, especially humans, and particularly the face, eyes and mouth. These are the most important body parts for survival because they reveal important information about a person’s intention and mood.

Yarbus also discovered that, when looking at a scene, our eyes try to interpret it in a narrative way, to piece together a story that tells us what is going on. Eyes move back and forward from one character to another, and to details in the scene the brain thinks will be important in understanding what is happening. Somehow our eyes and brain stitch all this together into a coherent impression of what we are looking at, ignoring the movements in between each eye fix. It’s similar to the way a film editor works, cutting together various shots to guide the audience through a scene’s story. Incidentally, film editors have learned that editing cuts are most pleasing to the audience if they are made during motion. Harvard neuroscientist Margaret Livingstone believes this is because our visual system is accustomed to processing a series of shifting scenes (eye fixes) separated by movement.2

Later eye movement studies reveal that a person’s cultural background can influence their gaze patterns. In one experiment two groups, one Western and one East Asian, were shown a series of images of a central object set against a background scene, such as a tiger in a forest or a plane flying over a mountain range. The Western group tended to focus on the main object, while the East Asian group tended to shift their gaze between the main object and the background.3 The researchers proposed the reason for the difference was that Western culture values individuality and independence, hence the focus on the central character, while East Asian cultures are more interdependent, and thus those subjects were more interested in the context within which the central object was placed.

More recent neurological studies have shed light on this early stage of visual information gathering and how it contributes to vision. Light signals from the retina travel to two places – the thalamus, of which more in a minute, and the superior colliculus. The superior colliculus, which also has a major role in controlling head and eye movements, combines light signals from the retina with input from other parts of the brain – including areas responsible for memory and intention – to determine where the eye looks next. Have you ever looked around suddenly, but not been quite sure why? This was probably because the rods in your peripheral vision unconsciously detected some sort of movement, and your top-down system, realising this might mean danger, directed your eyes to examine the situation more closely. This is a basic survival response.4 Thus from the very outset seeing is a combination of eye and brain, whether we realise it or not.

The top-down brain is also pivotal in filling in the parts of the scene that the eyes don’t actively focus on. The rods and cones outside the foveal area provide a rough visual indication of the scene surrounding the focal point, and the brain fills in the rest from memory and experience. This gives us the confident – but quite erroneous – impression that we’ve seen the entire scene.

Sometimes our brains direct our eyes to focus on the wrong things, leading us to miss important information. This is the stock in trade of magicians, fairground tricksters and pickpockets. They are all experts at getting us to focus on irrelevant details while they deceive us before our very eyes. Even the apparently unmissable can become invisible when we are focusing on something else. In 1999, a Harvard research team showed subjects a video of a group of people throwing a ball and asked them to count the number of passes made. Half of the subjects didn’t notice a gorilla walking right across the court as they were watching.5 Similarly, we often miss quite major changes to what we are seeing. The same Harvard team sent a researcher posing as a tourist into a park to ask a passer-by for directions. As the researcher/tourist and the passer-by talked, two other team members walked between them carrying a door and swapped the ‘tourist’ for another researcher. Most of the passers-by didn’t notice the change and carried on talking to the second researcher.

As light signals are received from the fovea and the rest of the retina they travel to the thalamus and are relayed from there to the visual cortex located at the back of the brain. This first stage of processing deals with basic visual signals such as whether a line is horizontal, vertical or diagonal, and was discovered in the 1950s by physiologists David Hubel and Torsten Wiesel. In a ground-breaking study, they inserted microscopic electrodes into individual cells within the visual cortex of a cat’s brain. They immobilised the cat with its eyes trained onto a screen and attempted to record its brain’s responses to different light patterns. Over several days they shone lights all over the screen but couldn’t get any of the cat’s brain cells to respond. Eventually they tried a glass slide with a small paper dot stuck on it. As they moved the slide around they finally got a response: a single cell in the cat’s brain started firing. They continued moving the slide around, trying to pin down where on the screen the dot set off the active brain cell. After many puzzled hours they realised it wasn’t the dot that was causing the cell to respond, but the diagonal shadow cast by the edge of the slide when it moved across the face of the projector.

This was a completely unexpected result. After many more experiments the pair concluded that within a part of the visual cortex (now known as V1) each of the millions of cells is programmed to respond to a single, very specific visual feature. A different cell responds to each of /, \, –, |, and so on. From these basic signals the brain can quickly build an outline of a scene – effectively a line drawing of whatever the eye is looking at. This is why we naturally recognise simple line drawings: they replicate the most basic way the brain processes images.
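The idea of one cell per line orientation can be mimicked in a few lines of code. What follows is only a toy sketch, not a model of real neurons: the 3×3 patch, the four templates and the all-or-nothing matching rule are assumptions made purely for illustration, and the function names are hypothetical.

```python
# Toy illustration only: four hypothetical "cells", each tuned to one line
# orientation inside a 3x3 patch of pixels (1 = lit, 0 = dark).
# The templates and the exact-match rule are assumptions for this sketch.

TEMPLATES = {
    "|": {(0, 1), (1, 1), (2, 1)},   # vertical line
    "-": {(1, 0), (1, 1), (1, 2)},   # horizontal line
    "/": {(2, 0), (1, 1), (0, 2)},   # diagonal rising left to right
    "\\": {(0, 0), (1, 1), (2, 2)},  # diagonal falling left to right
}


def cell_fires(patch, orientation):
    """Return True only if the lit pixels are exactly the template's line."""
    on_line = TEMPLATES[orientation]
    for row in range(3):
        for col in range(3):
            lit = patch[row][col] == 1
            if lit != ((row, col) in on_line):
                return False
    return True


def responding_cells(patch):
    """List the orientations whose cell fires for this patch."""
    return [name for name in TEMPLATES if cell_fires(patch, name)]


diagonal_edge = [[0, 0, 1],
                 [0, 1, 0],
                 [1, 0, 0]]
single_dot = [[0, 0, 0],
              [0, 1, 0],
              [0, 0, 0]]

print(responding_cells(diagonal_edge))  # ['/']
print(responding_cells(single_dot))     # []
```

In this sketch the diagonal edge sets off exactly one cell while the dot sets off none, loosely echoing what happened with the slide and its shadow.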

Hubel and Wiesel’s experiments were revolutionary because they showed that the brain doesn’t actively analyse visual information. On the contrary, it reacts. Each individual cell within V1 either fires or doesn’t fire automatically in response to the visual properties of a particular light signal. In the next stage of processing, V2, specific cells respond to contours, textures and location. Once again depending on the visual characteristics of each object – in this case, say, colour, shape, or movement – certain cells do or don’t fire. Perception is formed by the combination of all the cells that fire in response to an image’s various visual properties. This was an extraordinary conclusion and entirely contrary to what researchers had assumed up to that point. Hubel and Wiesel later won the Nobel Prize (1981) for this work (though no prizes for kindness to cats) and their insights have underpinned research into the workings of the visual system since.

From the visual cortex, information is relayed through one of two pathways – the ‘Where’ pathway, common to all mammals, and the ‘What’ system that we share with only a few species. The ‘Where’ pathway is located in the parietal lobe at the top of the brain towards the back. It is colour blind but detects motion and depth, separates objects from their background, and places things in space. These are the basic aspects of vision required for survival, as they enable the seer to detect possible food sources and danger, and to move within their environment.

The second pathway, the ‘What’ system, takes place in the temporal lobe at the sides of the brain, over the ears. This pathway perceives colours and, critically, recognises things. It is a more sophisticated system than the ‘Where’ system and is thought to be present only in humans and other primates and, possibly, dogs.6

The human ‘What’ system has a particular region in the brain dedicated to recognising faces – a function of our deep history as a social species in which faces are extremely important to our survival (and why our focus is drawn to faces, as we’ve seen). That is why a young child’s drawings of a person are almost always of a face with stick arms and legs: the face is instinctively what is most important. When we look at faces our brains compare the features we see with a stored database of ‘average’ facial features – eye width, length of face, nose size and so on – all within a split second. Caricaturists exploit this to create pictures that exaggerate the facial features that differ most from the norm. We recognise these images instantly because the artist is deliberately doing the same thing our brain does unconsciously.7

As we saw with the Yarbus experiments, the objective of visual processing is to understand what is going on around us, rather than to establish an accurate optical representation. Some top-down mechanisms add information to an image, wh ch i w y yo c n rea his se t nce. Others take away extraneous detail or adjust what we see to compensate for ambiguity. These measures allow us to survive and thrive but also leave us vulnerable to a wide variety of optical errors and illusions.* Many of these are so powerful that, even when we know what the illusion is, it is impossible to ‘see’ the optical reality. Consider a chessboard in partial shadow. In terms of its optical properties, a dark square in bright light might be lighter than a light square in shadow. Nevertheless, our eyes will always see a darkened light square as lighter than a brightly lit dark square. Our top-down system is using our past experience and memory to direct our perception here. It is an interesting philosophical question as to which version of the chessboard represents the ‘truth’.
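The chessboard case can be put into rough numbers. The sketch below assumes that the light reaching the eye from a surface is simply its reflectance multiplied by the light falling on it; the percentages and illumination values are invented for illustration, not measurements.

```python
# Assumed, made-up values: reflectance is the percentage of incoming light a
# square bounces back; illumination is in arbitrary units.

def light_reaching_eye(reflectance_percent, illumination):
    """Light sent towards the eye, in arbitrary units (simple product)."""
    return reflectance_percent * illumination


# A dark square (reflects 30%) sitting in bright light (100 units).
dark_square_in_light = light_reaching_eye(30, 100)

# A light square (reflects 80%) sitting in shadow (25 units).
light_square_in_shadow = light_reaching_eye(80, 25)

# Optically, the dark square sends more light to the eye than the light one.
print(dark_square_in_light > light_square_in_shadow)  # True
```

With these assumed figures the dark square is optically the brighter of the two, yet the visual system still reports the shadowed light square as the lighter one.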

Bearing in mind the complexity of the visual processing system, and the varying role the brain plays at every stage of it, one may well imagine that people of different backgrounds might see the same thing differently. Recent research has uncovered several examples of significant perceptual differences across groups.

A 2016 study demonstrated that obese people perceive distances differently from people of average weight. When asked to judge a 25m distance, a 150kg person estimated its distance as 30m, while a 60kg person judged the same distance to be 15m. The researchers put this down to a link between a person’s perception and their ability to act – the assumption being an obese person would find it more difficult than a slim person to travel the same distance.8

The Himba tribe in Namibia continue to live a traditional life away from Western influences. Their language describes colours completely differently from ours. One colour, called Dambu, includes a variety of what we describe as greens, reds, beige and yellows (they describe white people as Dambu). Another colour, Zuzu, describes most dark colours, including black, dark red, dark purple, dark green and dark blue. A third, Buru, includes various blues and greens. Within their language and colour system, what we would call different shades of the single colour green might belong to three different colour families.

In 2006 researchers put this to the test.9 They showed Himba people a set of twelve tiles, of which eleven were the same colour and one was different. In the first test the tiles were all green, with one being a slightly different shade. To most Westerners the tiles looked identical, but the Himba volunteers spotted the odd one out immediately. The second experiment showed the volunteers eleven identical green tiles and one that, to Western eyes, immediately stood out as being blue. The Himba, however, had difficulty differentiating this tile from the others.

Do You See What I See?

Around the time of my eye surgery #thedress happened. At a time when vision was uppermost in my mind, I started wondering: if it is so easy for people living in a similar time and place to see things differently, how different must the world have looked to people living hundreds or even thousands of years ago?

This was never going to be an easy question to answer. Nevertheless, I began digging around looking for clues. I read books and academic articles, visited museums, galleries and ancient places, and talked to experts. While I couldn’t see through the eyes of ancient peoples, I discovered a lot of things that surprised and intrigued me. The more I found out, the more intrigued I became.

Eyes, I discovered, have existed longer than any other part of our body. Their structure has remained virtually unchanged through most of evolutionary history, even while the heads and bodies that housed them changed dramatically. Our eyes are almost identical to those of the very earliest vertebrates – our ancestors – eel-like creatures who lived in the sea more than 500 million years ago (mya).

But the most primitive eyes go back 100 million years further than that, back to the time when every living thing on Earth was still microscopic, until something triggered an explosion of frenzied evolution that resulted in the earliest animal kingdom. What sparked that explosion isn’t certain, but a good candidate for the trigger is those primitive eyes. Millions of years before anyone coined the term, eyes may have been the original super‑disruptor.

When the hominids – the immediate ancestors of humans – came along, they weren’t content with their natural vision, venerable or not. They mastered fire, the first disruptive technology, giving them precious light through the night for hundreds of thousands of years, and changing humankind’s place in the ecosystem forever.

Many thousands of years later, their descendants started making images of the world around them – pictures. Art was born. A few millennia after that they discovered how to polish glass into a mirror and see themselves reflected back. Then, just a few centuries further on, someone invented the first writing system, which eventually captured spoken language in a visual form. Writing was the beginning of what we call history and enabled the world’s first civilisations to develop.

Centuries later, an Italian artisan ground two glass discs into lenses, joined them together in a frame and made spectacles. Two hundred years after that, a German goldsmith invented the printing press and spread literacy and learning throughout the known world, with dramatic consequences. A century and a half later a Dutchman put two lenses in a tube and created a telescope, tilting the world on its intellectual axis. Many scientific advances and a few more centuries on, light was released from the bounds of the hearth and the wick and rechannelled into pipes that lit city streets, homes and the new factories like never before. The modern world as we know it had arrived.

In the nineteenth-century spirit of active enquiry and enterprise, two amateur scientists invented different versions of photography within weeks of one another. By the end of that century, still images had become motion pictures, which eventually came into homes as television.

Then just over a decade ago, a charismatic Californian entrepreneur launched the first smartphone. We’ve had our eyes glued to glowing screens ever since.

Each of these eleven inventions – firelight, pictures, mirrors, writing, spectacles, the printing press, telescopes, industrialised light, photography, motion pictures and smartphones – changed the way people saw the world. But each of them also changed the world into which they came: some immediately and with great fanfare, others more slowly and subtly but, I argue, no less dramatically. With each new visual technology the world was seen differently and became a different world. And with each new invention, vision slowly eclipsed our other senses, eventually relegating them to supporting roles in pleasure and leisure.

A dozen years into the smartphone era, it’s time to take a look back at previous epoch-defining visual discoveries and ask ourselves the question: have we gone as far as the eye can see?

____________

1 World Health Organisation figures state there are 36 million blind people in the world, and 217 million with moderate to severe vision impairment, based overwhelmingly in low income countries. (WHO Fact Sheet #213, accessed at www.who.int/en/news-room/fact-sheets/detail/blindness-and-visual-impairment.)

2 You can see the difference between rods and cones by holding a coloured object at arm’s length in front of you. Holding your gaze to the front, slowly move the object around to the side, wiggling it as you go. Quite quickly you will no longer see the object’s colour, and it will become very blurry, but you will continue to be aware of movement even when you can’t see what’s causing it.

3 German musician and visual artist Michael Bach has a wonderful set of optical illusions online at www.michaelbach.de/ot; a short video of optical illusions is at www.youtube.com/watch?time_continue=76&v=z9Sen1HTu5o.

2

PERFECT AND COMPLEX: EYES IN EVOLUTION

To suppose that the eye with all its inimitable contrivances for adjusting the focus to different distances, for admitting different amounts of light, and for the correction of spherical and chromatic aberration, could have been formed by natural selection, seems, I confess, absurd in the highest degree … [But] The difficulty of believing that a perfect and complex eye could be formed by natural selection, though insuperable by our imagination, should not be considered subversive of the theory.

Charles Darwin, On the Origin of Species, 18591

In the beginning, there was light, but nothing was there to see it.

For several hundred million years after a giant cloud of gas and dust formed our solar system, the Earth was a molten mass of turbulence. Eventually the surface solidified into great, grey continents peppered with rumbling volcanoes and surrounded by vast reaches of ocean. The Sun shone by day and the Moon by night.

There was light and dark, lightning and rain, rocks and water, but it all went by unnoticed.

One day, about 3.8 billion years ago, the first life appeared. No one really knows how it happened. Tiny, single-celled organisms lived underwater, drifting around in the warm, chemical-rich currents rising below the surface of the young planet’s huge seas.

Life moved very, very slowly for about 3 billion years. Gradually the single-celled organisms evolved into different forms that would eventually become the major kingdoms of living things: bacteria, algae, fungi, plants, and animals. Around 650 mya some organisms developed a bilateral body form, with a front and back, and left and right sides. They were still too small to see and, aside from a microscopic mouth and anus, were featureless, worm-like blobs. It was only millions of years later when experts examined their fossilised forms under powerful microscopes that any of these changes were distinguishable. The bilateral group split further into what would eventually become the vertebrates (mammals, birds, fish, and reptiles) and the arthropods (insects, spiders and crustaceans) about 600 mya, but all remained invisible to the naked eye.

Then about 540 mya, something changed. After 3 billion years of glacial change the microscopic animal kingdom exploded into a frenzy of evolution. Creatures morphed, died out, grew, proliferated, changed, died out, mutated, reproduced, proliferated, died out, reproduced, mutated, thrived, grew features, reproduced, grew, died out, mutated and proliferated, again and again, generation after generation.

After about 20 million years of frenetic change, everything slowed down again. Winning species emerged and relatively stable ecosystems were established. But the underwater world was transformed. Murkily invisible swarms of microscopic life were replaced with complex, visible flora and fauna, a diverse range of species both large and small. They had characteristic shapes and features including limbs, teeth, antennae, gills, shells, spines and claws. Some had optical features like stripes and iridescent colours.

At the top of the food chain was Anomalocaris, a large predator with a soft, segmented body shaped a bit like a stingray. It had a fish-like tail and a pair of hooked, grasping appendages projecting from its head, between two bulging eyes, and it could grow up to 6ft long. There were many varieties of trilobite, giant woodlice-type creatures with long antennae and a pair of compound eyes on the top of the head. Opabinia was a bit like a prawn with five eyes and a long nozzle appendage at the front. The predator Nectocaris vaguely resembled a squid and had eyes on short stalks.2 They hunted and were hunted, scavenged, reproduced, lived and died under the sea for tens of millions of years until an extinction event wiped many of them out around 488 mya.3

Palaeontologists call the period of rapid evolution – known as adaptive radiation – that happened 540 mya the Cambrian Explosion. It is probably the most important evolutionary event in the history of life on Earth. It is also one of the biggest mysteries. Before the Cambrian Explosion life forms comprised undistinguished, microscopic organisms. Just 20 million years later there was a richly diverse, and visible, underwater ecosystem. No one knows exactly what happened in the intervening years.

The animals that appeared during the Cambrian Explosion are documented in great detail by modern palaeontologists thanks to some catastrophic events that rocked the underwater world more than half a billion years ago. Very occasionally, a huge expanse of mountainside slid suddenly down a steep underwater slope onto the seabed below, engulfing all the surrounding plants and animals. These particular mudslides were formed of exceptionally fine sediment that immediately made its way into every nook and cranny of the unsuspecting wildlife below. The events happened so suddenly and with such force that all the oxygen and bacteria present in the ecosystem were evacuated, leaving the captured organisms vacuum-packed in the exquisitely insidious mud.

The trapped organisms turned into extraordinarily complete and detailed fossils – almost perfect three-dimensional snapshots of the scene at the moment of devastation. Unlike most fossils that form gradually as silt builds up over a decaying organism, these were formed almost instantaneously, capturing soft tissues that usually rot away long before fossilisation takes place, sometimes down to microscopic details. They revealed species and body parts that almost never make it into the fossil record, including eyes.

The aquatic communities these extraordinary mudslides captured were frozen in time – Pompeiis of some of the earliest visible life on Earth – until just over a century ago. In the summer of 1909, self-taught palaeontologist and geologist Charles Doolittle Walcott took his family on a fossil-hunting holiday in the Canadian Rockies. They collected a large haul of fossils including species they had never seen before, and ‘several slabs of rock to break up at home’.4

Walcott had stumbled upon a Konservat-Lagerstätte (from the German for ‘conserving storage place’), an equatorial marine-scape frozen in time around 508 mya, just a few million years after the Cambrian Explosion. Over eons the Earth had shifted, moving the ocean bed from the equator to western Canada. The Burgess Shale, as the fossil field that Walcott discovered is now called, revealed more than 150 new species and more than 200,000 extraordinarily well-preserved specimens.

In the century since Walcott’s find, palaeontologists have uncovered other Cambrian Era Lagerstätten in Australia, China, Greenland and Utah, USA. They reveal that many of the Cambrian animals had eyes. There were creatures with two eyes, four, and more. Some were on stalks, some at the front of the head, others at the back or the side, some scattered over the body. Eyes varied but they were not unusual. Analysis of particularly well-preserved fossils has revealed that compound eyes from as long ago as 520 million years had vision equivalent to that of a modern insect. Researchers at the Lagerstätte in Chengjiang, China, have further established that vision among the earliest animals was closely associated with a predatory lifestyle. They found that around a third of the specimens discovered had eyes, and of those with eyes, 95 per cent were mobile hunters or scavengers.5

The Light Switch Theory

The very early appearance of functioning eyes and the association of eyes with predatory behaviour prompted a theory that the development of primitive vision may have triggered the Cambrian Explosion. Oxford zoologist Andrew Parker’s ‘Light Switch’ theory maintains that, as tiny creatures slowly became more complex, some started to develop a form of vision.6 It began with a light-sensitive spot somewhere on the body then mutated further over generations until it became a primitive eye.

It is easy to imagine the survival advantage animals with even a primitive form of vision would have. They could avoid danger, and instead of floating about passively waiting for food to happen by, could seek it out.

Once creatures started actively searching for food, some inevitably became predators and others, correspondingly, prey. It was the opening line of the story of the animal kingdom. But vision alone was not enough. Remember that body forms at the outset were tiny and indistinct. To become active in seeking food, predators needed mobility and weapons. Prey needed defences and means of escape. Sure enough, evolution’s random process of mutation and proliferation gradually provided these. Predators developed claws, teeth, appendages for swimming. Prey species formed hard shells, spines, means to burrow into the sand, even camouflaging colours.

New traits evolved rapidly to out-compete other species, who responded with further adaptations. It was an evolutionary arms race between predators and prey as every species had to adapt or die.

Eventually some became new species with varied body parts, different ways of moving through the water, and diverse feeding strategies. None of this was conscious, it was all trial and error: millions of organisms reproducing, mutating, dying out, mutating, thriving, over and over again, generation by generation. It didn’t come about overnight, but in evolutionary terms it happened very fast. In the face of a rapidly changing environment, the advantage or disadvantage of a random mutation becomes apparent very quickly: creatures either survive or are quickly wiped out. When we’re talking in terms of millions of years, small changes can become major changes in a relatively short period of time.

To take the specific example of an eye, in 2004 a Danish zoologist built a theoretical model to estimate how quickly a light-sensitive patch on an animal’s skin could evolve into a sharply focused camera-type eye like our own. The model assumed a small improvement in each generation. It calculated that the entire transformation from patch to eye could take place in less than half a million generations.7 At around twenty years per generation this would take 10 million years for humans, but for a creature with a life span of months or even days, it could take no time at all.
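
The conversion from generations to years is simple multiplication, and a short sketch makes the scale easier to see. The half-million-generation bound comes from the model described above; the generation times are illustrative assumptions of mine, not figures from that model, and the function name is hypothetical.

```python
# Illustrative arithmetic only. The generation times below are assumptions
# chosen for comparison; they are not taken from the model in the text.
GENERATIONS = 500_000  # upper bound quoted above: "less than half a million"


def years_needed(generations: int, years_per_generation: float) -> float:
    """Return the elapsed years for a given number of generations."""
    return generations * years_per_generation


# Hypothetical generation times, in years.
assumed_generation_years = {
    "human (about 20 years)": 20.0,
    "animal breeding once a year": 1.0,
    "animal breeding once a month": 1.0 / 12.0,
}

for label, gen_years in assumed_generation_years.items():
    total = years_needed(GENERATIONS, gen_years)
    print(f"{label}: {total:,.0f} years")
```

Under these assumptions the human case comes to 10 million years, while a creature that breeds monthly would need only some tens of thousands of years, a blink against the 20 million years of the Cambrian Explosion.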

If vision triggered the Cambrian Explosion, as the Light Switch theory maintains, it would have to exist before the Explosion began. But all the fossil evidence for species with eyes comes from after it settled down.

There is another way to probe the deep past, however. All living things carry with them their entire evolutionary history, locked into the genes that reside in every cell. Rapid progress in genetics over the last few decades has managed to unpick that lock, allowing zoologists to reach back through time and find connections between species that physical observation can miss. Every species has thousands of different genes, each made up of complicated sequences of DNA. If different species share a matching DNA sequence, then almost certainly those species share a common ancestor, because the chance that they would both evolve the same version of something as complex as a gene independently is so small as to be virtually impossible.

In 1994, a Swiss biologist called Walter Gehring wanted to test his hypothesis that the Pax6 gene, found in every modern animal species, prompts eye growth. In a laboratory experiment he inserted Pax6 from a mouse into fruit fly embryos, in different places corresponding to particular parts of the adult fly’s body.8 The fly embryos hatched with an eye growing where the Pax6 gene had been inserted. The fly with Pax6 inserted into its antenna area grew an eye on its antenna. Another grew an eye on its leg, while another still grew one on its wing, all corresponding to where the gene had been inserted.9 But crucially, although the introduced Pax6 came from a mouse, the extra eyes weren’t mouse-type eyes. They were compound fly eyes, fully formed and responsive to light. Gehring concluded Pax6 does prompt eye growth, but doesn’t determine eye type. Eye type genes must have evolved later, after the two animal groups had diverged.

This result tells us that the common ancestor of mice and flies had the eye growth gene Pax6, so must have had some sort of primitive eyes. Every eye that exists today evolved from these, the proto-eyes of a microscopic common ancestor living 600 mya when the ancestors of mice and flies diverged. The experiment established that eyes – and therefore vision – existed 60 million years before the Cambrian Explosion.10

Darwin wrote that the idea that eyes could have evolved through natural selection seemed ‘absurd’. If the Light Switch theory is correct – and it seems to be supported by fossil and genetic evidence – eyes were not only the product of evolution, but the spark that prompted the evolution of life on Earth as we know it.11

If so, seeing wasn’t the outcome of the biggest evolutionary event in history – it was the cause. The advent of vision turned 3 billion years of settled life on its head and caused 20 million years of evolutionary chaos. Hundreds of millions of years before human technology appeared, seeing itself was the original super-disruptor.

Eternal Eyes

What about our own eyes? What has happened to eyes in the eons since they first appeared? How have they changed? What makes human eyes special? The answer to all these questions is: not much.

Humans have simple (single-lensed), camera-type eyes. Chinese palaeontologists have found fossil remains of 520-million-year-old simple eyes, but the remains weren’t complete enough to show whether they were camera-type eyes like our own, or to assess how well they could see. Again, we need to look beyond the fossil record to discover how and when human eyes evolved.

Like geneticists looking for a common gene, zoologists can trace the evolution of a particular complex trait by looking for species, living or extinct, with the same trait. If two species have a complex trait in common, and share a common ancestor, it is very likely that the common ancestor had the trait in question. In the same way that common genes indicate a historic relationship, the same complex trait is unlikely to have developed independently in two species after they diverged.

Australian neuroscientist Trevor Lamb has used this principle to trace the deep history of the human eye, working backwards up the evolutionary tree combining information from fossils, living species, and genes.12

The first steps up the human family tree are straightforward. The camera-type structure of human eyes is shared by all mammals. Birds, fish, reptiles and amphibians also have a camera-type eye structure. The last common ancestor of this group – the jawed vertebrates – lived around 420 mya, which means that camera-type eyes are at least this old.

The next step back takes us to an eel-like creature called a lamprey. The lamprey is an ancient and primitive creature; there are fossilised remains of lampreys from 360 mya13 and they are thought to have hardly changed in over 500 million years.14 It has a primitive cartilaginous skeleton, making it a vertebrate, and as such the species is of great interest to biologists studying the early evolution of vertebrates. What differentiates the lamprey from other vertebrates is that it has no jaw. Instead it has a permanently open ring of a mouth filled with spiky-looking teeth that latch on to a victim’s flesh so the lamprey can suck its blood. Lamprey was a delicacy in the Middle Ages, and King Henry I famously died after eating a ‘surfeit of lampreys’ in 1135.

Lampreys are not pretty – ‘killer lampreys’ featured in a recent horror movie15 – but for our purposes they have one feature of particular interest. Lampreys have a camera-type eye structure essentially the same as our own, with a cornea, lens, iris and eye muscles, a similar three-layered retina and photoreceptors like our rods, although they don’t have cones. This makes it extremely likely that our common early vertebrate ancestor also had camera-type eyes when the vertebrates diverged into jawed and jawless species around 500 mya.16

The trail ends here. The next step up the evolutionary family tree takes us back to a microscopic ancestor that lived 100 million years earlier, before the Cambrian Explosion. The living descendant of this creature only has a simple eye spot, indicating that the common ancestor had at best a rudimentary eye. That leaves us with the lamprey as our oldest seeing relative, giving our camera-type eyes a highly respectable vintage of at least 500 million years.

In half a million millennia camera-type eyes changed very little. Essentially the same eyes served all the descendants of our common ancestor including fish, dinosaurs, birds, mammals, reptiles and humans … and lampreys.

However, the bodies that hosted those eyes changed a lot, giving similarly structured eyes quite different capabilities. Carnivorous dinosaurs such as Tyrannosaurus rex were the first to develop heads with forward-facing eyes. This gave them binocular, three-dimensional vision, valuable for hunting and chasing prey.

Predators that are low to the ground and hunt by ambushing, such as crocodiles, snakes, and some small cats, have pupils with vertical slits that fine-tune their distance perception so they can judge the moment of attack precisely.

Prey species, meanwhile, evolved visual traits to help them evade capture. Hunted animals need to maximise their field of vision to detect potential predators. Many herbivores evolved protruding eyes on the sides of their heads, like rabbits, and have almost 360-degree vision. Some grazing animals including sheep, goats and antelopes developed horizontal postbox-shaped pupils, to further improve their field of vision, and eyes that swivel when they bend down to graze, to keep their pupils horizontal and on the lookout.17 Nocturnal animals such as tarsiers, the small googly-eyed primates, have very large eyes that improve their night vision. Tarsiers’ eyes are bigger than their brains. Unfortunately for them (and other nocturnal prey), various predators have also developed night vision, most famously owls.

Human Eyes

When our ancestors, the primates, first appeared about 55 mya they were largely nocturnal omnivores, feeding on insects and small vertebrates. As predators, their eyes were forward facing and were large relative to their bodies to aid night-time vision. They may have been able to see ultraviolet rays.18 When the apes and monkeys (our ancestors) separated from the other primates, they shifted to a daytime hunting pattern and their eyes evolved a higher concentration of light receptors in the fovea, giving sharper daytime vision, and a wider range of colour perception.19

When human ancestors diverged from the other apes, the appearance of their eyes changed. Ape eye sockets are round and dark, similar in colour to the surrounding face, and their ‘whites’ are also dark. Human eyes, by contrast, stand out clearly from the face. Iris and pupil are surrounded by a white sclera that is highlighted by the almond shape of the human eye socket.

This change makes it very easy to follow a person’s gaze, and we are programmed from birth to do this. Human babies stare intently into their carer’s eyes during feeding (to an extent that can be both uplifting and unnerving), and follow a carer’s gaze, even when the carer’s head doesn’t move, from a very young age. They don’t follow head movements if the carer’s eyes are closed, implying the eyes are the critical feature. Apes do exactly the opposite. They follow head movements with or without a shift in gaze, but they don’t follow eye movements alone.20

Seeing where another individual is looking gives away important information about them: their intended direction of travel, or that they have seen something interesting, or an enemy approaching. In a society where there is an assumed level of trust, communicating by eye movement is highly efficient.

However, a highly visible gaze can be a liability in different circumstances. In a competitive social environment, like that of chimpanzees, an individual who sends signals through eye movements might give away valuable information, such as where the best food can be found. Visible eye movements are a disadvantage.

Michael Tomasello, the zoologist who conducted the gaze experiments, believes that visible eye movements are evidence of very distinctive human characteristics. Tomasello believes that as hominins (predecessors to modern man) diverged from the other apes over the past 2 million years, with a corresponding divergence of lifestyles, their eyes evolved to support a more cooperative way of living. The whites of human eyes, he believes, are evidence of long-standing close-range cooperation within social groups, possibly reaching back before the development of verbal language.21

Our eyes may be the windows to the soul, but they are also windows into our history. Their positioning, size and shape, capability and internal structure all tell us about how our ancestors lived, and who our earliest ancestors were.

Darwin called the eye ‘perfect and complex’. Eyes are not perfect; they bear various scars of the bumpy evolutionary process they resulted from. However, their great longevity demonstrates they must be perfectly good enough, or else they – and we – would have been superseded at some point by a better model through the relentless forces of natural selection.

TRANSFORMING

THE VISUAL TECHNOLOGIES THAT BEGAT HISTORY

3

STOLEN FROM THE GODS: FIRELIGHT

I am he that searched out the source of fire, by stealth borne-off inclosed in a fennel-rod, which has shown itself a teacher of every art to mortals.

Prometheus, from Aeschylus (c. 525–456 BCE), Prometheus Bound, trans. T.A. Buckley, 1897

In a story told by Kalahari Bushmen, at the beginning of time the People and the animals lived under the ground with the great god and creator, Kaang. The People and the animals could talk to each other and understand each other and they lived in peace. Although it was under the ground, it was always light and warm, and everyone had enough of everything. One day Kaang decided to build a world on the surface. First He created an enormous tree with many branches. Then He created all the features of the land: the mountains, valleys, forests, streams and lakes. Finally, He dug a deep hole from the base of the tree down to where the People and animals lived. He invited the first man and the first woman to come up and see the world He had made. The man and the woman came to the surface and saw the world and the Sun and the sky and they were very happy. All the other People and animals came up through the hole too and saw the new world.

Kaang said, ‘This is the world I have created for you all to live in. I have just one rule: do not make fire, as this will unleash an Evil force.’ He left and went to a secret place to watch them all.

At the end of the day the Sun set. It became dark and got cooler. Everyone was scared, especially the People. None of them had been in darkness before. The People could not see in the dark like the animals could, and they did not have fur to protect them from the cold. They became afraid of the animals and didn’t trust them. They forgot Kaang’s words and built a fire. Now the People could see each other’s faces in the light of the flames and they felt safe and warm.

But when they turned around the People saw all the animals running away. The animals were frightened by the fire, and they fled into the caves and mountains. The People were sorry, and called after the animals, but they didn’t reply. Kaang came out of His hiding place and said, ‘You disobeyed me and built a fire. It is too late to be sorry; the Evil force has already been released.’

Ever since that day the animals and the People have feared each other and lost their ability to talk and live together.1

The terror the People in the creation story felt on confronting darkness is a universal theme. In spite of its nightly inevitability, humans have always feared the dark. Language is peppered with allusions to our dread of lightlessness: dark deeds, black looks, dim view, gloomy, obscure, shady, shadowy, drear. The word darkness is used to describe ignorance, anger, sorrow and death,2 while the forces of darkness are wicked and evil.

Night is the natural conspirator of darkness, and by association the dwelling place of evil. In Greek mythology the gods Erebus (darkness) and Nyx (night) were husband and wife. They spawned the demigods Disease, Strife and Doom.3 On the other side of the world, the Maori goddess Hine-nui-te-pō represented night and death and ruled the underworld. In Germanic cultures, night hosted the witching hour when monsters such as vampires, ghosts, werewolves and demons emerged, and when black magic was at its most potent.

Darkness was also the bedfellow of death in ancient cultures from Mesopotamia to Egypt to China to Europe. Almost all cultures have stories of an underworld, bathed in eternal darkness, representing death and housing evil spirits.

Light, on the other hand, is almost always associated with the good, and often the divine. We see the light, are illuminated and enlightened. The first chapter of Genesis proclaims: ‘God said, Let there be light: and there was light. And God saw the light, that it was good: and God divided the light from the darkness.’ Many other cultures also associate the creation of the world with light.

Most of the world’s major religious icons are associated with light. Jesus said, ‘I am the Light’; the prophet Mohammed is noor, the light of Allah personified; Buddha is the enlightened one; light represents the almighty in Hinduism. Religious rituals and festivals also often centre around light: Buddhist festivals are all on full Moon days; Diwali is a festival of light; Hanukkah requires the daily lighting of a candle; Christians light a votive candle as an offering with prayer.

Satan, on the other hand, is the Prince of Darkness.