
- Publisher: Atlantic Books

- Category: Nonfiction

- Language: English

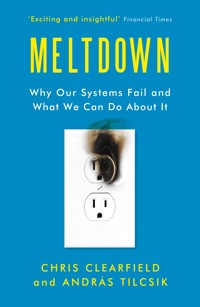

Financial Times' best business books of the year, 2018

'Endlessly fascinating, brimming with insight, and more fun than a book about failure has any right to be.' - Charles Duhigg, author of The Power of Habit

A groundbreaking exploration of how complexity causes failure in business and life - and how to prevent it.

An accidental overdose in a state-of-the-art hospital. The Post Office software that led to a multimillion-pound lawsuit. The mix-up at the 2017 Oscars Awards ceremony. An overcooked meal on holiday. At first glance, these events have little in common. But surprising new research shows that many modern failures share similar causes.

In Meltdown, world-leading experts in disaster prevention, Chris Clearfield and András Tilcsik, use real-life examples to reveal the errors in thinking, perception, and system design that lie behind both our everyday errors and disasters like the Fukushima nuclear accident.

But most crucially, Meltdown is about finding solutions. It reveals why ugly designs make us safer, how a five-minute exercise can prevent billion-dollar catastrophes, why teams with fewer experts are better at managing risk, and why diversity is one of our best safeguards against failure. The result is an eye-opening and empowering book - one that will change the way you see our complex world and your own place within it.


Page count: 442

Year of publication: 2018


MELTDOWN

About the Authors

Chris Clearfield is a former derivatives trader. He is a licensed commercial pilot and a graduate of Harvard University, where he studied physics and biology. Chris has written about complexity and failure for the Guardian, Forbes, and the Harvard Kennedy School Review.

András Tilcsik holds the Canada Research Chair in Strategy, Organizations, and Society at the University of Toronto’s Rotman School of Management. He has been recognized as one of the world’s top forty business professors under forty. The United Nations named his course on organizational failure as the best course on disaster risk management in a business school.

First published in the United States in 2018 by Penguin Press, an imprint of Penguin Random House Publishing LLC, New York.

Published in trade paperback in Great Britain in 2018 by Atlantic Books, an imprint of Atlantic Books Ltd.

Copyright © Christopher Clearfield and András Tilcsik, 2018

The moral rights of Christopher Clearfield and András Tilcsik to be identified as the authors of this work have been asserted by them in accordance with the Copyright, Designs and Patents Act of 1988.

All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior permission of both the copyright owner and the above publisher of this book.

Internal illustrations by Anton Ioukhnovets. Copyright © Christopher Clearfield and András Tilcsik, 2018

Text design by Nicole Laroche

10 9 8 7 6 5 4 3 2 1

A CIP catalogue record for this book is available from the British Library.

Trade paperback ISBN: 978 1 78649 224 1

E-book ISBN: 978 1 78649 225 8

Printed in Great Britain

Atlantic Books

An imprint of Atlantic Books Ltd

Ormond House

26–27 Boswell Street

London

WC1N 3JZ

www.atlantic-books.co.uk

To whistleblowers, strangers, and leaders who listen. We need more of you.

To Linnéa, Torvald, and Soren—CHRIS CLEARFIELD

To my parents and Marvin—ANDRÁS TILCSIK

CONTENTS

Prologue: A Day Like Any Other

PART ONE: FAILURE ALL AROUND US

1. The Danger Zone

2. Deep Waters, New Horizons

3. Hacking, Fraud, and All the News That’s Unfit to Print

PART TWO: CONQUERING COMPLEXITY

4. Out of the Danger Zone

5. Complex Systems, Simple Tools

6. Reading the Writing on the Wall

7. The Anatomy of Dissent

8. The Speed Bump Effect

9. Strangers in a Strange Land

10. Surprise!

Epilogue: The Golden Age of Meltdowns

Acknowledgments

Notes

Index

meltdown / ˈmɛlt·daʊn / noun

1: an accident in a nuclear reactor in which the fuel overheats and melts the reactor core; may be caused by earthquakes, tsunamis, reckless testing, mundane mistakes, or even just a stuck valve

2: collapse or breakdown of a system

Prologue

A DAY LIKE ANY OTHER

“It was the quotation marks around ‘empty’ that got me.”

I.

It was a warm Monday in late June, just before rush hour. Ann and David Wherley boarded the first car of Metro Train 112, bound for Washington, DC, on their way home from an orientation for hospital volunteers. A young woman gave up her seat near the front of the car, and the Wherleys sat together, inseparable as they had been since high school. David, sixty-two, had retired recently, and the couple was looking forward to their fortieth wedding anniversary and a trip to Europe.

David had been a decorated fighter pilot and Air Force officer. In fact, during the 9/11 attacks, he was the general who scrambled fighter jets over Washington and ordered pilots to use their discretion to shoot down any passenger plane that threatened the city. But even as a commanding general, he refused to be chauffeured around. He loved taking the Metro.

At 4:58 p.m., a screech interrupted the rhythmic click-clack of the wheels as the driver slammed on the emergency brake. Then came a cacophony of broken glass, bending metal, and screams as Train 112 slammed into something: a train inexplicably stopped on the tracks. The impact drove a thirteen-foot-thick wall of debris—a mass of crushed seats, ceiling panels, and metal posts—into Train 112 and killed David, Ann, and seven others.

Such a collision should have been impossible. The entire Washington Metro system, made up of over one hundred miles of track, was wired to detect and control trains. When trains got too close to each other, they would automatically slow down. But that day, as Train 112 rounded a curve, another train sat stopped on the tracks ahead—present in the real world, but somehow invisible to the track sensors. Train 112 automatically accelerated; after all, the sensors showed that the track was clear. By the time the driver saw the stopped train and hit the emergency brake, the collision was inevitable.

As rescue workers pulled injured riders from the wreckage, Metro engineers got to work. They needed to make sure that other passengers weren’t at risk. And to do that, they had to solve a mystery: How does a train twice the length of a football field just disappear?

II.

Alarming failures like the crash of Train 112 happen all the time. Take a look at this list of headlines, all from a single week:

CATASTROPHIC MINING DISASTER IN BRAZIL

ANOTHER DAY, ANOTHER HACK: CREDIT CARD-STEALING MALWARE HITS HOTEL CHAIN

HYUNDAI CARS ARE RECALLED OVER FAULTY BRAKE SWITCH

STORY OF FLINT WATER CRISIS, “FAILURE OF GOVERNMENT,” UNFOLDS IN WASHINGTON

“MASSIVE INTELLIGENCE FAILURE” LED TO THE PARIS TERROR ATTACKS

VANCOUVER SETTLES LAWSUIT WITH MAN WRONGFULLY IMPRISONED FOR NEARLY THREE DECADES

EBOLA RESPONSE: SCIENTISTS BLAST “DANGEROUSLY FRAGILE GLOBAL SYSTEM”

INQUEST INTO MURDER OF SEVEN-YEAR-OLD HAS BECOME SAGA OF THE SYSTEM’S FAILURE TO PROTECT HER

FIRES TO CLEAR LAND SPARK VAST WILDFIRES AND CAUSE ECOLOGICAL DISASTER IN INDONESIA

FDA INVESTIGATES E. COLI OUTBREAK AT CHIPOTLE RESTAURANTS IN WASHINGTON AND OREGON

It might sound like an exceptionally bad week, but there was nothing special about it. Hardly a week goes by without a handful of meltdowns. One week it’s an industrial accident, another it’s a bankruptcy, and another it’s an awful medical error. Even small issues can wreak great havoc. In recent years, for example, several airlines have grounded their entire fleets of planes because of glitches in their technology systems, stranding passengers for days. These problems may make us angry, but they don’t surprise us anymore. To be alive in the twenty-first century is to rely on countless complex systems that profoundly affect our lives—from the electrical grid and water treatment plants to transportation systems and communication networks to healthcare and the law. But sometimes our systems fail us.

These failures—and even large-scale meltdowns like BP’s oil spill in the Gulf of Mexico, the Fukushima nuclear disaster, and the global financial crisis—seem to stem from very different problems. But their underlying causes turn out to be surprisingly similar. These events have a shared DNA, one that researchers are just beginning to understand. That shared DNA means that failures in one industry can provide lessons for people in other fields: dentists can learn from pilots, and marketing teams from SWAT teams. Understanding the deep causes of failure in high-stakes, exotic domains like deepwater drilling and high-altitude mountaineering can teach us lessons about failure in our more ordinary systems, too. It turns out that everyday meltdowns—failed projects, bad hiring decisions, and even disastrous dinner parties—have a lot in common with oil spills and mountaineering accidents. Fortunately, over the past few decades, researchers around the world have found solutions that can transform how we make decisions, build our teams, design our systems, and prevent the kinds of meltdowns that have become all too common.

This book has two parts. The first explores why our systems fail. It reveals that the same reasons lie behind what appear to be very different events: a social media disaster at Starbucks, the Three Mile Island nuclear accident, a meltdown on Wall Street, and a strange scandal in small-town post offices in the United Kingdom. Part One also explores the paradox of progress: as our systems have become more capable, they have also become more complex and less forgiving, creating an environment where small mistakes can turn into massive failures. Systems that were once innocuous can now accidentally kill people, bankrupt companies, and jail the innocent. And Part One shows that the changes that made our systems vulnerable to accidental failures also provide fertile ground for intentional wrongdoing, like hacking and fraud.

The second part—the bulk of the book—looks at solutions that we can all use. It shows how people can learn from small errors to find out where bigger threats are brewing, how a receptionist saved a life by speaking up to her boss, and how a training program that pilots initially dismissed as “charm school” became one of the reasons flying is safer than ever. It examines why diversity helps us avoid big mistakes and what Everest climbers and Boeing engineers can teach us about the power of simplicity. We’ll learn how film crews and ER teams manage surprises—and how their approach could have saved the mismanaged Facebook IPO and Target’s failed Canadian expansion. And we’ll revisit the puzzle of the disappearing Metro train and see how close engineers were to averting that tragedy.

We came together to write this book from two different paths. Chris started his career as a derivatives trader. During the 2007–2008 financial crisis, he watched from his trading desk as Lehman Brothers collapsed and stock markets around the world unraveled. Around the same time, he began to train as a pilot and developed a very personal interest in avoiding catastrophic mistakes. András comes from the world of research and studies why organizations struggle with complexity. A few years ago, he created a course called Catastrophic Failure in Organizations, in which managers from all sorts of backgrounds study headline-grabbing failures and share their own experiences with everyday meltdowns.

Our source material for the book comes from accident reports, academic studies, and interviews with a broad swath of people, from CEOs to first-time homebuyers. The ideas that emerged explain all sorts of failures and provide practical insights that anyone can use. In the age of meltdowns, these insights will be essential to making good decisions at work and in our personal lives, running successful organizations, and tackling some of our greatest global challenges.

III.

One of the first people we interviewed for this book was Ben Berman, a NASA researcher, airline captain, and former accident investigator who also has an economics degree from Harvard. In many ways, Berman explained, aviation is an ideal laboratory in which to understand how small changes can prevent big meltdowns.

Though the likelihood of a failure on an individual flight is vanishingly small, there are more than one hundred thousand commercial flights per day. And there are lots of noncatastrophic failures, occasions when error traps—things like checklists and warning systems—catch mistakes before they spiral out of control.

But still, accidents happen. When they do, there are rich sources of data about what went wrong. Cockpit voice recorders and black boxes provide records of the crew’s actions and information about what was going on with the airplane itself, often all the way to the point of impact. These records are critical to investigators like Berman—people who dig through the human tragedy of crash sites to prevent future accidents.

On a beautiful May afternoon in 1996, Berman was in New York City with his family when his pager went off. Ben was on the National Transportation Safety Board’s “Go Team,” a group of investigators dispatched in case of a major accident. He soon learned the grim details: ValuJet Flight 592, carrying more than one hundred passengers, had disappeared from radar ten minutes after takeoff from Miami and crashed into the Florida Everglades swamp. A fire had broken out on board—that much was clear from the pilots’ radio calls to air traffic control—but what had caused it was a mystery.

When Berman arrived at the accident site the next day, the smell of jet fuel still lingered in the air. Debris was scattered over the dense marshes, but there was no sign of the fuselage or anything else that looked like an airplane. The fragmented wreckage was buried under waist-high water and layers of saw grass and swamp muck. Sneakers and sandals floated on the surface.

While search crews combed the black swamp water, Berman assembled his team at the Miami Airport and began to interview people who had handled the flight on the ground. One by one, ramp agents came to the ValuJet station manager’s office, where the investigators had set up for the day. Most interviews went something like this:

BERMAN: What did you notice about the plane?

RAMP AGENT: Nothing special, really . . .

BERMAN: Anything unusual when you were servicing the plane? Or when you were helping with the pushback? Or any other time?

RAMP AGENT: No, everything was normal.

BERMAN: Did anything at all draw your attention?

RAMP AGENT: No, really, there was nothing at all.

No one had seen anything.

Then, while sipping coffee between interviews, Berman noticed something interesting in a stack of papers on the station manager’s desk. The bottom of a sheet stuck out from the pile, with a signature on it. It was Candalyn Kubeck’s; she was the flight’s captain. Berman pulled the stack from the tray and leafed through the sheets. They were nothing special, just the standard flight papers for ValuJet 592.

But one sheet caught his attention:

It was a shipping ticket from SabreTech, an airline maintenance contractor, listing ValuJet “COMAT”—company-owned materials—that were on the plane. Berman was intrigued. There had been a fire on the plane, and here was a document saying there had been oxy canisters on board. And there was something else: “It was the quotation marks around ‘empty’ that got me,” Berman told us.

The investigators drove over to SabreTech’s office at the airport and found the clerk who had signed the shipping ticket. They learned that the items described on the ticket as oxy canisters were actually chemical oxygen generators, the devices that produce oxygen for the masks that drop from overhead compartments if a plane loses cabin pressure.

“So, were these empty?” Berman asked.

“They were out of service—they were unserviceable, expired.”

This was a big red flag. Chemical oxygen generators create tremendous heat when activated. And, under the wrong conditions, the otherwise lifesaving oxygen can stoke an inferno. If the boxes contained expired oxygen generators—ones that reached the end of their approved lives—rather than truly empty canisters, a powerful time bomb might have been loaded onto the plane. How could this happen? How did this deadly cargo find its way onto a passenger jet?

The investigation revealed a morass of mistakes, coincidences, and everyday confusions. ValuJet had bought three airplanes and hired SabreTech to refurbish them in a hangar at Miami Airport. Many of the oxygen generators on these planes had expired and needed to be replaced. ValuJet told SabreTech that if a generator had not been expended (that is, if it was still capable of generating oxygen), it was necessary to install a safety cap on it.

But there was confusion over the distinction between canisters that were expired and canisters that were not expended. Many canisters were expired but not expended. Others were expired and expended. Still others were expended but unexpired. And there were also replacement canisters, which were unexpended and unexpired. “If this seems confusing, do not waste your time trying to figure it out—the SabreTech mechanics did not, nor should they have been expected to,” wrote the journalist and pilot William Langewiesche in the Atlantic:

Yes, a mechanic might have found his way past the ValuJet work card and into the huge MD-80 maintenance manual, to chapter 35-22-01, within which line “h” would have instructed him to “store or dispose of oxygen generator.” By diligently pursuing his options, the mechanic could have found his way to a different part of the manual and learned that “all serviceable and unserviceable (unexpended) oxygen generators (canisters) are to be stored in an area that ensures that each unit is not exposed to high temperatures or possible damage.” By pondering the implications of the parentheses he might have deduced that the “unexpended” canisters were also “unserviceable” canisters and that because he had no shipping cap, he should perhaps take such canisters to a safe area and “initiate” them, according to the procedures described in section 2.D.

And this just went on: more details, more distinctions, more terms, more warnings, more engineer-speak.

The safety caps weren’t installed, and the generators ended up in cardboard boxes. After a few weeks, they were taken over to SabreTech’s shipping and receiving department. They sat there until a shipping clerk was told to clean up the place. It made sense to him to ship the boxes to ValuJet’s headquarters in Atlanta.

The canisters had green tags on them. Technically, a green tag meant “repairable,” but it’s unclear what the mechanics meant by it. The clerk thought the tag meant “unserviceable” or “out of service.” He concluded that the canisters were empty. Another clerk filled out a shipping ticket and put quotation marks around “empty” and “5 boxes.” It was just his habit to put words between quotation marks.

The boxes traveled through the system, step by step, from the mechanics to the clerks, from the ramp agents to the cargo hold. The flight crew didn’t spot the problem, and Captain Kubeck signed the flight papers. “As a result, the passengers’ last line of defense folded,” wrote Langewiesche. “They were unlucky, and the system killed them.”

THE INVESTIGATIONS into Washington Metro Train 112 and ValuJet 592 revealed that these accidents were rooted in the same cause: the increasing complexity of our systems. When Train 112 crashed, Jasmine Garsd, a producer at National Public Radio, happened to be riding a few cars back from the impact. “The train collision was like a very fast movie coming to a screeching halt,” she recalled. “I think in moments like these you come to realize two things: how tiny and vulnerable we are in this world of massive machines we’ve built, and how ignorant we are of that vulnerability.”

But there is hope. In the past few decades, our understanding of complexity, organizational behavior, and cognitive psychology has given us a window into how small mistakes blossom into massive failures. Not only do we understand how these sorts of accidents happen, but we also understand how small steps can prevent them. A handful of companies, researchers, and teams around the world are leading a revolution to find solutions that prevent meltdowns—and don’t require advanced technologies or million-dollar budgets.

In the spring of 2016, we arranged for Ben Berman to speak to a room full of people interested in the risk management lessons of aviation. It was an incredibly diverse group: HR professionals and civil servants, entrepreneurs and doctors, nonprofit managers and lawyers, and even someone from the fashion industry. But Berman’s lessons cut across disciplines. “System failures,” he told the group, “are incredibly costly and easy to underestimate—and it’s very likely that you’ll face something like this in your career or your life.” He paused and looked out at the audience. “The good news, I think, is that you can make a real difference.”

Part One

FAILURE ALL AROUND US

Chapter One

THE DANGER ZONE

“Oh this will be fun.”

I.

The Ventana Nuclear Power Plant lies in the foothills of the majestic San Gabriel Mountains, just forty miles east of Los Angeles. One day in the late 1970s, a tremor rattled through the plant. As alarms rang and warning lights flashed, panic broke out in the control room. On a panel crowded with gauges, an indicator showed that coolant water in the reactor core had reached a dangerously high level. The control room crew, employees of California Gas and Electric, opened relief valves to get rid of the excess water. But in reality, the water level wasn’t high. In fact, it was so low that the reactor core was inches away from being exposed. A supervisor finally realized that the water level indicator was wrong—all because of a stuck needle. The crew scrambled to close the valves to prevent a meltdown of the reactor core. For several terrifying minutes, the plant was on the brink of nuclear disaster.

“I may be wrong, but I would say you’re probably lucky to be alive,” a nuclear expert told a couple of journalists who happened to be at the plant during the accident. “For that matter, I think we might say the same for the rest of Southern California.”

Fortunately, this incident never actually occurred. It’s from the plot of The China Syndrome, a 1979 thriller starring Jack Lemmon, Jane Fonda, and Michael Douglas. It was sheer fiction, at least according to nuclear industry executives, who lambasted the film even before it was released. They said the story had no scientific credibility; one executive called it a “character assassination of an entire industry.”

Michael Douglas, who both coproduced and starred in the film, disagreed: “I have a premonition that a lot of what’s in this picture will be reenacted in life in the next two or three years.”

It didn’t take that long. Twelve days after The China Syndrome opened in theaters, Tom Kauffman, a handsome twenty-six-year-old with long red hair, arrived for work at the Three Mile Island Nuclear Generating Station, a concrete fortress built on a sandbar in the middle of Pennsylvania’s Susquehanna River. It was 6:30 on a Wednesday morning, and Kauffman could tell that something was wrong. The vapor plumes coming from the giant cooling towers were much smaller than normal. And as he was receiving his security pat down, he could hear an emergency alarm. “Oh, they’re having some problem down there in Unit Two,” the guard told him.

Inside, the control room was crowded with operators, and hundreds of lights were flashing on the mammoth console. Radiation alarms went off all over the facility. Shortly before 7:00 a.m., a supervisor declared a site emergency. This meant there was a possibility of an “uncontrolled release of radioactivity” in the plant. By 8:00 a.m., half of the nuclear fuel in one of the plant’s two reactors had melted, and by 10:30 a.m., radioactive gas had leaked into the control room.

It was the worst nuclear accident in American history. Engineers struggled to stabilize the overheated reactor for days, and some officials feared the worst. Scientists debated whether the hydrogen bubble that had formed in the reactor could explode, and it was clear that radiation would kill anyone who got close enough to manually open a valve to remove the buildup of volatile gas.

After a tense meeting in the White House Situation Room, President Carter’s science aide took aside Victor Gilinsky, the commissioner of the Nuclear Regulatory Commission, and quietly suggested that they send in terminal cancer patients to release the valve. Gilinsky looked him over and could tell he wasn’t joking.

The communities around the plant turned into ghost towns as 140,000 people fled the area. Five days into the crisis, President Carter and the First Lady traveled to the site to quell the panic. Wearing bright yellow booties over their shoes to protect themselves from traces of radiation on the ground, they toured the plant and reassured the nation. The same day, engineers figured out that the hydrogen bubble posed no immediate threat. And once coolant was restored, the core temperature began to fall, though it took a whole month before the hottest parts of the core started to cool. Eventually, all public advisories were lifted. But many came to think of Three Mile Island as a place where our worst fears almost came to pass.

The Three Mile Island meltdown began as a simple plumbing problem. A work crew was performing routine maintenance on the nonnuclear part of the plant. For reasons that we still don’t totally understand, the set of pumps that normally sent water to the steam generator shut down. One theory is that, during the maintenance, moisture accidentally got into the air system that controlled the plant’s instruments and regulated the pumps. Without water flowing to the steam generator, it couldn’t remove heat from the reactor core, so the temperature increased and pressure built up in the reactor. In response, a small pressure-relief valve automatically opened, as designed. But then came another glitch. When pressure returned to normal, the relief valve didn’t close. It stuck open. The water that was supposed to cover and cool the core started to escape.

An indicator light in the control room led operators to believe that the valve was closed. But in reality, the light showed only that the valve had been told to close, not that it had closed. And there were no instruments directly showing the water level in the core, so operators relied on a different measurement: the water level in a part of the system called the pressurizer. But as water escaped through the stuck-open valve, water in the pressurizer appeared to be rising even as it was falling in the core. So the operators assumed that there was too much water, when in fact they had the opposite problem. When an emergency cooling system turned on automatically and forced water into the core, they all but shut it off. The core began to melt.

The operators knew something was wrong, but they didn’t know what, and it took them hours to figure out that water was being lost. The avalanche of alarms was unnerving. With all the sirens, klaxon horns, and flashing lights, it was hard to tell trivial warnings from vital alarms. Communication became even more difficult when high radiation readings forced everyone in the control room to wear respirators.

And it was unclear just how hot the core had become. Some temperature readings were high. Others were low. For a while, the computer monitoring the reactor temperature tapped out nothing but lines like these:

The situation was nearly as bad at the Nuclear Regulatory Commission. “It was difficult to process the uncertain and often contradictory information,” Gilinsky recalled. “I got lots of useless advice from all sides. No one seemed to have a reliable grip on what was going on, or what to do.”

It was a puzzling, unprecedented crisis. And it changed everything we know about failure in modern systems.

II.

Four months after the Three Mile Island accident, a mail truck climbed a winding mountain road up to a secluded cabin in Hillsdale, New York, in the foothills of the Berkshires. It was a hot August day, and it took the driver a few tries to find the place. When the truck stopped, a lean, curly-haired man in his mid-fifties emerged from the cabin and eagerly signed for a package—a large box filled with books and articles about industrial accidents.

The man was Charles Perrow, or Chick, as his friends called him. Perrow was an unlikely person to revolutionize the science of catastrophic failure. He wasn’t an engineer but a sociology professor. He had done no previous research on accidents, nuclear power, or safety. He was an expert on organizations rather than catastrophes. His most recent article was titled “Insurgency of the Powerless: Farm Worker Movements, 1946–1972.” When Three Mile Island happened, he was studying the organization of textile mills in nineteenth-century New England.

Sociologists rarely have a big impact on life-and-death matters like nuclear safety. A New Yorker cartoonist once lampooned the discipline with the image of a man reading the newspaper headline “Sociologists on Strike!!! Nation in Peril!!” But just five years after that box was delivered to Perrow’s cabin, his book Normal Accidents—a study of catastrophes in high-risk industries—became a sort of academic cult classic. Experts in a range of fields—from nuclear engineers to software experts and medical researchers—read and debated the book. Perrow accepted a professorship at Yale, and by the time his second book on catastrophes was published, the American Prospect magazine declared that his work had “achieved iconic status.” One endorsement for the book called him “the undisputed ‘master of disaster.’”

Perrow first got interested in meltdowns when the presidential commission on the Three Mile Island accident asked him to study the event. The commission was initially planning to hear only from engineers and lawyers, but its sole sociologist member suggested they also consult Perrow. She had a hunch that there was something to be learned from a social scientist, someone who had thought about how organizations actually operate in the real world.

When Perrow received the transcripts of the commission’s hearings, he read all the materials in an afternoon. That night he tossed and turned for hours, and when he finally got to sleep, he had his worst nightmares since his army days in World War II. “The testimony of the operators made a profound impression on me,” he recalled years later. “Here was an enormously, catastrophically risky technology, and they had no idea what was going on for some hours. . . . I suddenly realized that I was in the thick of it, in the very middle of it, because this was an organizational problem more than anything else.”

He had three weeks to write a ten-page report, but—with the help of graduate students who sent boxes of materials to his cabin—he wound up cranking out a forty-page paper by the deadline. He then put together what he would later describe as “a toxic and corrosive group of graduate research assistants who argued with me and each other.” It was, Perrow recalled, “the gloomiest group on campus, known for our gallows humor. At our Monday meetings, one of us would say, ‘It was a great weekend for the project,’ and rattle off the latest disasters.”

This group reflected Perrow’s personality. One scholar described him as a curmudgeon but called his research a “beacon.” Students said he was a demanding teacher, but they loved his classes because they learned so much. Among academics, he had a reputation for giving unusually intense but constructive criticism. “Chick’s critical appraisals of my work have been the yardstick by which I’ve judged my success,” wrote one author. “He has never failed to produce pages and pages of sometimes scathing remarks, usually well reasoned, and always ending with something like ‘Love, Chick’ or ‘My Usual Sensitive Self.’”

III.

The more Perrow learned about Three Mile Island, the more fascinated he became. It was a major accident, but its causes were trivial: not a massive earthquake or a big engineering mistake, but a combination of small failures—a plumbing problem, a stuck valve, and an ambiguous indicator light.

And it was an accident that happened incredibly quickly. Consider the initial plumbing glitch, the resulting failure of the pumps to send water to the steam generator, the increasing pressure in the reactor, the opening of the pressure relief valve and its failure to close, and then the misleading indication of the valve’s position—all this happened in just thirteen seconds. In less than ten minutes, the damage to the core was already done.

To Perrow, it was clear that blaming the operators was a cheap shot. The official investigation portrayed the plant staff as the main culprits, but Perrow realized that their mistakes were mistakes only in hindsight—“retrospective errors,” he called them.

Take, for example, the greatest blunder—the assumption that the problem was too much water rather than too little. When the operators made this assumption, the readings available to them didn’t show that the coolant level was too low. To the best of their knowledge, there was no danger of uncovering the core, so they focused on another serious problem: the risk of overfilling the system. Though there were indications that might have helped reveal the true nature of the problem, the operators thought these were due to instrument malfunction. And that was a reasonable assumption; instruments were malfunctioning. Before investigators figured out the bizarre synergy of small failures that had occurred at the plant, the operators’ decisions seemed sensible.

That was a scary conclusion. Here was one of the worst nuclear accidents in history, but it couldn’t be blamed on obvious human errors or a big external shock. It somehow just emerged from small mishaps that came together in a weird way.

In Perrow’s view, the accident was not a freak occurrence, but a fundamental feature of the nuclear power plant as a system. The failure was driven by the connections between different parts, rather than the parts themselves. The moisture that got into the air system wouldn’t have been a problem on its own. But through its connection to pumps and the steam generator, a host of valves, and the reactor, it had a big impact.

For years, Perrow and his team of students trudged through the details of hundreds of accidents, from airplane crashes to chemical plant explosions. And the same pattern showed up over and over again. Different parts of a system unexpectedly interacted with one another, small failures combined in unanticipated ways, and people didn’t understand what was happening.

Perrow’s theory was that two factors make systems susceptible to these kinds of failures. If we understand those factors, we can figure out which systems are most vulnerable.

The first factor has to do with how the different parts of the system interact with one another. Some systems are linear: they are like an assembly line in a car factory where things proceed through an easily predictable sequence. Each car goes from the first station to the second to the third and so on, with different parts installed at each step. And if a station breaks down, it will be immediately obvious which one failed. It’s also clear what the consequences will be: cars won’t reach the next station and might pile up at the previous one. In systems like these, the different parts interact in mostly visible and predictable ways.

Other systems, like nuclear power plants, are more complex: their parts are more likely to interact in hidden and unexpected ways. Complex systems are more like an elaborate web than an assembly line. Many of their parts are intricately linked and can easily affect one another. Even seemingly unrelated parts might be connected indirectly, and some subsystems are linked to many parts of the system. So when something goes wrong, problems pop up everywhere, and it’s hard to figure out what’s going on.

To make matters worse, much of what goes on in complex systems is invisible to the naked eye. Imagine walking on a hiking trail that winds down the edge of a cliff. You are only steps away from a precipice, but your senses keep you safe. Your head and eyes are constantly focusing to make sure you’re not making missteps or veering too close to the edge.

Now, imagine that you had to navigate the same path by looking through binoculars. You can no longer take in the whole scene. Instead, you have to move your attention around through a narrow and indirect focus. You look down to where your left foot might land. Then you swing the binoculars to try to gauge how far you are from the edge. Then you prepare to move your right foot, and then you have to focus back on the path again. And now imagine that you’re running down the path while relying only on these sporadic and indirect pictures. That’s what we are doing when we’re trying to manage a complex system.

Perrow was quick to note that the difference between complex and linear systems isn’t sophistication. An automotive assembly plant, for example, is anything but unsophisticated, and yet its parts interact in mostly linear and transparent ways. Or take dams. They are marvels of engineering but weren’t complex by Perrow’s definition.

In a complex system, we can’t go in to take a look at what’s happening in the belly of the beast. We need to rely on indirect indicators to assess most situations. In a nuclear power plant, for example, we can’t just send someone to see what’s happening in the core. We need to piece together a full picture from small slivers—pressure indications, water flow measurements, and the like. We see some things but not everything. So our diagnoses can easily turn out to be wrong.

And when we have complex interactions, small changes can have huge effects. At Three Mile Island, a cupful of nonradioactive water caused the loss of a thousand liters of radioactive coolant. It’s the butterfly effect from chaos theory—the idea that a butterfly flapping its wings in Brazil might create the conditions for a tornado in Texas. The pioneers of chaos theory understood that our models and measurements would never be good enough to predict the effects of the flapping wings. Perrow argued something similar: we simply can’t understand enough about complex systems to predict all the possible consequences of even a small failure.

IV.

The second element of Perrow’s theory has to do with how much slack there is in a system. He borrowed a term from engineering: tight coupling. When a system is tightly coupled, there is little slack or buffer among its parts. The failure of one part can easily affect the others. Loose coupling means the opposite: there is a lot of slack among parts, so when one fails, the rest of the system can usually survive.

In tightly coupled systems, it’s not enough to get things mostly right. The quantity of inputs must be precise, and they need to be combined in a particular order and time frame. Redoing a task if it’s not done correctly the first time isn’t usually an option. Substitutes or alternative methods rarely work—there is only one way to skin the cat. Everything happens quickly, and we can’t just turn off the system while we deal with a problem.

Take nuclear power plants. Controlling a chain reaction requires a specific set of conditions, and even small deviations from the correct process (like a stuck-open valve) can cause big problems. And when problems arise, we can’t just pause or unplug the system. The chain reaction proceeds at its own pace, and even if we stop it, there is still a great deal of leftover heat. Timing also matters. If the reactor is overheating, it doesn’t help to add more coolant a few hours later—it needs to be done right away. And problems spread quickly as the core melts and radiation escapes.

An aircraft manufacturing plant is more loosely coupled. The tail and the fuselage, for example, are built separately, and if there are problems in one, they can be fixed before attaching the two parts. And it doesn’t matter which part we build first. If we run into any problems, we can just put things on hold and store incomplete products, like partially finished tails, and then return to them later. And if we turn off all the machines, the system will stop.

No system fits perfectly into Perrow’s categories, but some systems are more complex and tightly coupled than others. It’s a matter of degrees, and we can map systems based on these dimensions. Perrow’s initial sketch looked something like this:

Toward the top of the chart, dams and nuclear plants are both tightly coupled, but dams are (at least traditionally) much less complex. They comprise fewer parts and provide fewer opportunities for unforeseen and invisible interactions.

Near the bottom of the chart, post offices and universities are loosely coupled—things need not be in a precise order, and there is a lot of time to fix problems. “In the post office, mail can pile up for a while in a buffer stack without undue alarm,” Perrow wrote, because “people tolerate the Christmas rush just as students tolerate lines at fall registration.”

But a post office is less complex than a university. It’s a fairly straightforward system. A university, in contrast, is an intricate bureaucracy filled with a bewildering array of units, subunits, functions, rules, and people with different agendas—from researchers and teachers to students and administrators—often linked in messy, unpredictable ways. With decades of experience in this system, Perrow wrote vividly about how an ordinary academic incident—the decision not to grant tenure to an assistant professor who is popular with students and community members but has published too little—might create tricky and unexpected problems for a dean. But thanks to loose coupling, there is a lot of time and flexibility to deal with such issues, and the incident won’t damage the rest of the system. A scandal in the sociology department doesn’t usually affect the medical school.

The danger zone in Perrow’s chart is the upper-right quadrant. It’s the combination of complexity and tight coupling that causes meltdowns. Small errors are inevitable in complex systems, and once things begin to go south, such systems produce baffling symptoms. No matter how hard we try, we struggle to make a diagnosis and might even make things worse by solving the wrong problem. And if the system is also tightly coupled, we can’t stop the falling dominoes. Failures spread quickly and uncontrollably.

Perrow called these meltdowns normal accidents. “A normal accident,” he wrote, “is where everyone tries very hard to play safe, but unexpected interaction of two or more failures (because of interactive complexity) causes a cascade of failures (because of tight coupling).” Such accidents are normal not in the sense of being frequent but in the sense of being natural and inevitable. “It is normal for us to die, but we only do it once,” he quipped.

Normal accidents, Perrow admits, are extremely rare. Most disasters are preventable, and their immediate causes aren’t complexity and tight coupling but avoidable mistakes—management failures, ignored warning signs, miscommunication, poor training, and reckless risk-taking. But Perrow’s framework also helps us understand these accidents: complexity and tight coupling contribute to preventable meltdowns, too. If a system is complex, our understanding of how it works and what’s happening in it is less likely to be correct, and our mistakes are more likely to become combined with other errors in perplexing ways. And tight coupling makes the resulting failures harder to contain.

Imagine a maintenance worker who accidentally causes a small glitch—by closing the wrong valve, for example. Many systems absorb such trivial failures every day, but Three Mile Island shows just how much damage small failures can do under the right conditions. Complexity and coupling create a danger zone, where small mistakes turn into meltdowns.

And meltdowns don’t just include large-scale engineering disasters. Complex and coupled systems—and system failures—are all around us, even in the most unlikely places.

V.

In the winter of 2012, Starbucks launched a social media campaign to get coffee lovers in the holiday spirit. It asked its customers to post festive messages on Twitter using the hashtag #SpreadTheCheer. The company also sponsored an ice rink at the Natural History Museum in London, which featured a giant screen to display all the tweets that included the hashtag.

It was a smart marketing idea. Customers would generate free content for Starbucks and flood the internet with warm and fuzzy messages about the upcoming holidays and their favorite Starbucks drinks. The messages wouldn’t just appear online but also on a big screen visible to many ice skaters, coffee drinkers at the ice rink café, museumgoers, and passersby. And inappropriate messages would be weeded out by a moderation filter, so the holiday spirit—and its association with warm Starbucks drinks—would prevail.

It was a Saturday evening in mid-December, and everything at the ice rink was going well—for a while. Then, unbeknownst to Starbucks, the content filter broke, and messages like these began to appear on the giant screen:

I like buying coffee that tastes nice from a shop that pays tax. So I avoid @starbucks #spreadthecheer

Hey #Starbucks. PAY YOUR FUCKING TAX #spreadthecheer

If firms like Starbucks paid proper taxes, Museums wouldn’t have to prostitute themselves to advertisers #spreadthecheer

#spreadthecheer Tax Dodging MoFo’s

The messages were referring to a recent controversy that involved the use of legal tax-avoidance tactics by Starbucks.

Kate Talbot, a community organizer in her early twenties, took a photo of the screen with her phone and tweeted it with these words: “Oh dear, Starbucks have a screen showing their #spreadthecheer tweets at the National History Museum.” Soon enough Talbot’s own tweet showed up on the screen. So she sent another one: “Omg now they are showing my tweet! Someone PR should be on this . . . #spreadthecheer #Starbucks #payyourtaxes.”

News of the ongoing fiasco spread quickly over Twitter and encouraged even more people to get involved. “Turns out a Starbucks in London is displaying on a screen any tweet with the #spreadthecheer hashtag,” one man tweeted. “Oh this will be fun.”

The avalanche of tweets was unstoppable.

Will Starbucks #SpreadTheCheer this Christmas by stopping to exploit workers and starting to contribute some tax? #taxavoidance #livingwage

Dear @StarbucksUK, was it clever to have a screen at a museum showing all tweets to you? #spreadthecheer #payyourtaxes

Smash Starbucks! Vive la revolution, you have nothing to lose but your overpriced milky coffee drowned in syrup #spreadthecheer

Maybe starbucks should hire a minimum-wage, no benefits or paid lunch breaks “barista” to monitor and vet tweets first for #spreadthecheer.

Talk about a PR fail. #spreadthecheer

Starbucks found itself in Chick Perrow’s world.

Social media is a complex system. It’s made up of countless people with many different views and motives. It’s hard to know who they are and what they will make of a particular campaign. And it’s hard to predict how they might react to a mistake like the glitch of Starbucks’ moderation filter. Kate Talbot responded by taking a photo of the screen and sharing it. Others then reacted to the news that any tweet using the right hashtag would be displayed at a prominent location. And then traditional media outlets reacted to the blizzard of tweets. They published reports of how the PR stunt backfired, so the botched campaign became mainstream news and reached even more people. These were unintended interactions between the glitch in the content filter, Talbot’s photo, other Twitter users’ reactions, and the resulting media coverage.

When the content filter broke, it increased tight coupling because the screen now pulled in any tweet automatically. And the news that Starbucks had a PR disaster in the making spread rapidly on Twitter—a tightly coupled system by design. At first, just a few people shared the information, then some of their followers shared it, too, and then the followers of those followers, and so on. Even after the moderation filter was fixed, the slew of negative tweets continued. And there was nothing Starbucks could do to stop them.

A campaign for holiday cheer seems as far from a nuclear power plant as you can get, but Perrow’s ideas still apply. In fact, we can see complexity and coupling in all sorts of places, even at home. Take Thanksgiving dinner, something we don’t usually think of as a system. First, there’s the travel: the days before and after the holiday are some of the busiest travel days in the year. In the United States, Thanksgiving is always the fourth Thursday in November; in Perrow’s terms, that means there’s little slack—there’s only a one-day window for the holiday. The massive amount of travel also creates complex interactions: cars on the roads create gridlock, and the network structure of air travel means that bad weather at a major hub—like Chicago, New York, or Atlanta—can cause a ripple effect that strands travelers around the country.

Then there’s the dinner itself. Many houses have only one oven, so the classic roasted or baked Thanksgiving dishes—the turkey, casseroles, and pies—are linked. If a casserole or the turkey takes longer than expected, that delays the rest of the meal. And the dishes depend on one another. Stuffing often cooks inside the turkey, and gravy comes from the roasted bird’s juices. A simple meal like spaghetti with meat sauce doesn’t have these kinds of interconnections.

It’s also hard to tell what’s going on inside the system—that is, whether the turkey is done cooking or still has hours left to go. To solve this problem, some companies add a safety mechanism—a small plastic button stuck in the turkey that pops out when the bird is done cooking. But these buttons, like many safety systems, are unreliable. More experienced cooks use a meat thermometer as an indicator of what’s going on inside the turkey, but it’s still hard to pinpoint the amount of time needed.

The meal is also tightly coupled: for the most part, you can’t pause the cooking process and return to it later. Dishes keep cooking and the guests are on their way. Once a mistake has been made—once the turkey is overcooked or an ingredient missed—you can’t go back.

Sure enough, as Perrow might predict, the whole meal can easily spiral out of control. A few years ago, the gourmet food magazine Bon Appétit asked its readers to share “their craziest Thanksgiving food disaster stories.” The response was overwhelming. Hundreds of people wrote in with all kinds of culinary failures, from flaming turkeys and bland batches of gravy to stuffing that tasted like soggy bread crumbs.

False diagnoses are a common problem. People worry that their turkey will be undercooked when in fact it’s already as dry as a bone. Or they worry about burning the turkey only to find out it’s still raw on the inside, which means the stuffing inside the bird is also undercooked. Sometimes both problems happen at the same time: the breast meat is overdone, but the thighs are undercooked.

With the clock ticking, complexity often overwhelms cooks. They make a mistake without even realizing it’s a mistake until much later—when their guests sit down and taste the food. “Hundreds of you sent in stories about accidentally using the wrong ingredient in your pies, gravies, casseroles and more,” the magazine noted. “Our favorite iteration was a reader who accidentally used Vicks 44 [a potent cough syrup] instead of vanilla in her ice cream.”

To avoid Thanksgiving disasters, some experts recommend simplifying the part of the system that’s most clearly in the danger zone: the turkey. “If you break down the turkey into smaller pieces and cook them separately, you’ll have a higher margin for success,” says chef Jason Quinn. “It’s easier to cook white meat perfectly than trying to cook white meat and dark meat perfectly at the same time.” The stuffing, too, can be made separately.

As a result, the turkey becomes a less complex system. The various parts are less connected, and it’s easier to see what’s going on with each of them. Tight coupling is also reduced. You can roast some parts—the drumsticks and wings, for example—earlier. Then there is more space in the oven later on, and it’s easier to monitor the breast meat to make sure that it’s roasted to perfection. If unexpected issues arise, you can just focus on the problem at hand—without having to worry about a whole complex system of white meats, dark meats, stuffing, and all.

This approach—reducing complexity and adding slack—helps us escape from the danger zone. It can be an effective solution, one we’ll explore later in this book. But in recent decades, the world has actually been moving in the opposite direction: many systems that were once far from the danger zone are now in the middle of it.

Chapter Two

DEEP WATERS, NEW HORIZONS

“People have been jailed when it is obvious a complex computer system is at fault.”

I.

When Erika Christakis hit send on an email to the students in her residential college, she didn’t expect that it would spur a controversy across the Yale University campus, garner national attention, and drive aggrieved students to confront her and her husband, Professor Nicholas Christakis. She and Nicholas were co-masters at Yale’s Silliman College, a residential community that housed more than four hundred students and included a library, a movie theater, a recording studio, and a dining hall.

In the days before Halloween in 2015, Yale’s Intercultural Affairs Committee sent an email urging students to avoid racially and culturally insensitive Halloween costumes. The email came amid a broader conversation about race and privilege in the United States, spurred by the shooting of black men by police, the mass shooting of nine black worshipers in a South Carolina church by a white supremacist, and conversations and protests led by Black Lives Matter activists.