
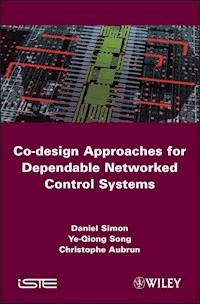

- Publisher: John Wiley & Sons

- Category: Science and new technologies

- Language: English

Networked Control Systems (NCS) is a growing field of application and calls for the development of integrated approaches requiring multidisciplinary skills in control, real-time computing and communication protocols. This book describes co-design approaches and establishes the links between the QoC (Quality of Control) and the QoS (Quality of Service) of the network and computing resources. The methods and tools described in this book take into account, at design level, various parameters and properties that must be satisfied by systems controlled through a network. Among the important properties examined are the QoC, the dependability of the system, and the feasibility of the real-time scheduling of tasks and messages. Correct exploitation of these approaches allows for efficient design, diagnosis, and implementation of the NCS. This book will be of great interest to researchers and advanced students in automatic control, real-time computing, and networking domains, and to engineers tasked with the development of NCS, as well as those working in related network design and engineering fields.


Number of pages: 486

Year of publication: 2013


Contents

Foreword

Introduction and Problem Statement

I.1. Networked control systems and control design challenges

I.2. Control design: from continuous time to networked implementation

I.3. Timing parameter assignment

I.4. Control and task/message scheduling

I.5. Diagnosis and fault tolerance in NCS

I.6. Co-design approaches

I.7. Outline of the book

I.8. Bibliography

Chapter 1. Preliminary Notions and State of the Art

1.1. Overview

1.2. Preliminary notions on real-time scheduling

1.3. Control aware computing

1.4. Feedback-scheduling basics

1.5. Fault diagnosis of NCS with network-induced effects

1.6. Summary

1.7. Bibliography

Chapter 2. Computing-aware Control

2.1. Overview

2.2. Robust control w.r.t. computing and networking-induced latencies

2.3. Weakly hard constraints

2.4. LPV adaptive variable sampling

2.5. Summary

2.6. Bibliography

Chapter 3. QoC-aware Dynamic Network QoS Adaptation

3.1. Overview

3.2. Dynamic CAN message priority allocation according to the control application needs

3.3. Bandwidth allocation control for switched Ethernet networks

3.4. Conclusion

3.5. Bibliography

Chapter 4. Plant-state-based Feedback Scheduling

4.1. Overview

4.2. Adaptive scheduling and varying sampling robust control

4.3. MPC-based integrated control and scheduling

4.4. A convex optimization approach to feedback scheduling

4.5. Control and real-time scheduling co-design via a LPV approach

4.6. Summary

4.7. Bibliography

Chapter 5. Overload Management Through Selective Data Dropping

5.1. Introduction

5.2. Scheduling under (m, k)-firm constraint

5.3. Stability analysis of a multidimensional system

5.4. Optimized control and scheduling co-design

5.5. Plant-state-triggered control and scheduling adaptation and optimization

5.6. Conclusions

5.7. Bibliography

Chapter 6. Fault Detection and Isolation, Fault Tolerant Control

6.1. Introduction

6.2. FDI and FTC

6.3. Networked-induced effects

6.4. Pragmatic solutions

6.5. Advanced techniques

6.6. Conclusion and perspectives

6.7. Bibliography

Chapter 7. Implementation: Control and Diagnosis for an Unmanned Aerial Vehicle

7.1. Introduction

7.2. The quadrotor model, control and diagnosis

7.3. Simulation of the network

7.4. Hardware in the loop architecture

7.5. Experiments and results

7.6. Summary

7.7. Bibliography

Glossary and Acronyms

List of Authors

Index

First published 2010 in Great Britain and the United States by ISTE Ltd and John Wiley & Sons, Inc.

Apart from any fair dealing for the purposes of research or private study, or criticism or review, as permitted under the Copyright, Designs and Patents Act 1988, this publication may only be reproduced, stored or transmitted, in any form or by any means, with the prior permission in writing of the publishers, or in the case of reprographic reproduction in accordance with the terms and licenses issued by the CLA. Enquiries concerning reproduction outside these terms should be sent to the publishers at the undermentioned address:

ISTE Ltd, 27-37 St George’s Road, London SW19 4EU, UK, www.iste.co.uk

John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030, USA, www.wiley.com

© ISTE Ltd 2010

The rights of Christophe Aubrun, Daniel Simon and Ye-Qiong Song to be identified as the authors of this work have been asserted by them in accordance with the Copyright, Designs and Patents Act 1988.

Library of Congress Cataloging-in-Publication Data

Co-design approaches for dependable networked control systems / edited by Christophe Aubrun, Daniel Simon, Ye-Qiong Song.

p. cm.

Includes bibliographical references and index.

ISBN 978-1-84821-176-6

1. Feedback control systems--Reliability. 2. Feedback control systems--Design and construction. 3. Sensor networks--Reliability. 4. Sensor networks--Design and construction. I. Aubrun, Christophe. II. Simon, Daniel, 1954- III. Song, Ye-Qiong.

TJ216.C62 2010

629.8′3--dc22

2009041851

British Library Cataloguing-in-Publication Data

A CIP record for this book is available from the British Library

ISBN 978-1-84821-176-6

Foreword

Modeling, analysis and control of networked control systems (NCS) have recently emerged as topics of significant interest to the control community. The defining feature of any NCS is that information (reference input, plant output, control input) is exchanged among control system components (sensors, controller, actuators) over a digital, band-limited serial communication channel that is usually shared with other feedback control loops. The insertion of a communication network in the feedback control loop makes the analysis and design of an NCS more challenging. Conventional control theory, with its many idealized assumptions such as synchronized control and non-delayed sensing and actuation, must be revisited so that communication limitations can be integrated into the control design framework.

Furthermore, the new trend is to implement fault diagnosis (FD) and fault tolerant control (FTC) systems that employ supervision functionalities (performance evaluation, fault diagnosis) and reconfiguration mechanisms through cooperative functions that are also distributed over a networked architecture. A critical issue, therefore, which must always be considered in the design of any networked process control system, is its robustness with respect to failure situations, including both system component failures and network failures. By network failure, we mean a total breakdown in the communication between the control system components as a result of, for example, some physical malfunction in the networking devices or severe overloading of the network resources that causes a network shutdown.

In this framework, the dependability of NCS has emerged as an important research field. Dependability groups together three properties that an NCS must satisfy: safety, reliability, and availability. The design of dependable NCSs therefore calls for a multidisciplinary approach, drawing on a deep knowledge of both fault tolerant control and computer science (mainly real-time scheduling and communication protocols).

The content of this book gives an overview of the main results obtained after three years of research work within the safe-NECS project funded by the French “Agence Nationale de la Recherche” (ANR). During these three years, five research groups cooperated intensively to propose a framework for the design of dependable networked control systems. In this context, the safe-NECS research took into consideration process control functions, FDI/FTC and their implementation over a network as an integrated system. In particular, the project aimed to develop, in a coordinated way, a “co-design” approach that integrates several kinds of parameters: the characteristics modeling the Quality of Control (QoC), the dependability properties required for a system and the parameters of real-time scheduling (tasks and messages). Issues such as network-induced delays, data losses and signal quantization, as well as sensor and actuator faults, are some of the most common problems that motivated the extensive research work carried out within the safe-NECS project.

The research work in the safe-NECS project aimed at enhancing the integration of control, real-time scheduling and networking. The safe-NECS project was clearly multidisciplinary, in that it brought together partners from the control and computer science communities. A major difficulty for this project came from the fact that the two communities manipulate objects and formalisms of very different natures. We consider that one of the major contributions of this project was to promote a synergistic approach, which is a necessary condition for achieving the co-design objectives demonstrated in this project.

The safe-NECS project focused on the following points, which are reported in this book:

– specification of a dependable system and performance evaluation;

– modeling the effect of a real-time distributed implementation (i.e. scheduling parameters and networking protocols) on Quality of Control (QoC) and dependability;

– an integrated control and scheduling co-design method realized by developing feedback scheduling algorithms, taking into account both QoC parameters and dependability constraints;

– fault tolerance and the on-line re-configuration of NECS with distributed diagnostic and decision-making mechanisms;

– testing and validation using a UAV-type quadrotor.

Dominique SAUTER

Introduction and Problem Statement

Networked control systems (NCS) are feedback control systems wherein the control loops are closed over a shared network. Control and feedback signals are transmitted among the system’s components as information flows through a network, as depicted in Figure I.1. A fully featured NCS is made up of four kinds of components to close the control loops: sensors to collect information on the controlled plant’s state, controllers to provide decisions and commands, actuators to apply the control signals and communication networks to enable communications between the NCS components.

Figure I.1. Typical NCS architecture

Compared with conventional point-to-point control systems, the advantages of NCS are lighter wiring, lower installation costs, and greater abilities in diagnosis, reconfigurability and maintenance. Furthermore, the technologies used in computer and industrial networks, both wired and wireless, have progressed rapidly, providing high bandwidth, quality of service (QoS) guarantees and low communication costs. Because of these distinctive benefits, these systems are nowadays applied in various fields of industry and services. X-by-wire automotive systems, coordinated control of swarms of mobile robots and advanced aircraft on-board control and housekeeping are examples of NCS usage in the field of embedded systems. Large-scale utility systems are deployed to control and monitor water, gas and energy networks, as well as transportation services on roads and railways. Wireless sensor networks, which are widespread and very cheap means of cooperatively monitoring physical or environmental conditions, can be enhanced with actuation capabilities to turn them into control systems, as in smart automated buildings.

I.1. Networked control systems and control design challenges

The design of NCS combines the domains of control systems, computer networks, and real-time computing. Historically, tools for the design and analysis of systems related to these disciplines have been designed and used with limited interaction. The increasing complexity of modern computer systems and the rapidly evolving technology of computer networks require more integrated methodologies, specifically suited to NCS.

From a control-theoretic point of view, the main problem to be solved is the achievement of a control objective (i.e. a mixture of stability, performance and reliability requirements) despite the disturbances induced by the distribution of the control system over a network. For instance, the sharing of common computing resources and communication bandwidth by competing control loops along with other general purpose applications introduces random delays and even data losses. Moreover, as the computations are supported by heterogeneous computers and the communication between distributed components may spread over different levels of area networks, NCS become more and more complex and difficult to model. A key built-in feature of a multi-layer NCS is the availability of redundant information pathways, as well as the distributed nature of the overall task that the NCS has to perform. Hence the distribution of devices on nodes provides the ability to distribute the computing load and re-route information pathways in the event of a malfunctioning component or network subsystem. This makes it possible to reconfigure a nominally configured NCS into a new system.

Control is playing an increasing role in the design and run-time management of large interconnected systems, both to achieve high performance in nominal modes and to ensure safe reconfiguration when faults and failures occur. In fact, it appears that system-level requirements far exceed the reliability achievable by individual components [MUR 03]. The close intertwining of components and sub-systems, which come from different technologies and are subject to various constraints, calls for a joint design that resolves potentially conflicting constraints early on. Because closed loops based on sensing the current system state give control the ability to cope with uncertainty and disturbances, control is a basic methodology for designing such complex systems.

Besides reaching the specified performance in normal situations, reliability and safety-related problems are a constant concern for system designers. A general and integrated concept is dependability, which is the system property that includes various attributes such as availability, reliability, safety, confidentiality, integrity, and maintainability [LAP 92]. When a system is confronted with faults, errors and failures, its dependability can be achieved in different ways, i.e. fault prevention, fault tolerance, fault removal and fault forecasting [AVI 00]. While these concepts have been formalized for systems in a broad sense, it appears that the existing control toolbox already provides concepts, e.g. robust and fault tolerant control, which can help achieve control system dependability. A better integration of advanced control, computing power and redundancy based on resource distribution is expected to further enhance this capability.

Except in the case of hardware or software component failures, most processes run with nominal behavior; however, even in the nominal modes, neither the process nor the execution resource parameters are ever perfectly known or modeled. A very conservative viewpoint consists of allocating system resources to satisfy the worst case, but this results in the execution resources being over-provisioned and thus wasted. From the control viewpoint, specific deficiencies to be considered include poorly predictable timing deviations, delays, and data loss.

Control usually deals with modeling uncertainty, dynamic adaptation, and disturbance attenuation. More precisely, as shown by recent results obtained on NCS [BAI 07], control loops are often robust and can tolerate, up to a certain extent, disturbances induced by computing and networking performance. Therefore, timing deviations such as jitter or data loss, as long as they remain within bounds compliant with the control specification, may be considered as features of the nominal system, not exceptions. Relying on control robustness allows execution resources to be provisioned according to average needs rather than worst cases, and system reconfiguration to be considered only when failures exceed the tolerance of the running controller.

An NCS is made up of a heterogeneous collection of physical devices, falling within the realm of continuous time, and information sub-systems basically working with discrete timescales. During an NCS design process, many conflicting constraints must be solved simultaneously before reaching a satisfactory and implementable solution. For example, trade-offs must be negotiated between processing and networking speed, control tracking performance, robustness, redundancy and reconfigurability, energy consumption, and overall cost effectiveness. Conventional design processes, which address the problems of the different domains in succession, prevent any coherent and effective integration of methodologies, technologies or associated constraints.

Traditionally, control deals with a single process and a single computer, and it is often assumed that the limitations of communication links and computing resources do not significantly affect performance, or they are taken into account only in a limited way. Existing tools dealing with modeling and identification, robust control, fault diagnosis and isolation, fault tolerant control and flexible real-time scheduling need to be enhanced, adapted and extended to cope with the networked characteristics of the control system.

Finally, the concept of a co-design system approach has emerged to allow progress in the integration of control, computing, and communications in NCS design [MUR 03] and to develop implementation-aware control system co-design approaches [BAI 07].

According to the systems scientist and philosopher C.W. Churchman, “the system approach begins when first you view the world through the eyes of another” [CHU 79]. The basic aspect of co-design applied to NCS is that the design of controllers and the design of the execution resources, i.e. the real-time computing and communication sub-systems, are integrated right from the early design steps to jointly solve the constraints arising from all sides.

I.2. Control design: from continuous time to networked implementation

In the early days of control, analog computers were used to work out the control signals, first with mechanical devices and then with electronic amplifiers and integrators: both the plant and the controller remained in the realm of continuous time, while frequency analysis and the Laplace transform were the main tools at hand. The main drawbacks of analog computing are limited accuracy and bandwidth, drift and noise, and limited capabilities to handle nonlinearities. Pure and known delays could, however, be handled at control synthesis time by using the well-known Smith predictor.

Then, owing to the increasing power and availability of cheap numerical processors, digital controllers gradually replaced analog technology. However, controlling a continuous plant with a discrete digital system inevitably introduces timing distortions. In particular, it becomes necessary to sample the sensor measurements and convert them to binary data and, conversely, to convert the computed control values back to physical signals and hold them at the actuators. Sampling theory and the z transform became the standard tools for digital control systems analysis and design. A useful property of the z transform is that it preserves the linearity of the system through the sampling process. As the underlying assumption behind the z transform is equidistant sampling, periodic sampling became the standard for the design and implementation of digital controllers.

Note that, in the infancy of digital control, when computing power was weak and memory was expensive, it was important to minimize the controllers’ complexity and required computing power. It is not obvious that the periodic sampling assumption is always the best choice: for example, [DOR 62] show that adaptive sampling, where the sampling frequency is changed according to the value of the derivative of the error signal, can be more effective than equidistant sampling in terms of the number of computed samples (but possibly not in terms of disturbance rejection [SMI 71]). [HSI 72] and [HSI 74] provide a summary of these efforts. However, due to the constantly increasing power and decreasing cost of computing, interest in sampling adaptability and the related computing power savings progressively vanished, while the linearity preservation property of equidistant sampling has kept it the indisputable standard for years.
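To make the sample-count argument concrete, the following Python sketch compares equidistant sampling with an adaptive scheme whose period shrinks when the error changes quickly. The update rule h = h_max / (1 + alpha·|de/dt|), the clamping bounds and the exponential error profile are all hypothetical choices for illustration, not the rule used in [DOR 62]; the sketch only counts samples and says nothing about disturbance rejection.

```python
import math

def count_adaptive_samples(err_rate, t_end, h_min, h_max, alpha):
    """Number of samples taken on [0, t_end) when the period shrinks as |de/dt| grows.

    Hypothetical rule: h = h_max / (1 + alpha * |de/dt|), clamped to [h_min, h_max]."""
    t, n = 0.0, 0
    while t < t_end:
        n += 1  # a sample (and control update) is taken at time t
        h = h_max / (1.0 + alpha * abs(err_rate(t)))
        t += min(max(h, h_min), h_max)
    return n

def count_periodic_samples(t_end, h):
    """Equidistant sampling: samples at 0, h, 2h, ... strictly before t_end."""
    return math.ceil(t_end / h)

def err_rate(t):
    """Derivative of a hypothetical error e(t) = exp(-t): large at first, then small."""
    return -math.exp(-t)

adaptive = count_adaptive_samples(err_rate, 5.0, h_min=0.01, h_max=0.5, alpha=50.0)
periodic = count_periodic_samples(5.0, 0.01)  # periodic sampling at the fastest adaptive rate
print(adaptive, periodic)
```

The adaptive scheme samples densely only while the error moves fast, so it needs far fewer samples than a periodic scheme running at its fastest rate; whether this saving is worth the loss of linearity is exactly the trade-off discussed above.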

From the computing side, real-time scheduling modeling and analysis were introduced in the seminal paper [LIU 73]. This first schedulability analysis was based on restrictive assumptions, one of them being the periodicity of all the real-time tasks in the system. Even though more general assumptions have been progressively introduced to cope with more realistic problems and task sets [AUD 95; SHA 04], the periodicity assumption remains very popular, as shown by the current success in industry of tools based on rate monotonic analysis (RMA), e.g. [SHA 90; DOY 94; HEC 94]. The combination of these popular modeling and analysis methods and tools has likely reinforced the belief that control systems are basically periodic, hard real-time systems.
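As an illustration of the periodic task model of [LIU 73], here is a minimal Python sketch of the two classic utilization-based tests, assuming independent periodic tasks with implicit deadlines (D = T) on one pre-emptive processor: the sufficient (but not necessary) rate monotonic bound U ≤ n(2^(1/n) - 1) and the exact EDF condition U ≤ 1. The task parameters are hypothetical (C, T) pairs, i.e. worst-case execution time and period.

```python
def utilization(tasks):
    """Total processor utilization of (C, T) tasks: sum of C/T."""
    return sum(c / t for c, t in tasks)

def rm_bound(n):
    """Liu & Layland utilization bound for n tasks under rate monotonic."""
    return n * (2 ** (1.0 / n) - 1)

def rm_sufficient(tasks):
    """True if the set passes the RM utilization bound (sufficient test only)."""
    return utilization(tasks) <= rm_bound(len(tasks))

def edf_schedulable(tasks):
    """Exact test for pre-emptive EDF with implicit deadlines: U <= 1."""
    return utilization(tasks) <= 1.0

# Hypothetical control tasks: (WCET, period) in ms
tasks = [(1, 4), (1, 5), (2, 10)]
print(round(utilization(tasks), 2), round(rm_bound(3), 3))
print(rm_sufficient(tasks), edf_schedulable(tasks))
```

Here U = 0.65 while the three-task bound is about 0.78, so the set passes under rate monotonic. A set that fails the bound is not necessarily unschedulable; an exact response-time analysis is then required.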

More recently, again due to the progress in electronic devices technology, it has become possible to distribute control loops over networks. Networking allows for the dissemination of sensors, actuators, and controllers on different physical nodes. Moreover, the topology of the network can be time varying, thus allowing the control devices to be mobile: hence, the whole control system can be highly adaptive in a dynamic environment. In particular, wireless communications allow for a cheap deployment of sensor networks and remotely controlled devices. However, networking also induces disturbances in control loops, such as variable and potentially long delays, data corruption, out-of-order message delivery and occasional data loss. These timing uncertainties and disturbances add to those coming from the digital implementation of the controllers in the network nodes.

I.3. Timing parameter assignment

Digital control systems can be implemented as a set of tasks running on top of a commercial off-the-shelf real-time operating system (RTOS) using fixed-priority pre-emptive scheduling. The performance of a control loop, e.g. measured by the tracking error, and even more importantly its stability, strongly relies on the values of the sampling rates and sensor-to-actuator latencies (the latency considered for control purposes is the delay between the instant when a measurement $q_n$ is taken at a sensor and the instant when the control signal $U(q_n)$ is received by the actuators [ÅST 97]). Therefore, it is essential that the implementation of the controller achieves adequate timing behavior to meet the expected performance. However, implementation constraints such as multi-rate sampling, pre-emption, synchronization, and various sources of delays make the run-time behavior of the controller very difficult to predict accurately. On the other hand, the robustness and adaptivity of closed-loop controllers can be exploited to design and implement flexible and adaptive real-time control architectures.

Closed-loop digital control systems use a computer to sample sensors, calculate a control law and send control signals to the actuators of a physical process. The control algorithm can be either designed in continuous time and then discretized, or directly synthesized in discrete time, taking into account a model of the plant sampled through a zero-order hold (ZOH). A control theory for linear systems sampled at fixed rates was established long ago [ÅST 97].
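The discrete-time synthesis route starts from a ZOH-sampled plant model. As a minimal sketch, assume a hypothetical first-order plant $\dot{x} = -a x + b u$ with $a > 0$; holding $u$ constant over each period $h$ gives the exact recurrence $x_{k+1} = \Phi x_k + \Gamma u_k$ with $\Phi = e^{-ah}$ and $\Gamma = (b/a)(1 - e^{-ah})$. The Python code below computes $\Phi$ and $\Gamma$ and steps the recurrence; at the sampling instants it matches the continuous-time solution.

```python
import math

def zoh_first_order(a, b, h):
    """Exact zero-order-hold discretization of dx/dt = -a*x + b*u (a > 0).

    Returns (phi, gamma) such that x[k+1] = phi * x[k] + gamma * u[k]."""
    phi = math.exp(-a * h)
    gamma = (b / a) * (1.0 - phi)
    return phi, gamma

def step_response(phi, gamma, x0, u, steps):
    """Iterate the sampled model with a constant input u held by the ZOH."""
    xs = [x0]
    for _ in range(steps):
        xs.append(phi * xs[-1] + gamma * u)
    return xs

# Hypothetical plant: a = 2 1/s, b = 4, sampled every h = 0.1 s
phi, gamma = zoh_first_order(2.0, 4.0, 0.1)
xs = step_response(phi, gamma, 0.0, 1.0, 5)
# At t = 5h = 0.5 s the continuous solution is (b/a)(1 - exp(-a*t)) = 2(1 - e^-1)
print(xs[5], 2.0 * (1.0 - math.exp(-1.0)))
```

Because the input really is constant between samples, this discretization is exact rather than an approximation; it is the kind of model on which a discrete-time controller can be synthesized directly.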

Assigning an adequate value to the sampling rate is a decisive task, as this value has a direct impact on control performance and stability. While an absolute lower limit for the sampling rate is given by Shannon’s theorem, in practice, rules of thumb are used to give a useful range of control frequencies according to the process dynamics and the desired closed-loop bandwidth. One such rule of thumb, given in [ÅST 97], is $\omega_c h \approx 0.15 \ldots 0.5$, where $\omega_c$ is the desired closed-loop pulsation and $h$ is the sampling period. Note that such rules only give preliminary information about the sampling rate to be actually implemented, and sampling rate selection needs to be further refined by simulations and experiments. In particular, it appears that the sampling rate actually needed by some nonlinear systems, such as those described in sections 1.4.2.3, 2.4.4, and in Chapter 7, must be much faster to achieve closed-loop stability. However, most often, it can be stated that the lower the control period and latencies are, the better the control performance is, e.g. measured by the tracking error or disturbance rejection. This assumption can be reinforced by providing a suitable control structure and parameter tuning, as shown in section 2.3 with the discussion on weakly hard real-time constraints and accelerable control tasks.
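The rule of thumb $\omega_c h \approx 0.15 \ldots 0.5$ can be turned into a quick calculator. This Python sketch assumes only that relation; the function name and the example closed-loop pulsation of 10 rad/s are hypothetical, and the resulting range is only a starting point to be refined by simulation and experiment.

```python
def sampling_period_range(omega_c, low=0.15, high=0.5):
    """Candidate sampling periods (h_min, h_max) in seconds from omega_c * h in [low, high].

    omega_c: desired closed-loop pulsation in rad/s."""
    if omega_c <= 0:
        raise ValueError("omega_c must be positive")
    return low / omega_c, high / omega_c

h_min, h_max = sampling_period_range(10.0)  # hypothetical omega_c = 10 rad/s
print(f"h in [{h_min * 1e3:.0f}, {h_max * 1e3:.0f}] ms, "
      f"i.e. {1 / h_max:.0f} to {1 / h_min:.0f} Hz")
```

For $\omega_c$ = 10 rad/s this suggests periods between 15 and 50 ms, i.e. roughly 20 to 67 Hz.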

While timing uncertainties have an impact on the control performance, the actual scheduling parameters are difficult to model accurately or to constrain within precisely known bounds. Thus, it is worth examining the sensitivity of control systems w.r.t. timing fluctuations. The accepted wisdom is that the lower the control period is, the better the control performance is. However, the underlying implementation system is a limited resource and cannot accommodate arbitrarily high sampling rates; thus, there must be a trade-off between the control performance and the execution resource utilization. This is particularly true for distributed embedded system design with limited resources due to weight, cost and energy consumption constraints. [MOY 07] and [LIA 02] give the illustrative chart in Figure I.2 to show the importance of choosing a sampling period that trades off control performance against the related network load.

Figure I.2. Performance comparison of continuous control, digital control, and networked control vs. sampling rate

From Figure I.2, it can be seen that the control performance is acceptable for sampling rates ranging from PB to PC. Increasing the sampling rate beyond PC increases the network load and leads to longer network-induced delays, which degrade control performance. Note that, for a given control application, the network bandwidth that can be allocated may therefore vary within a corresponding range. This provides the network QoS designer with a larger solution space and more flexibility in designing QoS mechanisms.

The hard real-time assumption must be softened to better cope with the reality of closed-loop control, for instance by replacing hard timing constraints with “weakly hard” constraints [BER 01]. For example, hard deadlines may be replaced by statistical models, e.g. to specify jitter characteristics compliant with the requested control performance. They may also be replaced by deadline-miss or data-loss patterns, e.g. to specify the number of deadline misses allowed over a specified time window according to the so-called (m, k)-firm model [HAM 95]. More precisely, a task meets the (m, k)-firm constraint if at least m among any k consecutive task instances meet their deadline. Note that, to be fully exploited, weakly hard constraints should be associated with a decisional process: tasks missing their deadline can, for example, be delayed, aborted or skipped according to their impact on the control law behavior, e.g. as analyzed in [CER 05].
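The (m, k)-firm definition translates directly into a sliding-window check over a trace of deadline hits and misses. The following Python sketch is illustrative only: it assumes the trace is a list of booleans (True when an instance met its deadline) and, as a simplifying convention of this sketch, does not judge traces shorter than k.

```python
def satisfies_mk_firm(met, m, k):
    """True if every window of k consecutive instances contains at least m deadline hits.

    met: booleans, met[i] is True when instance i met its deadline.
    Assumption: a trace shorter than k has no full window and is accepted."""
    if not 0 < m <= k:
        raise ValueError("require 0 < m <= k")
    met = [bool(x) for x in met]
    if len(met) < k:
        return True
    hits = sum(met[:k])  # hits in the first window
    if hits < m:
        return False
    for i in range(k, len(met)):
        hits += met[i] - met[i - k]  # slide the window by one instance
        if hits < m:
            return False
    return True

# (2, 3)-firm: at most one miss in any three consecutive instances
print(satisfies_mk_firm([True, True, False, True, True, False], 2, 3))
print(satisfies_mk_firm([True, False, False, True], 2, 3))
```

The first trace never has two misses within three consecutive instances, so it satisfies the constraint; the second contains two consecutive misses and violates it.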

Finding the values of such weakly hard constraints for a given control law is, in general, beyond the scope of current control theory. However, the intrinsic robustness of closed-loop controllers allows for relying on softened timing constraint specifications and flexible scheduling design, leading to an adaptive system with graceful performance degradation during system overloads. Chapter 5 gives an example of finding the values of m and k when the (m, k)-firm constraint is applied to the control-loop task execution requirement.

I.4. Control and task/message scheduling

From the implementation point of view, real-time systems are often modeled as a set of periodic tasks assigned to one or several processors. In distributed real-time systems, messages are exchanged among related tasks through a network. To verify that the tasks assigned to a processor meet their deadlines, the worst-case response time analysis technique is often used for fixed-priority real-time systems. Well-known scheduling policies, such as rate monotonic for fixed priorities and EDF for dynamic priorities, assign priorities according to timing parameters, respectively sampling periods and deadlines. They are said to be “optimal” as they maximize the number of task sets which can be scheduled with respect to deadlines, under some restrictive assumptions. Unfortunately, they are not optimized for control purposes. They hardly take into account precedence and synchronization constraints, which naturally appear in a control algorithm, and the relative urgency or criticality of the control tasks can be unrelated to their timing parameters. Thus, the timing requirements of control systems w.r.t. the performance specification do not fit well into scheduling policies based purely on schedulability achievement.
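The worst-case response time analysis can be sketched for fixed-priority pre-emptive scheduling on one processor with the classic recurrence $R_i = C_i + \sum_{j \in hp(i)} \lceil R_i / T_j \rceil C_j$, iterated from $R_i = C_i$ until it reaches a fixed point. The Python sketch below assumes independent periodic tasks, deadlines equal to periods, no blocking and no release jitter; the task values are hypothetical.

```python
import math

def response_times(tasks):
    """Worst-case response times for fixed-priority pre-emptive scheduling.

    tasks: (C, T) pairs ordered from highest to lowest priority (e.g. rate
    monotonic: shortest period first); deadlines are assumed equal to periods.
    Returns one response time per task, or None when it would exceed T."""
    results = []
    for i, (c_i, t_i) in enumerate(tasks):
        r = c_i
        while True:
            # Pre-emptions by each higher-priority task released within the window r
            interference = sum(math.ceil(r / t_j) * c_j for c_j, t_j in tasks[:i])
            r_next = c_i + interference
            if r_next > t_i:
                results.append(None)  # deadline can be missed
                break
            if r_next == r:
                results.append(r)  # fixed point reached
                break
            r = r_next
    return results

print(response_times([(2, 4), (2, 6)]))
```

For this set, the utilization 5/6 ≈ 0.833 exceeds the two-task rate monotonic utilization bound of about 0.828, yet the analysis gives response times of 2 and 4, both within their periods: the exact test accepts what the utilization bound rejects.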

It has been shown through experiments, e.g. [CER 03], that a blind use of such traditional scheduling policies can lead to an inefficient controller implementation; on the other hand, a scheduling policy based on an application’s requirements, associated with a smart partition of the control algorithm into real-time modules, may give better results. Improving a computing-related feature may even conflict with improving the control behavior. For example, the case studies examined in [BUT 07] show that an effective method to minimize the output control jitter consists of systematically delaying the output delivery until the end of the control period: however, this method also introduces a systematic one-period input/output latency, and therefore most often provides the worst control performance among the set of considered strategies.
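The latency/jitter trade-off reported for [BUT 07] can be illustrated with simple arithmetic. In this Python sketch, the per-instance completion offsets (time from sampling to the end of the control computation) are hypothetical values. Emitting the output as soon as it is computed keeps latency low but lets it vary, whereas holding it until the end of the period removes the jitter at the cost of a full-period latency.

```python
def output_timing(completion_offsets, period, hold_to_period_end):
    """Return (mean input/output latency, output jitter = max - min latency).

    completion_offsets: time from sampling to the end of the control computation
    for each instance, assumed to be shorter than the period."""
    if hold_to_period_end:
        latencies = [period] * len(completion_offsets)  # output always one period after sampling
    else:
        latencies = list(completion_offsets)  # output as soon as it is computed
    mean = sum(latencies) / len(latencies)
    return mean, max(latencies) - min(latencies)

offsets = [2, 3, 5, 2]  # hypothetical completion offsets in ms, period T = 10 ms
print(output_timing(offsets, 10, hold_to_period_end=False))
print(output_timing(offsets, 10, hold_to_period_end=True))
```

The first strategy gives a mean latency of 3 ms with 3 ms of jitter; the second gives zero jitter but a constant 10 ms latency, which is what usually degrades control performance.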

Another example of a mismatch between computing and control requirements arises when priority inheritance or priority ceiling protocols are used to limit priority inversion due to mutual exclusion, e.g. to ensure the integrity of shared data. While they are designed to avoid deadlocks and minimize priority inversion lengths, such protocols alter the initial schedule at run time, even though it was carefully designed with latencies and control requirements in mind. As a consequence, latencies along some control paths can be greatly increased, leading to poor control performance or even instability.

In a distributed system design, not only task set schedulability but also message transmission through the network must be ensured, since sensor-to-actuator latencies heavily depend on transmission delays. Also, the schedulability tests must consider tasks and messages as a whole, since a message is produced by a task execution and may be needed to trigger another task execution. In [TIN 94], a holistic approach is proposed. It consists of applying the worst-case response time analysis technique to evaluate the end-to-end response time of a set of tasks distributed over a controller area network (CAN). Periodic messages over CAN are scheduled under a non-pre-emptive, fixed-priority policy, and their release jitter is caused by local task scheduling. The worst case considered is the one where all messages are released at the same time. This approach can effectively be used to validate distributed control applications, but suffers from the resource over-provisioning problem because it must consider the worst case. The inherent robustness of the closed-loop control application is not exploited.
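To give a flavor of response time analysis applied to CAN messages, the Python sketch below iterates a simplified non-pre-emptive fixed-priority recurrence: the queuing delay is $w_m = B_m + \sum_{k \in hp(m)} \lceil (w_m + J_k + \tau_{bit}) / T_k \rceil C_k$ and the response time is $R_m = J_m + w_m + C_m$. It is a sketch under stated assumptions, not the exact formulation of [TIN 94]: times are integers in bit-times, the blocking term $B_m$ is conservatively taken as the longest frame of lower or equal priority, only the first instance of each message is examined, deadlines equal periods, and all message parameters are hypothetical.

```python
import math

def can_response_times(msgs, tau_bit=1):
    """Simplified worst-case response times of periodic CAN messages.

    msgs: (C, T, J) tuples in bit-times, highest priority (lowest identifier) first.
    C: frame transmission time, T: period, J: release jitter inherited from the
    sending task. Returns one response time per message, or None if it exceeds T."""
    results = []
    for m, (c_m, t_m, j_m) in enumerate(msgs):
        # Conservative blocking (assumption of this sketch): longest frame of
        # lower or equal priority that may already be on the bus
        b_m = max(c for c, _, _ in msgs[m:])
        w = b_m
        while True:
            w_next = b_m + sum(
                math.ceil((w + j_k + tau_bit) / t_k) * c_k
                for c_k, t_k, j_k in msgs[:m]
            )
            if j_m + w_next + c_m > t_m:
                results.append(None)  # response time would exceed the period
                break
            if w_next == w:
                results.append(j_m + w + c_m)
                break
            w = w_next
    return results

# Three hypothetical 130-bit frames with periods of 1000, 2000 and 5000 bit-times
print(can_response_times([(130, 1000, 0), (130, 2000, 0), (130, 5000, 0)]))
```

With these values the sketch returns 260, 390 and 520 bit-times. An end-to-end latency in the holistic spirit would then add the response times of the sending and receiving tasks, with the message inheriting its release jitter from the sender.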

Finally, off-line schedulability analysis relies on correctly estimating the tasks' worst-case execution times (WCET). Even in embedded systems, processors use caches and pipelines to improve the average computing speed, but this decreases timing predictability. When a network is included, further timing uncertainty arises, because network-induced delays and data loss vary widely depending on the network protocols. Consider, for example, two widely used industrial networks: CAN and switched Ethernet.

CAN uses a global priority-based medium access control protocol. High-priority messages have lower transmission latencies, while low-priority ones can suffer from longer transmission delays [LIA 01]. An Ethernet switch can also handle messages using quite different scheduling policies (e.g. FIFO, weighted round-robin (WRR), fixed priority), resulting in very different transmission delays. For real-time computing and networking designers, the new challenge is to design quality of service (QoS) mechanisms that meet specified control-loop requirements. These mechanisms must schedule both the tasks in multitasking computing and the messages managed by network protocols (e.g. message scheduling at the MAC level, routing, etc.). Traditional QoS approaches assume that each application has a deadline. They often use a static resource allocation principle based on the worst-case situation to provide delay/deadline guarantees. This approach also leads to resource over-provisioning, since a worst-case scenario is considered.
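The global priority-based medium access of CAN can be sketched as bitwise arbitration. Nodes start transmitting their identifiers simultaneously, most significant bit first, on a wired-AND bus where a dominant 0 overrides a recessive 1. A node that sends a recessive bit but reads a dominant one withdraws, so the lowest identifier wins. The sketch assumes 11-bit standard identifiers that are unique on the bus. The function name is hypothetical.

```python
from typing import Iterable


def can_arbitrate(identifiers: Iterable[int], id_bits: int = 11) -> int:
    """Return the identifier that wins bitwise arbitration among simultaneous senders."""
    contenders = set(identifiers)  # identifiers are assumed unique on the bus
    if not contenders:
        raise ValueError("no frame pending")
    for bit in range(id_bits - 1, -1, -1):  # MSB first
        # Wired-AND: the bus reads 0 (dominant) if any contender sends 0
        bus_level = min((ident >> bit) & 1 for ident in contenders)
        # Nodes whose bit differs from the bus level lose and back off
        contenders = {ident for ident in contenders if (ident >> bit) & 1 == bus_level}
    (winner,) = contenders
    return winner


if __name__ == "__main__":
    print(hex(can_arbitrate([0x65, 0x12, 0x7FF])))
```

The losing nodes retry after the current frame. Repeated losses are the source of the longer, load-dependent delays experienced by low-priority messages.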

Another source of uncertainty may come from some parts of the estimation and control algorithms themselves. For example, the duration of a vision process highly depends on incoming data from a dynamic scene. Also, some algorithms are iterative, with a poorly predictable convergence rate, so the time before reaching a predefined threshold is unknown (and must be bounded by a timeout associated with a recovery process). In a dynamic environment, some of the less important control activities can be suspended or resumed in the case of transient overload, or alternative control algorithms with different costs can be scheduled according to various control modes, leading to large variations in the computing load.
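An iterative computation bounded by a timeout and a recovery action, as mentioned above, might look like the following sketch. A Newton iteration stands in for an estimation or control algorithm with an unpredictable convergence rate. The time budget is checked with a monotonic clock plus an iteration cap. The function name, its parameters, and the fallback policy (e.g. reusing the previous control value) are hypothetical.

```python
import time
from typing import Callable, Tuple


def bounded_iterate(step: Callable[[float], float], x0: float, tol: float,
                    budget_s: float, fallback: float,
                    max_iter: int = 10_000) -> Tuple[float, bool]:
    """Iterate x <- step(x) until |x_next - x| < tol or the time/iteration budget runs out.

    Returns (value, converged). On timeout, the recovery value `fallback` is returned.
    """
    deadline = time.monotonic() + budget_s
    x = x0
    for _ in range(max_iter):
        x_next = step(x)
        if abs(x_next - x) < tol:
            return x_next, True
        if time.monotonic() > deadline:
            break  # timeout: hand over to the recovery process
        x = x_next
    return fallback, False


if __name__ == "__main__":
    newton_sqrt2 = lambda x: 0.5 * (x + 2.0 / x)
    print(bounded_iterate(newton_sqrt2, 1.0, 1e-12, budget_s=0.001, fallback=0.0))
```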

Thus, real-time control design based on worst-case execution times, maximum expected delays, and strict deadlines inevitably leads to low average usage of the computing resources and to poor adaptability w.r.t. a complex execution environment. All these drawbacks call for a better integration of control objectives with computing and communication capabilities. This can be achieved through a co-design approach that takes into account both the actual application requirements and the characteristics of the implementation system.

I.5. Diagnosis and fault tolerance in NCS

Due to the increasing complexity of dynamic systems, as well as the need for reliability, safety, and efficient operation, model-based fault diagnosis has become an important subject in modern control theory and practice, e.g. [WIL 76; FRA 90; GER 98]. Model-based techniques include observer-based, parity-relation, and parameter-estimation approaches [CHE 99; MAN 00; ZHA 03]. When sampled measurements and control data are transmitted over the network, network-induced effects such as time delays and packet losses naturally arise. Because of these effects, the theories developed for traditional point-to-point systems must be revisited when dealing with NCSs. Several studies have shown the importance of taking network characteristics into account in the design of a fault diagnosis system [DIN 06; LLA 06]. The main idea of these approaches is to minimize the false alarms caused by transmission delays. To this end, the network-induced delay is considered when designing the fault detection and isolation (FDI) filter. On the other hand, FDI algorithms require specific information on the process. Their implementation therefore increases the network load and consequently affects its QoS. The number of signals to be transmitted may be reduced by allowing only some of the sensors and actuators to access the network. In this case, less information is available for FDI at each sampling time. In [ZHA 05], observability conditions are established for such a reduced communication pattern.
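The following sketch illustrates why ignoring transmission delays causes false alarms. It uses a scalar plant x[k+1] = a x[k] + b u with a parallel model (an observer with zero output-injection gain) as residual generator, and a sensor bias as the fault. It assumes a constant, known delay of `delay` samples, e.g. recovered from timestamps.

- The naive residual compares the delayed measurement with the current estimate.
- The delay-aware residual compares it with the estimate for the instant at which the measurement was taken.

All names, parameters, and numerical values are hypothetical.

```python
from typing import Dict, List, Tuple


def delayed_residuals(n: int, delay: int, fault_start: int, fault_bias: float,
                      a: float = 0.9, b: float = 1.0,
                      u: float = 1.0) -> Tuple[Dict[int, float], Dict[int, float]]:
    """Return (naive, delay_aware) residuals keyed by the step k at which a sample arrives."""
    x = [0.0] * n      # true plant state; y[k] = x[k] (+ sensor bias once the fault occurs)
    x_hat = [0.0] * n  # parallel model prediction (observer with zero gain)
    for k in range(n - 1):
        x[k + 1] = a * x[k] + b * u
        x_hat[k + 1] = a * x_hat[k] + b * u
    y = [x[k] + (fault_bias if k >= fault_start else 0.0) for k in range(n)]
    naive: Dict[int, float] = {}
    aware: Dict[int, float] = {}
    for k in range(delay, n):
        received = y[k - delay]                 # sample taken at k - delay arrives at k
        naive[k] = received - x_hat[k]          # treats the sample as if it were current
        aware[k] = received - x_hat[k - delay]  # compares with the matching estimate
    return naive, aware


def alarms(residuals: Dict[int, float], threshold: float) -> List[int]:
    """Steps at which the residual magnitude exceeds the detection threshold."""
    return [k for k, r in residuals.items() if abs(r) > threshold]
```

Without any fault, the naive residual raises alarms during the plant transient, whereas the delay-aware residual stays at zero. With a fault at step 30 and a 2-sample delay, the delay-aware residual detects it at step 32, when the first faulty sample arrives.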

Building on fault diagnosis algorithms for NCSs, fault-tolerant control (FTC) of NCSs can be obtained. Existing fault-tolerant control techniques against actuator faults can be categorized into two groups: passive [SEO 96; CHE 04] and active approaches [ZHA 02; ZHA 06]. [ZHE 03] proposed a passive controller for NCSs considering random time delays. Although passive controllers are easy to implement, their performance is relatively conservative. This class of controllers has a fixed structure and fixed parameters designed for an assumed set of component failures, yet it must handle all the possible failure scenarios. If a failure occurs that was not considered in the design, the stability and performance of the closed-loop system are no longer guaranteed. Such potential limitations of passive approaches motivate research on active FTC (AFTC). AFTC procedures require an on-line, real-time fault diagnosis process and a controller reconfiguration mechanism. Because AFTC approaches offer the flexibility to select different controllers according to different component failures, better closed-loop performance is expected. However, this holds only if the fault diagnosis process provides a correct and timely decision.
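A scalar worked example shows both the benefit of active reconfiguration and its dependence on correct diagnosis. Take the plant x[k+1] = a x[k] + b e u[k], where e in (0, 1] is the actuator effectiveness, under state feedback u[k] = -K x[k]. The closed-loop pole is a - b e K. The reconfiguration rule "divide the nominal gain by the diagnosed effectiveness" and the numbers below are hypothetical illustrations, not the methods of the cited works.

```python
def closed_loop_pole(a: float, b: float, effectiveness: float, gain: float) -> float:
    """Pole of x[k+1] = a*x[k] + b*effectiveness*u[k] with u[k] = -gain*x[k]."""
    return a - b * effectiveness * gain


def active_gain(k_nominal: float, estimated_effectiveness: float) -> float:
    """Hypothetical reconfiguration: rescale the nominal gain by the diagnosed effectiveness."""
    return k_nominal / estimated_effectiveness


if __name__ == "__main__":
    a, b, k_nom = 1.2, 1.0, 1.0  # open-loop unstable plant, nominal pole 0.2
    print("passive, 80% loss:", closed_loop_pole(a, b, 0.2, k_nom))
    print("active, correct diagnosis:", closed_loop_pole(a, b, 0.2, active_gain(k_nom, 0.2)))
    print("active, false alarm:", closed_loop_pole(a, b, 1.0, active_gain(k_nom, 0.2)))
```

The passive controller keeps its nominal gain and drifts to a pole at 1.0 (no longer asymptotically stable) after an 80% effectiveness loss. The active controller restores the pole at 0.2 when the diagnosis is correct, but a false alarm moves the pole to -3.8, which is unstable.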

Some preliminary results on AFTC aim to make the reconfiguration mechanism immune to imperfect fault diagnosis decisions, as in [MAH 03] and [WU 97]. [MAK 04] further discussed this issue by using guaranteed cost control and on-line controller switching so that the closed-loop system remains stable at all times. However, [MAK 04] did not consider a plant controlled over a network.

I.6. Co-design approaches

An NCS encompasses the control-loop application and the implementation system (CPUs and networks, managed by operating systems and protocols). Research work on NCS mainly focuses on robust control-loop design that takes into account implementation-induced delays and data loss. Network delays are assumed to be either constant (which can be achieved by input data buffering) or randomly distributed according to a known probability distribution. Data losses are assumed to follow a Bernoulli process [ANT 07a; ZAM 08]. In these works, the assumptions on network delay or data loss patterns seldom consider either the actual network characteristics or the QoS mechanisms specific to each type of network. In fact, using a prioritized bus like CAN, a switched Ethernet, or a wireless sensor network results in fundamentally different QoS characteristics. This point is of primary importance when the network is shared by several control loops and by other applications whose exact characteristics are often unknown at the control-loop design step. In this case, traffic scheduling has a great impact on both delay variations and packet loss. These in turn affect the control quality (stability and performance) of the control loops. One solution to this problem relies on a tight coupling between the control specification and the implementation system at design time.
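The input-buffering technique mentioned above, which turns a random delay into a constant one, can be sketched as follows. Each sample is held until its send time plus a fixed budget d_max. Samples arriving later than that are treated as lost. The sketch assumes that samples carry send timestamps and that node clocks are synchronized. The function name and values are hypothetical.

```python
from typing import List, Optional


def buffered_release_times(send_times: List[float], delays: List[float],
                           d_max: float) -> List[Optional[float]]:
    """Release each sample exactly d_max after it was sent; None marks a sample that
    arrived too late and is dropped (it then appears to the controller as a data loss)."""
    releases: List[Optional[float]] = []
    for t_send, d in zip(send_times, delays):
        arrival = t_send + d
        target = t_send + d_max
        releases.append(target if arrival <= target else None)
    return releases


if __name__ == "__main__":
    print(buffered_release_times([0.0, 10.0, 20.0], [1.0, 3.5, 6.0], d_max=5.0))
```

Choosing d_max trades latency against loss. A larger budget lets more samples through but imposes a longer constant delay on every sample.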

Generally speaking, two ways to achieve an efficient NCS design can be distinguished. The first, currently being explored by control theorists, can be called “implementation-aware control law design.” The idea is to adapt the control-loop parameters on-line. Examples include the following:

- adjusting the control-loop sampling period using an LQ approach [EKE 00; CER 03];
- sampling-time and/or delay-dependent gain scheduling [MAR 04; SAL 05];
- a robust H∞ design [SIM 05];
- hybrid rate adaptation [ANT 07b];
- adjusting both the actuation intervals and the control gains, either with model predictive control and hybrid modeling [Ben 06] or with LQ control combined with an (m, k)-firm policy [JIA 07; FEL 08].
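As an illustration of sampling-time-dependent gains, consider a scalar continuous-time plant dx/dt = a x + b u under zero-order hold with period h. Its discretization is Φ(h) = e^{ah}, Γ(h) = b (e^{ah} - 1) / a. The gain K(h) = (Φ(h) - e^{ph}) / Γ(h) places the closed-loop discrete pole at e^{ph}, the image of a desired continuous pole p, whatever the current period. This is a plain pole-placement sketch under these assumptions, not the specific method of the cited works. The function names are hypothetical.

```python
import math
from typing import Tuple


def zoh_scalar(a: float, b: float, h: float) -> Tuple[float, float]:
    """Zero-order-hold discretization (Phi, Gamma) of dx/dt = a*x + b*u with period h."""
    phi = math.exp(a * h)
    gamma = b * math.expm1(a * h) / a if a != 0 else b * h
    return phi, gamma


def gain_for_period(a: float, b: float, p: float, h: float) -> float:
    """Feedback gain K(h) such that Phi(h) - Gamma(h)*K(h) = exp(p*h)."""
    phi, gamma = zoh_scalar(a, b, h)
    return (phi - math.exp(p * h)) / gamma


if __name__ == "__main__":
    for h in (0.01, 0.05, 0.2):  # periods chosen on-line, e.g. by a feedback scheduler
        print(h, gain_for_period(a=1.0, b=1.0, p=-2.0, h=h))
```

A scheduler that changes h on-line would recompute (or look up) K(h) at each period change, keeping the closed-loop dynamics close to the continuous-time design.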

The second way, so-called “control-aware QoS adaptation,” is being explored by network QoS designers. The idea is to re-allocate the implementation system resources on-line to maintain or increase the QoS level required by the control application. In [JUA 07], a hybrid CAN message priority allocation scheme is proposed, in which a dynamic priority field can give a message a higher priority when it has an urgent transmission need. This work exhibits a link between the hybrid priority scheme and the control-loop performance. [DIO 07] presents the dynamic allocation of bandwidth in Ethernet switches with a weighted round-robin (WRR) scheduler. The allocation is based on both the observed delay and the variation in control quality (i.e. the difference between the reference and the process state). For this purpose, the bandwidth share (i.e. the weight assigned to each data flow or Ethernet switch port) is defined as a function of the sensor-to-actuator delay and the current quality of control (QoC) level.
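A minimal sketch of QoC-driven WRR weighting is given below. Each flow receives an urgency score combining its normalized sensor-to-actuator delay and its current control error. The scores are mapped to integer WRR weights, and one WRR round then serves up to `weight` frames per queue. The scoring formula and its parameters (alpha, beta, delay_ref, total_weight) are hypothetical assumptions, not the function used in [DIO 07].

```python
from collections import deque
from typing import Deque, List, Sequence


def qoc_weights(delays: Sequence[float], errors: Sequence[float], total_weight: int = 8,
                delay_ref: float = 1.0, alpha: float = 1.0, beta: float = 1.0) -> List[int]:
    """Hypothetical mapping from (delay, control error) to integer WRR weights."""
    scores = [alpha * d / delay_ref + beta * abs(e) for d, e in zip(delays, errors)]
    total = sum(scores)
    if total == 0:
        return [1] * len(scores)  # nothing urgent: equal shares
    # Every flow keeps at least weight 1 so that no queue starves
    return [max(1, round(total_weight * s / total)) for s in scores]


def wrr_round(queues: List[Deque[str]], weights: Sequence[int]) -> List[str]:
    """One weighted round-robin round: serve up to `weight` frames from each queue in turn."""
    sent: List[str] = []
    for q, w in zip(queues, weights):
        for _ in range(min(w, len(q))):
            sent.append(q.popleft())
    return sent


if __name__ == "__main__":
    w = qoc_weights(delays=[1.0, 1.0], errors=[0.0, 2.0])  # loop B is far from its reference
    qs = [deque(f"a{i}" for i in range(3)), deque(f"b{i}" for i in range(7))]
    print(w, wrr_round(qs, w))
```

The loop with the larger control error receives the larger share of the output port in this round. Recomputing the weights periodically closes the "network feedback" loop between QoC and QoS.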

Of course, combining both approaches will contribute to a more efficient NCS design. Another very important aspect is diagnosis and fault tolerance in NCS, which must be integrated into the co-design approach to achieve an efficient and dependable NCS design.

I.7. Outline of the book

This book intends to provide an introduction to the problems that arise in NCS design and to present the different co-design approaches.

The first chapter provides preliminary concepts, together with state of the art techniques and existing solutions to implement distributed process control and diagnosis. In particular, control-aware static computing and network resource allocation schemes are first reviewed. Then, the real-time adaptive resource allocation approach through feedback scheduling is described, and its feasibility is assessed through a robot control application. Techniques for diagnosis and fault tolerance for networked control systems are also reviewed.

A first step toward dependable control systems consists of using robust controllers, i.e. controllers that are weakly sensitive to uncertainties in both the process model and the execution resources. Chapter 2 deals with implementation-aware, or more precisely computing-aware, robust control. Computation durations, preemption between tasks, and communication over networks are various sources of delay in the control loops. Control systems with constant delays were studied earlier. Taking into account more realistic variable or poorly known delays has recently brought new and powerful results, which are surveyed in section 2.2. Traditionally, real-time control systems have been considered as “hard real-time”, i.e. systems where timing deviations such as deadline misses are forbidden. In fact, closed-loop systems have some intrinsic robustness against timing uncertainties. As shown in section 2.3, this robustness can be exploited by treating control tasks as weakly hard real-time tasks. Robustness can be considered a passive approach w.r.t. timing uncertainties, which are not measured and are only assumed to have known bounds. When the control intervals can be controlled, the controller gains can be adapted to the varying control interval in combination with robust control design, as developed in section 2.4.

In addition to controller design, the QoC can be enhanced by techniques that adjust the QoS offered by a network. Chapter 3 deals with control-aware dynamic network QoS adaptation. The key point here is to determine the relation between QoC and QoS. QoC might be formulated in terms of overshoot or damping, for instance, whereas QoS is often expressed in terms of delays. As QoS adaptation mechanisms differ with the kind of network considered, two approaches are illustrated in this chapter. One is based on the CAN bus, the other on switched Ethernet architectures, both widely used in industrial networks. For the CAN protocol, a dynamic hybrid message-priority allocation that takes the control application needs into account is proposed. For switched Ethernet networks, bandwidth allocation control strategies are defined. In both approaches, the network feedback control relies on the current QoS and on dynamic application-related parameters, such as the deviation of the process state or output, or certain control-loop cost functions. Finally, since networks might have to support several applications, the QoS offered to each one is adjusted according to its specific needs.

Feedback scheduling, as presented in the previous chapters, provides an effective but limited adaptation of execution resource allocation w.r.t. varying operating conditions, because the control performance is not directly taken into account when tuning the scheduling parameters. Through a few case studies, Chapter 4 describes more elaborate control and scheduling co-design schemes that couple control performance and scheduling parameters more tightly. In section 4.2, varying-sampling control laws are used as building blocks for such co-design schemes, without guarantees on the stability of the global loops. Section 4.3 summarizes a sub-optimal solution for control/scheduling co-design using a slotted timescale, based on the model predictive control approach. A convex optimization approach in the framework of linear systems and linear quadratic control is provided in section 4.4. Finally, section 4.5 describes an LPV-based joint control and scheduling approach applied to the control of a robot arm.

During system and network overload, excessive delays, or even data loss, may occur. To maintain the QoC of an NCS, the implementation system overload must be dealt with. As shown in Chapters 1 and 4, a common approach to this overload problem is to dynamically change the sampling period of the control loops. Chapter 5 proposes an alternative to explicit sampling period adjustment: an indirect adjustment based on selectively dropping sampled data according to the (m, k)-firm model [HAM 95]. The advantage of this alternative is its ease of implementation. Its drawback is a coarser adjustment, since only multiples of the basic sampling period can be obtained.
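The (m, k)-firm constraint [HAM 95] requires that at least m out of any k consecutive instances meet their deadlines. Here an instance "meets" its deadline when its sample is kept rather than dropped. The sketch checks this constraint with a sliding window. It also generates one simple static drop pattern that keeps the first m instances of every block of k. Windows are checked only once k instances have been observed. The pattern is a hypothetical example, not necessarily the one used in Chapter 5.

```python
from collections import deque
from typing import Deque, Iterable, List


def satisfies_mk_firm(outcomes: Iterable[bool], m: int, k: int) -> bool:
    """True if every window of k consecutive instances contains at least m kept instances."""
    window: Deque[bool] = deque(maxlen=k)
    for kept in outcomes:
        window.append(kept)
        if len(window) == k and sum(window) < m:
            return False
    return True


def keep_m_of_k(n: int, m: int, k: int) -> List[bool]:
    """Hypothetical static drop pattern: keep the first m instances of each block of k."""
    return [(j % k) < m for j in range(n)]


if __name__ == "__main__":
    pattern = keep_m_of_k(12, 3, 4)
    print(pattern, satisfies_mk_firm(pattern, 3, 4))
```

With m = 3 and k = 4, samples are kept at instants 0, 1, 2, 4, 5, 6, and so on. The intervals between kept samples are therefore 1, 1, and 2 basic periods, an average of k/m = 4/3 basic periods. This is the coarse, multiple-of-the-basic-period adjustment mentioned above.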

Chapter 6 deals with the processing of faults that may occur during NCS operation. These faults may affect the system's physical components, its sensors and actuators, or even the network itself. From a safety point of view, it is important to detect the occurrence of such faults and to identify the faulty components through fault detection and isolation (FDI). FDI enables an automatic reaction by the supervision system (for instance, automatic shutdown in case of danger) or a reaction by the human operators in charge of the installation (for instance, manual open-loop control). When these faults exceed the capabilities of robust control loops, they can sometimes be accommodated by means of FTC, using advanced control algorithms that compensate for the system's deficiencies. Section 6.2 recalls the basic results in FDI/FTC for the centralized case. The drawbacks of networking for fault detection and isolation are then highlighted, and some pragmatic solutions are given in section 6.4. Finally, more advanced techniques for FDI/FTC over networks subject to delays and data loss, some of which are still research topics, are given in section 6.5.

Finally, Chapter 7 is devoted to the experimental validation of the approaches presented in the previous chapters, to assess their feasibility and effectiveness. A miniature quad-rotor drone was used throughout the SafeNecs project¹ as a common setup to integrate the contributions of the team's partners. These contributions include variable sampling control, control under (m, k)-firm scheduling constraints, diagnosis and FDI over a network, dynamic priorities on a CAN bus, and fault-tolerant control of the set of actuators. The development process starts with simulation under Matlab/Simulink; real-time constraints are then added and evaluated using the TrueTime toolbox. The next step consists of setting up a “hardware-in-the-loop” real-time simulation before performing tests on the real process.

I.8. Bibliography

[ANT 07a] ANTSAKLIS P., AND BAILLIEUL J., Guest editorial, special issue on Technology of networked control systems, Proceedings of the IEEE, vol. 95, p. 5–8, 2007.

[ANT 07b] ANTUNES A., PEDREIRAS P., ALMEIDA L., AND MOTA A., Dynamic rate and control adaptation in networked control systems, 5th International Conference on Industrial Informatics, Vienna, Austria, p. 841–846, June 2007.

[ÅST 97] ÅSTRÖM K. J., AND WITTENMARK B., Computer-Controlled Systems, Information and System Sciences Series, Prentice Hall, Englewood Cliffs, NJ, 3rd edition, 1997.

[AUD 95] AUDSLEY N., BURNS A., DAVIS R., TINDELL K., AND WELLINGS A., Fixed priority preemptive scheduling: an historical perspective, Real-Time Systems, vol. 8, p. 173–198, 1995.

[AVI 00] AVIŽIENIS A., LAPRIE J.-C., AND RANDELL B., Fundamental concepts of dependability, Report 01145, LAAS, Toulouse, France, 2000.

[BAI 07] BAILLIEUL J., AND ANTSAKLIS P.-J., Control and communication challenges in networked real-time systems, Proceedings of the IEEE, vol. 95, p. 9–28, 2007.

[Ben 06] BEN GAID M., Optimal scheduling and control for distributed real-time systems, PhD thesis, University of Evry Val d’Essonne, France, 2006.

[BER 01] BERNAT G., BURNS A., AND LLAMOSÍ A., Weakly hard real-time systems, IEEE Transactions on Computers, vol. 50, p. 308–321, 2001.

[BUT 07] BUTTAZZO G., AND CERVIN A., Comparative assessment and evaluation of jitter control methods, 15th International Conference on Real-Time and Network Systems, Nancy, France, March 2007.

[CER 03] CERVIN A., Integrated control and real-time scheduling, PhD thesis, Department of Automatic Control, Lund Institute of Technology, Sweden, April 2003.

[CER 05] CERVIN A., Analysis of overrun strategies in periodic control tasks, 16th IFAC World Congress, Prague, Czech Republic, July 2005.

[CHE 99] CHEN J., AND PATTON R., Robust Model Based Fault Diagnosis for Dynamic Systems, Kluwer Academic Publishers, Dordrecht, 1999.

[CHE 04] CHENG C., AND ZHAO Q., Reliable control of uncertain delayed systems with integral quadratic constraints, IEE Proceedings, Control Theory and Applications, vol. 151, p. 790–796, 2004.

[CHU 79] CHURCHMAN C., The Systems Approach and its Enemies, Basic Books, New York, 1979.

[DIN 06] DING S., AND ZHANG P., Observer based monitoring for distributed networked control systems, 6th IFAC Symposium SAFEPROCESS’06, Beijing, China, August 2006.

[DIO 07] DIOURI I., GEORGES J., AND RONDEAU E., Accommodation of delays for NCS using classification of service, International conference on networking, sensing and control, London, UK, April 2007.

[DOR 62] DORF R., FARREN M., AND PHILLIPS C., Adaptive sampling frequency for sampled-data control systems, IEEE Transactions on Automatic Control, vol. 7, p. 38–47, 1962.

[DOY 94] DOYLE L., AND ELZEY J., Successful use of rate monotonic theory on a formidable real time system, Proceedings of the 11th IEEE Workshop on Real-Time Operating Systems and Software, Seattle, USA, p. 74–78, 1994.

[EKE 00] EKER J., HAGANDER P., AND ÅRZÉN K.-E., A feedback scheduler for real-time controller tasks, Control Engineering Practice, vol. 8, p. 1369–1378, 2000.

[FEL 08] FELICIONI F., JIA N., SIMONOT-LION F., AND SONG Y.-Q., Optimal on-line (m,k)-firm constraint assignment for real-time control tasks based on plant state information, 13th IEEE ETFA, Hamburg, Germany, September 2008.

[FRA 90] FRANK P. M., Fault diagnosis in dynamic systems using analytical and knowledge-based redundancy: a survey and some new results, Automatica, vol. 26, p. 459–474, 1990.

[GER 98] GERTLER J., Fault Detection and Diagnosis in Engineering Systems, Marcel Dekker Inc., New York, 1998.

[HAM 95] HAMDAOUI M., AND RAMANATHAN P., A dynamic priority assignment technique for streams with (m, k)-firm deadlines, IEEE Transactions on Computers, vol. 44, p. 1443–1451, December 1995.

[HEC 94] HECHT M., HAMMER J., LOCKE C., DEHN J., AND BOHLMANN R., Rate monotonic analysis of a large, distributed system, IEEE Workshop on Real-Time Applications, Washington, DC, USA, p. 4–7, July 1994.

[HSI 72] HSIA T. C., Comparisons of adaptive sampling control laws, IEEE Transactions on Automatic Control, vol. 17, p. 830–831, 1972.

[HSI 74] HSIA T. C., Analytic design of adaptive sampling control law in sampled data systems, IEEE Transactions on Automatic Control, vol. 19, p. 39–42, 1974.

[JIA 07] JIA N., SONG Y.-Q., AND SIMONOT-LION F., Graceful degradation of the quality of control through data drop policy, Proceedings of the European Control Conference, Kos, Greece, July 2007.

[JUA 07] JUANOLE G., AND MOUNEY G., Networked control systems: definition and analysis of a hybrid priority scheme for the message scheduling, IEEE RTCSA, Daegu, Korea, August 2007.

[LAP 92] LAPRIE J.-C. (ed.), Dependability: Basic Concepts and Terminology, Springer Verlag, New York, 1992.

[LIA 01] LIAN F.-L., MOYNE J., AND TILBURY D., Performance evaluation of control networks: Ethernet, ControlNet, and DeviceNet, IEEE Control Systems Magazine, vol. 21, p. 66–83, February 2001.

[LIA 02] LIAN F.-L., MOYNE J., AND TILBURY D., Network design consideration for distributed control systems, IEEE Transactions on Control Systems Technology, vol. 10, p. 297–307, 2002.

[LIU 73] LIU C., AND LAYLAND J., Scheduling algorithms for multiprogramming in hard real-time environment, Journal of the ACM, vol. 20, p. 40–61, February 1973.

[LLA 06] LLANOS D., STAROSWIECKI M., COLOMER J., AND MELENDEZ J., H∞ detection filter design for state delayed linear systems, 6th IFAC Symposium SAFEPROCESS’06, Beijing, China, August 2006.

[MAH 03] MAHMOUD M., JIANG J., AND ZHANG Y., Active fault tolerant control systems: stochastic analysis and synthesis, vol. 287 of Lecture Notes in Control and Information Sciences, Springer, Berlin, 2003.

[MAK 04] MAKI M., JIANG J., AND HAGINO K., A stability guaranteed active fault-tolerant control against actuator failures, International Journal of Robust and Nonlinear Control, vol. 14, p. 1061–1077, 2004.

[MAN 00] MANGOUBI R., AND EDELMAYER A., Model based fault detection: the optimal past, the robust present and a few thoughts on the future, Proceedings of the fourth IFAC symposium on fault detection supervision and safety for technical processes, SAFEPROCESS’00, Budapest, Hungary, p. 64–75, June 2000.

[MAR 04] MARTI P., YEPEZ J., VELASCO M., VILLA R., AND FUERTES J., Managing quality-of-control in network-based control systems by controller and message scheduling co-design, IEEE Transactions on Industrial Electronics, vol. 51, p. 1159–1167, December 2004.

[MOY 07] MOYNE J., AND TILBURY D., The emergence of industrial control networks for manufacturing control, diagnostics, and safety data, Proceedings of the IEEE, vol. 95, p. 29–47, 2007.

[MUR 03] MURRAY R. M., ÅSTRÖM K. J., BOYD S. P., BROCKETT R. W., AND STEIN G., Future directions in control in an information-rich world, IEEE Control Systems Magazine, vol. 23, April 2003.

[SAL 05] SALA A., Computer control under time-varying sampling period: an LMI gridding approach, Automatica, vol. 41, p. 2077–2082, 2005.

[SEO 96] SEO C., AND KIM B., Robust and reliable H∞ control for linear systems with parameter uncertainty and actuator failure, Automatica, vol. 32, 1996.

[SHA 90] SHA L., AND GOODENOUGH J. B., Real-time scheduling theory and Ada, IEEE Computer, vol. 23, p. 53–62, 1990.

[SHA 04] SHA L., ABDELZAHER T., ÅRZÉN K.-E., CERVIN A., BAKER T., BURNS A., BUTTAZZO G., CACCAMO M., LEHOCZKY J., AND MOK A. K., Real time scheduling theory: a historical perspective, Real-Time Systems, vol. 28, p. 101–156, 2004.

[SIM 05] SIMON D., ROBERT D., AND SENAME O., Robust control / scheduling co-design: application to robot control, Proceedings of the 11th IEEE Real-Time and Embedded Technology and Applications Symposium, San Francisco, USA, March 2005.

[SMI 71] SMITH M., An evaluation of adaptive sampling, IEEE Transactions on Automatic Control, vol. 16, p. 282–284, 1971.

[TIN 94] TINDELL K., AND CLARK J., Holistic schedulability analysis for distributed hard real-time systems, Microprocessors and Microprogramming, vol. 40, p. 117–134, 1994.

[WIL 76] WILLSKY A., A survey of design methods for failure detection in dynamic systems, Automatica, vol. 12, p. 601–611, 1976.

[WU 97] WU N., Robust feedback design with optimized diagnostic performance, IEEE Transactions on Automatic Control, vol. 42, p. 1264–1268, 1997.

[ZAM 08] ZAMPIERI S., Trends in networked control systems, 17th IFAC World Congress, Seoul, Korea, p. 2886–2894, July 2008.

[ZHA 02] ZHANG Y.-M., AND JIANG J., An active fault-tolerant control system against partial actuator failures, IEE Proceedings, Control Theory and Applications, vol. 149, p. 95–104, 2002.

[ZHA 03] ZHANG Y., AND JIANG J., Bibliographical review on reconfigurable fault-tolerant control systems, 5th IFAC Symposium SAFEPROCESS’03, Washington, DC, USA, June 2003.

[ZHA 05] ZHANG L., AND HRISTU-VARSAKELIS D., Stabilization of networked control systems: designing effective communication sequence, 16th IFAC World Congress, Prague, Czech Republic, July 2005.

[ZHA 06] ZHANG J., AND JIANG J., Modeling of vertical gyroscopes with consideration of faults, Proceedings of Safeprocess’2006, Beijing, China, 2006.

[ZHE 03] ZHENG Y., Fault diagnosis and fault tolerant control of networked control systems, PhD thesis, Huazhong University of Science and Technology, 2003.

1 Introduction written by Christophe AUBRUN, Daniel SIMON and Ye-Qiong SONG.

1. SafeNecs is a research project funded by the French “Agence Nationale de la Recherche” under grant ANR-05-SSIA-0015-03

Chapter 1

Preliminary Notions and State of the Art¹

1.1. Overview

Co-design requires sharing, comparing, and gathering the knowledge and perspectives of the stakeholders involved in the design process. Indeed, the design of safe networked control systems involves many basic methodologies and technologies. The essential methodologies involved here are feedback control, real-time scheduling, fault detection and isolation (FDI), filtering and identification, networking protocols, and QoS metrics. Each of them relies on theoretical concepts and on specific domains of applied mathematics such as optimization and information theory. These concepts are in turn implemented via various technologies and devices, involving, for example, mechanical or chemical engineering, continuous and digital electronics, and software engineering.

These basic domains are covered in the existing literature; hence, this chapter is not meant to give an exhaustive overview of all the methodologies and technologies used further in the book. Nor is this book meant to provide an exhaustive state of the art or to be a definitive treatise on the open topic of safe NCS. Rather, it aims at recording and disseminating the experience gathered by the authors during the joint SAFENECS academic research project. The team brought together people from different horizons, with basic backgrounds in control or computer science. Their expertise covered various domains and technologies such as digital control design, modeling of dynamic systems, real-time scheduling, identification and diagnosis, fault-tolerant control (FTC), networking protocols, quality of service (QoS) analysis in networks, and model-based software development, among others.

Beyond the knowledge provided by basic education in control and computer science, some topics that are useful in the joint design of control systems over networks are too specific, or too new and not yet widely disseminated, to be part of basic education in control or industrial computing. The next sections therefore provide additional background on these topics, which will be useful in what follows.

Section 1.2 gives preliminary notions about real-time scheduling as well as some popular real-time scheduling policies. Particular focus is given to the so-called (m, k)-firm scheduling policy, which is the groundwork for the control/networking co-design methodology developed in Chapter 5. Section 1.3 then provides basic considerations and describes current solutions for control-aware computing, i.e. computing architecture designs able to improve the quality of control of the system. One very appealing solution for controlling computing and networking resources subject to variable and/or poorly known operating conditions uses a feedback-scheduling loop, whose basic design and implementation are described in section 1.4. Finally, section 1.5 provides a brief state of the art on fault diagnosis in control systems subject to network-induced effects.

1.2. Preliminary notions on real-time scheduling

When the implementation aspects of control applications are taken into account, one of the fundamental problems is to ensure the timely execution of tasks and transmission of messages related to control loops. Examples include the transmission of sampled data from a sensor to a controller, the execution of the control task on a multitasking operating system (OS), and the sending of the command from the controller to the actuator.

Control applications are typical real-time applications. The execution of a task or the transmission of data is subject to time constraints (often deadline constraints) in order to ensure the reactivity of the system and thus guarantee stability and the desired control performance. Real-time scheduling theory has been developed to study how to effectively schedule the access of concurrent tasks to a shared resource (through scheduling algorithm development). It also aims to guarantee that the designed system meets its time constraints (through schedulability analysis).
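Two classic schedulability analyses for preemptive fixed-priority scheduling on one processor can be sketched as follows:

- The Liu and Layland utilization bound for rate-monotonic priorities [LIU 73] is a sufficient but not necessary test.
- Response-time analysis iterates R_i = C_i + sum over higher-priority tasks j of ceil(R_i / T_j) * C_j to a fixed point.

The sketch assumes independent periodic tasks with deadlines equal to periods and no blocking. The function names are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Task = Tuple[float, float]  # (C = worst-case execution time, T = period); deadline = T


def liu_layland_test(tasks: List[Task]) -> bool:
    """Sufficient (not necessary) utilization test for rate-monotonic scheduling."""
    n = len(tasks)
    utilization = sum(c / t for c, t in tasks)
    return utilization <= n * (2 ** (1 / n) - 1)


def response_times(tasks: List[Task]) -> List[Optional[float]]:
    """Worst-case response times under preemptive fixed priorities.

    tasks must be sorted by decreasing priority (index 0 = highest). None = deadline miss.
    """
    results: List[Optional[float]] = []
    for i, (c_i, t_i) in enumerate(tasks):
        r = c_i
        while True:
            # Interference from higher-priority jobs released within the window [0, r)
            r_next = c_i + sum(math.ceil(r / t_j) * c_j for c_j, t_j in tasks[:i])
            if r_next > t_i:  # deadline (= period) exceeded
                results.append(None)
                break
            if r_next == r:  # fixed point reached
                results.append(r)
                break
            r = r_next
    return results


if __name__ == "__main__":
    ts = [(1, 4), (2, 6), (3, 12)]  # rate-monotonic order (increasing periods)
    print(liu_layland_test(ts), response_times(ts))
```

For this task set, the utilization (about 0.83) exceeds the three-task bound (about 0.78), so the bound is inconclusive. Response-time analysis nonetheless shows that all deadlines are met, with response times 1, 3, and 10.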

This section is not intended to give a comprehensive review of the real-time scheduling theory, but rather provides the necessary basic background to facilitate the understanding of the remaining chapters of this book. Readers interested in more detail may refer to [LIU 00], and also to [LEU 04] for a broader view on scheduling.

The notion of priority is commonly used to order access to shared resources such as a processor in multitask systems or a communication channel in networks. In what follows, unless otherwise necessary, the term task denotes either the execution of a task on a processor or the transmission of a packet/message on a network channel.