9,49 €

Mehr erfahren.

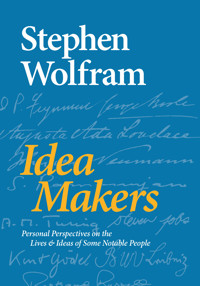

- Publisher: Wolfram Media

- Category: Nonfiction, Reportage, Biographies

- Language: English

This book of thoroughly engaging essays from one of today's most prodigious innovators provides a uniquely personal perspective on the lives and achievements of a selection of intriguing figures from the history of science and technology. Weaving together his immersive interest in people and history with insights gathered from his own experiences, Stephen Wolfram gives an ennobling look at some of the individuals whose ideas and creations have helped shape our world today.

From his recollections about working with Richard Feynman to his insights about how Alan Turing's work has unleashed generations of innovation to the true role of Ada Lovelace in the history of computing, Wolfram takes the reader into the minds and lives of great thinkers and creators of the past few centuries—and shows how great achievements can arise from dramatically different personalities and life trajectories.


Page count: 301

Year of publication: 2016


Idea Makers: Personal Perspectives on the Lives & Ideas of Some Notable People

Copyright © 2016 Stephen Wolfram, LLC

Wolfram Media, Inc. | wolfram-media.com

ISBN 978-1-57955-003-5 (hardback) | ISBN 978-1-57955-005-9 (ebook)

Biography / Science

Library of Congress Cataloging-in-Publication Data

Wolfram, Stephen, author.

Idea makers : personal perspectives on the lives & ideas of some notable people / by Stephen Wolfram.

First edition. | Champaign : Wolfram Media, Inc., [2016]

LCCN 2016025380 (print) | LCCN 2016026486 (ebook) | ISBN 9781579550035 (hardcover : acid-free paper) | ISBN 9781579550059 (ebook) | ISBN 9781579550110 (ePub) | ISBN 9781579550059 (kindle)

LCSH: Scientists—Biography. | Science—History.

LCC Q141 .W678562 2016 (print) | LCC Q141 (ebook) | DDC 509.2/2—dc23

LC record available at https://lccn.loc.gov/2016025380

Sources for photos and archival materials that are not from the author’s collection or in the public domain:

pp. 45, 66, 67: The Carl H. Pforzheimer Collection of Shelley and His Circle, The New York Public Library; pp. 46, 55, 56, 63, 65, 67, 79: Additional Manuscripts Collection, Charles Babbage Papers, British Library; p. 49: National Portrait Gallery; pp. 50, 60: Museum of the History of Science, Oxford; pp. 52, 53, 85, 86: Science Museum/Science & Society Picture Library; p. 54: The Power House Museum, Sydney; p. 68: Lord Lytton, The Bodleian Library; pp. 99–120: Leibniz-Archiv/Leibniz Research Center Hannover, State Library of Lower Saxony; p. 138: Alisa Bokulich; pp. 167–170, 178: Cambridge University Library; pp. 192, 193: Trinity College Library; p. 194: Tata Institute of Fundamental Research

Printed by Friesens, Manitoba, Canada. Acid-free paper. First edition.

Preface

I’ve spent most of my life working hard to build the future with science and technology. But two of my other great interests are history and people. This book is a collection of essays I’ve written that indulge those interests. All of them are in the form of personal perspectives on people—describing from my point of view the stories of their lives and of the ideas they created.

I’ve written different essays for different reasons: sometimes to commemorate a historical anniversary, sometimes because of a current event, and sometimes—unfortunately—because someone just died. The people I’ve written about span three centuries in time—and range from the very famous to the little-known. All of them had interests that intersect in some way or another with my own. But it’s ended up a rather eclectic list—that’s given me the opportunity to explore a wide range of very different lives and ideas.

When I was younger, I really didn’t pay much attention to history. But as the decades have gone by, and I’ve seen so many different things develop, I’ve become progressively more interested in history—and in what it can teach us about the pattern of how things work. And I’ve learned that decoding the actual facts and path of history—like so many other areas—is a fascinating intellectual process.

There’s a stereotype that someone focused on science and technology won’t be interested in people. But that’s not me. I’ve always been interested in people. I’ve been fortunate over the course of my life to get to know a very large and diverse set of them. And as I’ve grown my company over the past three decades I’ve had the pleasure of working with many wonderful individuals. I always like to give help and advice. But I’m also fascinated just to watch the trajectories of people’s lives—and to see how people end up doing the things they do.

It’s been great to personally witness so many life trajectories over the past half century. And in this book I’ve written about a few of them. But I’ve also been interested to learn about the life trajectories of those from the more distant past. Usually I know quite a lot about the end of the story: the legacy of their work and ideas. But I find it fascinating to see how these things came to be—and how the paths of people’s lives led to what they did.

Part of my interest is purely intellectual. But part of it is more practical—and more selfish. What can I learn from historical examples about how things I’m involved in now will work out? How can I use people from the past as models for people I know now? What can I learn for my own life from what these people did in their lives?

To be clear: this book is not a systematic analysis of great thinkers and creators through history. It is an eclectic collection of essays about particular people who for one reason or another were topical for me to write about. I’ve tried to give both a sketch of each person’s life in its historical context and a description of their ideas—and then I’ve tried to relate those ideas to my own ideas, and to the latest science and technology.

In the process of writing these essays I’ve ended up doing a considerable amount of original research. When the essays are about people I’ve personally known, I’ve been able to draw on interactions I had with them, as well as on material I’ve personally archived. For other people, I’ve tried when it’s possible to seek out individuals who knew them—and in all cases I’ve worked hard to find original documents and other primary sources. Many people and institutions have been very forthcoming with their help—and also it’s been immensely helpful that in modern times so many historical documents have been scanned and put on the web.

But with all of this, I’m still constantly struck by how hard it is to do history. So often there’s been some story or analysis that people repeat all the time. But somehow something about it hasn’t quite rung true with me. So I’ve gone digging to try to find out the real story. Occasionally one just can’t tell what it was. But at least for the people I’ve written about in this book, there are usually enough records and documents—or actual people to talk to—that one can eventually figure it out.

My strategy is to keep on digging and getting information until things make sense to me, based on my knowledge of people and situations that are somehow similar to what I’m studying. It’s certainly helped that in my own life I’ve seen all sorts of ideas and other things develop over the course of years—which has given me some intuition about how such things work. And one of the important lessons of this is that however brilliant one may be, every idea is the result of some progression or path—often hard-won. If there seems to be a jump in the story—a missing link—then that’s just because one hasn’t figured it out. And I always try to go on until there aren’t mysteries anymore, and everything that happened makes sense in the context of my own experiences.

So having traced the lives of quite a few notable people, what have I learned? Perhaps the clearest lesson is that serious ideas that people have are always deeply entwined with the trajectories of their lives. That is not to say that people always live the paradigms they create—in fact, often, almost paradoxically, they don’t. But ideas arise out of the context of people’s lives. Indeed, more often than not, it’s a very practical situation that someone finds themselves in that leads them to create some strong, new, abstract idea.

When history is written, all that’s usually said is that so-and-so came up with such-and-such an idea. But there’s always more to it: there’s always a human story behind it. Sometimes that story helps illuminate the abstract idea. But more often, it instead gives us insight about how to turn some human situation or practical issue into something intellectual—and perhaps something that will live on, abstractly, long after the person who created it is gone.

This book is the first time I’ve systematically collected what I’ve written about people. I’ve written more generally about history in a few other places—for example in the hundred pages or so of detailed historical notes at the back of my 2002 book A New Kind of Science. I happened to start my career young, so my early colleagues were often much older than me—making it demographically likely that there may be many obituaries for me to write. But somehow I find it cathartic to reflect on how a particular life added stones—large or small—to the great tower that represents our civilization and its achievements.

I wish I could have personally known all the people I write about in this book. But for those who died long ago it feels like a good second best to read so many documents they wrote—and somehow to get in and understand their lives. My greatest personal passion remains trying to build the future. But I hope that through understanding the past I may be able to do it a little better—and perhaps help build it on a more informed and solid basis. For now, though, I’m just happy to have been able to spend a little time on some remarkable people and their remarkable lives—and I hope that we’ll all be able to learn something from them.

Richard Feynman

April 20, 2005 *

I first met Richard Feynman when I was 18, and he was 60. And over the course of ten years, I think I got to know him fairly well. First when I was in the physics group at Caltech. And then later when we both consulted for a once-thriving Boston company called Thinking Machines Corporation.

I actually don’t think I’ve ever talked about Feynman in public before. And there’s really so much to say, I’m not sure where to start.

But if there’s one moment that summarizes Richard Feynman and my relationship with him, perhaps it’s this. It was probably 1982. I’d been at Feynman’s house, and our conversation had turned to some kind of unpleasant situation that was going on. I was about to leave. And Feynman stopped me and said, “You know, you and I are very lucky. Because whatever else is going on, we’ve always got our physics.”

Feynman loved doing physics. I think what he loved most was the process of it. Of calculating. Of figuring things out. It didn’t seem to matter to him so much if what came out was big and important. Or esoteric and weird. What mattered to him was the process of finding it. And he was often quite competitive about it.

Some scientists (myself probably included) are driven by the ambition to build grand intellectual edifices. I think Feynman—at least in the years I knew him—was much more driven by the pure pleasure of actually doing the science. He seemed to like best to spend his time figuring things out, and calculating. And he was a great calculator. All around perhaps the best human calculator there’s ever been.

Here’s a page from my files: quintessential Feynman. Calculating a Feynman diagram:

It’s kind of interesting to look at. His style was always very much the same. He always just used regular calculus and things. Essentially nineteenth-century mathematics. He never trusted much else. But wherever one could go with that, Feynman could go. Like no one else.

I always found it incredible. He would start with some problem, and fill up pages with calculations. And at the end of it, he would actually get the right answer! But he usually wasn’t satisfied with that. Once he’d gotten the answer, he’d go back and try to figure out why it was obvious. And often he’d come up with one of those classic Feynman straightforward-sounding explanations. And he’d never tell people about all the calculations behind it. Sometimes it was kind of a game for him: having people be flabbergasted by his seemingly instant physical intuition, not knowing that really it was based on some long, hard calculation he’d done.

He always had a fantastic formal intuition about the innards of his calculations. Knowing what kind of result some integral should have, whether some special case should matter, and so on. And he was always trying to sharpen his intuition.

You know, I remember a time—it must have been the summer of 1985—when I’d just discovered a thing called rule 30. That’s probably my own all-time favorite scientific discovery. And that’s what launched a lot of the whole new kind of science that I’ve spent 20 years building (and wrote about in my book A New Kind of Science).

Well, Feynman and I were both visiting Boston, and we’d spent much of an afternoon talking about rule 30. About how it manages to go from that little black square at the top to make all this complicated stuff. And about what that means for physics and so on.

Well, we’d just been crawling around the floor—with help from some other people—trying to use meter rules to measure some feature of a giant printout of it. And Feynman took me aside, rather conspiratorially, and said, “Look, I just want to ask you one thing: how did you know rule 30 would do all this crazy stuff?” “You know me,” I said. “I didn’t. I just had a computer try all the possible rules. And I found it.” “Ah,” he said, “now I feel much better. I was worried you had some way to figure it out.”
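For readers who want to see the "little black square at the top" grow into complicated stuff, here is a minimal Python sketch of rule 30. It assumes the standard numbering of elementary cellular automata, in which bit k of the rule number gives the new cell value for the three-cell neighborhood whose binary value is k. The function names, the wraparound edges, and the text rendering are illustrative choices, not anything taken from the talk.

```python
def eca_step(cells, rule):
    """Apply one step of an elementary cellular automaton (wraparound edges)."""
    n = len(cells)
    new_cells = []
    for i in range(n):
        # cells[i - 1] wraps to the last cell when i == 0
        left, center, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        index = 4 * left + 2 * center + right  # neighborhood as a number 0..7
        new_cells.append((rule >> index) & 1)  # look up that bit of the rule
    return new_cells


def eca_rows(rule, width, steps):
    """Start from a single black cell in the middle and collect every row."""
    row = [0] * width
    row[width // 2] = 1
    rows = [row]
    for _ in range(steps):
        row = eca_step(row, rule)
        rows.append(row)
    return rows


def render(rows):
    """Draw black cells as '#' and white cells as '.'."""
    return "\n".join("".join("#" if c else "." for c in row) for row in rows)


if __name__ == "__main__":
    print(render(eca_rows(30, 31, 15)))
```

"Trying all the possible rules" could then be as simple as looping `for rule in range(256)` and looking at each picture. That assumes the search was over the 256 elementary rules; the talk does not say exactly which set of rules was enumerated.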

Feynman and I talked a bunch more about rule 30. He really wanted to get an intuition for how it worked. He tried bashing it with all his usual tools. Like he tried to work out what the slope of the line between order and chaos is. And he calculated. Using all his usual calculus and so on. He and his son Carl even spent a bunch of time trying to crack rule 30 using a computer.

And one day he calls me and says, “OK, Wolfram, I can’t crack it. I think you’re on to something.” Which was very encouraging.

Feynman and I tried to work together on a bunch of things over the years. On quantum computers before anyone had ever heard of those. On trying to make a chip that would generate perfect physical randomness—or eventually showing that that wasn’t possible. On whether all the computation needed to evaluate Feynman diagrams really was necessary. On whether it was a coincidence or not that there’s an $e^{-Ht}$ in statistical mechanics and an $e^{iHt}$ in quantum mechanics. On what the simplest essential phenomenon of quantum mechanics really is.

I remember often when we were both consulting for Thinking Machines in Boston, Feynman would say, “Let’s hide away and do some physics.” This was a typical scenario. Yes, I think we thought nobody was noticing that we were off at the back of a press conference about a new computer system talking about the nonlinear sigma model. Typically, Feynman would do some calculation. With me continually protesting that we should just go and use a computer. Eventually I’d do that. Then I’d get some results. And he’d get some results. And then we’d have an argument about whose intuition about the results was better.

I should say, by the way, that it wasn’t that Feynman didn’t like computers. He even had gone to some trouble to get an early Commodore PET personal computer, and enjoyed doing things with it. And in 1979, when I started working on the forerunner of what would become Mathematica, he was very interested. We talked a lot about how it should work. He was keen to explain his methodologies for solving problems: for doing integrals, for notation, for organizing his work. I even managed to get him a little interested in the problem of language design. Though I don’t think there’s anything directly from Feynman that has survived in Mathematica. But his favorite integrals we can certainly do.

You know, it was sometimes a bit of a liability having Feynman involved. Like when I was working on SMP—the forerunner of Mathematica—I organized some seminars by people who’d worked on other systems. And Feynman used to come. And one day a chap from a well-known computer science department came to speak. I think he was a little tired, and he ended up giving what was admittedly not a good talk. And it degenerated at some point into essentially telling puns about the name of the system they’d built. Well, Feynman got more and more annoyed. And eventually stood up and gave a whole speech about how “If this is what computer science is about, it’s all nonsense....” I think the chap who gave the talk thought I’d put Feynman up to this. And has hated me for 25 years...

You know, in many ways, Feynman was a loner. Other than for social reasons, he really didn’t like to work with other people. And he was mostly interested in his own work. He didn’t read or listen too much; he wanted the pleasure of doing things himself. He did used to come to physics seminars, though. Although he had rather a habit of using them as problem-solving exercises. And he wasn’t always incredibly sensitive to the speakers. In fact, there was a period of time when I organized the theoretical physics seminars at Caltech. And he often egged me on to compete to find fatal flaws in what the speakers were saying. Which led to some very unfortunate incidents. But also led to some interesting science.

One thing about Feynman is that he went to some trouble to arrange his life so that he wasn’t particularly busy—and so he could just work on what he felt like. Usually he had a good supply of problems. Though sometimes his long-time assistant would say: “You should go and talk to him. Or he’s going to start working on trying to decode Mayan hieroglyphs again.” He always cultivated an air of irresponsibility. Though I would say more towards institutions than people.

And I was certainly very grateful that he spent considerable time trying to give me advice—even if I was not always great at taking it. One of the things he often said was that “peace of mind is the most important prerequisite for creative work.” And he thought one should do everything one could to achieve that. And he thought that meant, among other things, that one should always stay away from anything worldly, like management.

Feynman himself, of course, spent his life in academia—though I think he found most academics rather dull. And I don’t think he liked their standard view of the outside world very much. And he himself often preferred more unusual folk.

Quite often he’d introduce me to the odd characters who’d visit him. I remember once we ended up having dinner with the rather charismatic founder of a semi-cult called EST. It was a curious dinner. And afterwards, Feynman and I talked for hours about leadership. About leaders like Robert Oppenheimer. And Brigham Young. He was fascinated—and mystified—by what it is that lets great leaders lead people to do incredible things. He wanted to get an intuition for that.

You know, it’s funny. For all Feynman’s independence, he was surprisingly diligent. I remember once he was preparing some fairly minor conference talk. He was quite concerned about it. I said, “You’re a great speaker; what are you worrying about?” He said, “Yes, everyone thinks I’m a great speaker. So that means they expect more from me.” And in fact, sometimes it was those throwaway conference talks that have ended up being some of Feynman’s most popular pieces. On nanotechnology. Or foundations of quantum theory. Or other things.

You know, Feynman spent most of his life working on prominent current problems in physics. But he was a confident problem solver. And occasionally he would venture outside, bringing his “one can solve any problem just by thinking about it” attitude with him. It did have some limits, though. I think he never really believed it applied to human affairs, for example. Like when we were both consulting for Thinking Machines in Boston, I would always be jumping up and down about how if the management of the company didn’t do this or that, they would fail. He would just say, “Why don’t you let these people run their company; we can’t figure out this kind of stuff.” Sadly, the company did in the end fail. But that’s another story.

* A talk given on the occasion of the publication of Feynman’s collected letters.

Kurt Gödel

May 1, 2006

When Kurt Gödel was born—one hundred years ago today—the field of mathematics seemed almost complete. Two millennia of development had just been codified into a few axioms, from which it seemed one should be able almost mechanically to prove or disprove anything in mathematics—and, perhaps with some extension, in physics too.

Twenty-five years later things were proceeding apace, when at the end of a small academic conference, a quiet but ambitious fresh PhD involved with the Vienna Circle ventured that he had proved a theorem that this whole program must ultimately fail.

In the seventy-five years since then, what became known as Gödel’s theorem has been ascribed almost mystical significance, sowed the seeds for the computer revolution, and meanwhile been practically ignored by working mathematicians—and viewed as irrelevant for broader science.

The ideas behind Gödel’s theorem have, however, yet to run their course. And in fact I believe that today we are poised for a dramatic shift in science and technology for which its principles will be remarkably central.

Gödel’s original work was quite abstruse. He took the axioms of logic and arithmetic, and asked a seemingly paradoxical question: can one prove the statement “this statement is unprovable”?

One might not think that mathematical axioms alone would have anything to say about this. But Gödel demonstrated that in fact his statement could be encoded purely as a statement about numbers.

Yet the statement says that it is unprovable. So here, then, is a statement within mathematics that is unprovable by mathematics: an “undecidable statement”. And its existence immediately shows that there is a certain incompleteness to mathematics: there are mathematical statements that mathematical methods cannot reach.

It could have been that these ideas would rest here. But from within the technicalities of Gödel’s proof there emerged something of incredible practical importance. For Gödel’s seemingly bizarre technique of encoding statements in terms of numbers was a critical step towards the idea of universal computation—which implied the possibility of software, and launched the whole computer revolution.
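The idea of encoding statements in terms of numbers can be illustrated with a small Python sketch. It uses the textbook prime-power scheme: the k-th symbol's code becomes the exponent of the k-th prime. The symbol table and function names below are hypothetical, chosen only for illustration, and the scheme is a simplified stand-in for the encoding in Gödel's actual proof.

```python
def primes():
    """Yield 2, 3, 5, 7, ... by trial division against the primes found so far."""
    found = []
    n = 2
    while True:
        if all(n % p for p in found):
            found.append(n)
            yield n
        n += 1


# Hypothetical symbol table: every code must be >= 1 so decoding is unambiguous.
SYMBOL_CODES = {"0": 1, "s": 2, "=": 3, "+": 4, "(": 5, ")": 6}


def godel_encode(codes):
    """Pack a sequence of positive integers into one number: 2^c1 * 3^c2 * 5^c3 ..."""
    number = 1
    for p, c in zip(primes(), codes):
        number *= p ** c
    return number


def godel_decode(number):
    """Recover the sequence by reading off the exponent of each successive prime."""
    codes = []
    for p in primes():
        if number == 1:
            break
        exponent = 0
        while number % p == 0:
            number //= p
            exponent += 1
        codes.append(exponent)
    return codes


def encode_statement(text):
    """Encode a string of symbols from SYMBOL_CODES as a single integer."""
    return godel_encode([SYMBOL_CODES[ch] for ch in text])
```

With this table the statement `0=0` becomes 2^1 · 3^3 · 5^1 = 270, and `godel_decode(270)` gives back `[1, 3, 1]`. Once statements are numbers, claims about statements can be phrased as claims about numbers.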

Thinking in terms of computers gives us a modern way to understand what Gödel did: although he himself in effect only wanted to talk about one computation, he proved that logic and arithmetic are actually sufficient to build a universal computer, which can be programmed to carry out any possible computation.

Not all areas of mathematics work this way. Elementary geometry and elementary algebra, for example, have no universal computation, and no analog of Gödel’s theorem—and we even now have practical software that can prove any statement about them.

But universal computation—when it is present—has many deep consequences.

The exact sciences have always been dominated by what I call computational reducibility: the idea of finding quick ways to compute what systems will do. Newton showed how to find out where an (idealized) Earth will be in a million years—we just have to evaluate a formula—we do not have to trace a million orbits.

But if we study a system that is capable of universal computation we can no longer expect to “outcompute” it like this; instead, to find out what it will do may take us irreducible computational work.
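The contrast between the two cases can be made concrete with a toy Python sketch. It assumes a highly idealized orbit with a constant period (365.25 days here, an illustrative value) and compares a one-line formula with a day-by-day trace; the function names are hypothetical. For this reducible system both routes give the same answer, but only the formula skips the work.

```python
from fractions import Fraction


def phase_by_formula(t_days, period_days):
    """Fraction of the current orbit completed at time t, from a closed form."""
    return (Fraction(t_days) / period_days) % 1


def phase_by_stepping(t_days, period_days):
    """The same quantity, found by advancing the orbit one day at a time."""
    phase = Fraction(0)
    per_day = Fraction(1) / period_days
    for _ in range(t_days):
        phase = (phase + per_day) % 1
    return phase


PERIOD = Fraction(1461, 4)  # 365.25 days, an assumed idealized year

if __name__ == "__main__":
    print(phase_by_formula(1000, PERIOD))   # one division
    print(phase_by_stepping(1000, PERIOD))  # a thousand additions
```

For a system capable of universal computation, no counterpart of `phase_by_formula` can be expected in general, and something like the explicit stepping loop may be the only route.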

And this is why it can be so difficult to predict what computers will do—or to prove that software has no bugs. It is also at the heart of why mathematics can be difficult: it can take an irreducible amount of computational work to establish a given mathematical result.

And it is what leads to undecidability—for if we want to know, say, whether any number of any size has a certain property, computational irreducibility may tell us that without checking infinitely many cases we may not be able to decide for sure.

Working mathematicians, though, have never worried much about undecidability. For certainly Gödel’s original statement is remote, being astronomically long when translated into mathematical form. And the few alternatives constructed over the years have seemed almost as irrelevant in practice.

But my own work with computer experiments suggests that in fact undecidability is much closer at hand. And indeed I suspect that quite a few of the famous unsolved problems in mathematics today will turn out to be undecidable within the usual axioms.

The reason undecidability has not been more obvious is just that mathematicians—despite their reputation for abstract generality—like most scientists, tend to concentrate on questions that their methods succeed with.

Back in 1931, Gödel and his contemporaries were not even sure whether Gödel’s theorem was something general, or just a quirk of their formalism for logic and arithmetic. But a few years later, when Turing machines and other models for computers showed the same phenomenon, it began to seem more general.

Still, Gödel wondered whether there would be an analog of his theorem for human minds, or for physics. We still do not know the complete answer, though I certainly expect that both minds and physics are in principle just like universal computers—with Gödel-like theorems.

One of the great surprises of my own work has been just how easy it is to find universal computation. If one systematically explores the abstract universe of possible computational systems one does not have to go far. One needs nothing like the billion transistors of a modern electronic computer, or even the elaborate axioms of logic and arithmetic. Simple rules that can be stated in a short sentence—or summarized in a three-digit number—are enough.

And it is almost inevitable that such rules are common in nature—bringing with them undecidability. Is a solar system ultimately stable? Can a biochemical process ever go out of control? Can a set of laws have a devastating consequence? We can now expect general versions of such questions to be undecidable.

This might have pleased Gödel—who once said he had found a bug in the US Constitution, who gave his friend Einstein a paradoxical model of the universe for his birthday—and who told a physicist I knew that for theoretical reasons he “did not believe in natural science”.

Even in the field of mathematics, Gödel—like his results—was always treated as somewhat alien to the mainstream. He continued for decades to supply central ideas to mathematical logic, even as “the greatest logician since Aristotle” (as John von Neumann called him) became increasingly isolated, worked to formalize theology using logic, became convinced that discoveries of Leibniz from the 1600s had been suppressed, and in 1978, with his wife’s health failing, died of starvation, suspicious of doctors and afraid of being poisoned.

He left us the legacy of undecidability, which we now realize affects not just esoteric issues about mathematics, but also all sorts of questions in science, engineering, medicine and more.

One might think of undecidability as a limitation to progress, but in many ways it is instead a sign of richness. For with it comes computational irreducibility, and the possibility for systems to build up behavior beyond what can be summarized by simple formulas. Indeed, my own work suggests that much of the complexity we see in nature has precisely this origin. And perhaps it is also the essence of how from deterministic underlying laws we can build up apparent free will.

In science and technology we have normally crafted our theories and devices by careful design. But thinking in the abstract computational terms pioneered by Gödel’s methods we can imagine an alternative. For if we represent everything uniformly in terms of rules or programs, we can in principle just explicitly enumerate all possibilities.

In the past, though, nothing like this ever seemed even faintly sensible. For it was implicitly assumed that to create a program with interesting behavior would require explicit human design—or at least the efforts of something like natural selection. But when I started actually doing experiments and systematically running the simplest programs, what I found instead is that the computational universe is teeming with diverse and complex behavior.

Already there is evidence that many of the remarkable forms we see in biology just come from sampling this universe. And perhaps by searching the computational universe we may find—even soon—the ultimate underlying laws for our own physical universe. (To discover all their consequences, though, will still require irreducible computational work.)

Exploring the computational universe puts mathematics too into a new context. For we can also now see a vast collection of alternatives to the mathematics that we have ultimately inherited from the arithmetic and geometry of ancient Babylon. And for example, the axioms of basic logic, far from being something special, now just appear as roughly the 50,000th possibility. And mathematics, long a purely theoretical science, must adopt experimental methods.

The exploration of the computational universe seems destined to become a core intellectual framework in the future of science. And in technology the computational universe provides a vast new resource that can be searched and mined for systems that serve our increasingly complex purposes. It is undecidability that guarantees an endless frontier of surprising and useful material to find.

And so it is that from Gödel’s abstruse theorem about mathematics has emerged what I believe will be the defining theme of science and technology in the twenty-first century.

Alan Turing

June 23, 2012

I never met Alan Turing; he died five years before I was born. But somehow I feel I know him well—not least because many of my own intellectual interests have had an almost eerie parallel with his.

And by a strange coincidence, Mathematica’s “birthday” (June 23, 1988) is aligned with Turing’s—so that today is not only the centenary of Turing’s birth, but is also Mathematica’s 24th birthday.

I think I first heard about Alan Turing when I was about eleven years old, right around the time I saw my first computer. Through a friend of my parents, I had gotten to know a rather eccentric old classics professor, who, knowing my interest in science, mentioned to me this “bright young chap named Turing” whom he had known during the Second World War.

One of the classics professor’s eccentricities was that whenever the word “ultra” came up in a Latin text, he would repeat it over and over again, and make comments about remembering it. At the time, I didn’t think much of it—though I did remember it. Only years later did I realize that “Ultra” was the codename for the British cryptanalysis effort at Bletchley Park during the war. In a very British way, the classics professor wanted to tell me something about it, without breaking any secrets. And presumably it was at Bletchley Park that he had met Alan Turing.

A few years later, I heard scattered mentions of Alan Turing in various British academic circles. I heard that he had done mysterious but important work in breaking German codes during the war. And I heard it claimed that after the war, he had been killed by British Intelligence. At the time, at least some of the British wartime cryptography effort was still secret, including Turing’s role in it. I wondered why. So I asked around, and started hearing that perhaps Turing had invented codes that were still being used. (In reality, the continued secrecy seems to have been intended to prevent it being known that certain codes had been broken—so other countries would continue to use them.)

I’m not sure where I next encountered Alan Turing. Probably it was when I decided to learn all I could about computer science—and saw all sorts of mentions of “Turing machines”. But I have a distinct memory from around 1979 of going to the library and finding a little book about Alan Turing written by his mother, Sara Turing.

Gradually I built up quite a picture of Alan Turing and his work. And over the 30+ years that have followed, I have kept on running into Alan Turing, often in unexpected places.

In the early 1980s, for example, I had become very interested in theories of biological growth—only to find (from Sara Turing’s book) that Alan Turing had done all sorts of largely unpublished work on that.

And in 1989, for example, when we were promoting an early version of Mathematica, I decided to make a poster of the Riemann zeta function, only to discover that Alan Turing had at one time held the record for computing zeros of the zeta function. (Earlier he had also designed a gear-based machine for doing this.)

Recently I even found out that Turing had written about the “reform of mathematical notation and phraseology”—a topic of great interest to me in connection with both Mathematica and Wolfram|Alpha.

And at some point I learned that a high school math teacher of mine (Norman Routledge) had been a friend of Turing’s late in his life. But even though my teacher knew my interest in computers, he never mentioned Turing or his work to me. And indeed, 35 years ago, Alan Turing and his work were little known, and it is only fairly recently that Turing has become as famous as he is today.

Turing’s greatest achievement was undoubtedly his construction in 1936 of a universal Turing machine—a theoretical device intended to represent the mechanization of mathematical processes. And in some sense, Mathematica is precisely a concrete embodiment of the kind of mechanization that Turing was trying to represent.

In 1936, however, Turing’s immediate purpose was purely theoretical. Indeed it was to show not what could be mechanized in mathematics, but what could not. In 1931, Gödel’s theorem had shown that there were limits to what could be proved in mathematics, and Turing wanted to understand the boundaries of what could ever be done by any systematic procedure in mathematics.

Turing was a young mathematician in Cambridge, England, and his work was couched in terms of mathematical problems of his time. But one of his steps was the theoretical construction of a universal Turing machine capable of being “programmed” to emulate any other Turing machine. In effect, Turing had invented the idea of universal computation—which was later to become the foundation on which all of modern computer technology is built.
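
To make the idea of a machine being “programmed” a little more concrete, here is a minimal sketch in Python. It is not Turing’s 1936 construction, and it is not itself a universal Turing machine; it is just one fixed procedure that takes the rule table of a machine as ordinary data and carries it out, which is the essence of what a universal machine does. The function name, the rule encoding and the two example machines are all assumptions made up for this illustration.

```python
def run_turing_machine(rules, tape, state, blank="_", max_steps=1000):
    """Run a one-tape Turing machine given entirely as data.

    rules maps (state, symbol) -> (symbol_to_write, head_move, next_state),
    with head_move equal to -1 (left) or +1 (right). The machine halts when
    no rule matches the current (state, symbol) pair, or after max_steps.
    This encoding is an illustrative assumption, not a standard format.
    """
    cells = dict(enumerate(tape))   # sparse tape: position -> symbol
    head = 0
    steps = 0
    while steps < max_steps:
        key = (state, cells.get(head, blank))
        if key not in rules:        # no applicable rule: halt
            break
        write, move, state = rules[key]
        cells[head] = write
        head += move
        steps += 1
    if not cells:
        return [], state, steps
    lo, hi = min(cells), max(cells)
    return [cells.get(i, blank) for i in range(lo, hi + 1)], state, steps


# Two hypothetical machines, each nothing more than a table of rules.
FLIP_BITS = {
    ("scan", 0): (1, +1, "scan"),   # turn 0 into 1 and move right
    ("scan", 1): (0, +1, "scan"),   # turn 1 into 0 and move right
}
APPEND_ONE = {
    ("scan", 1): (1, +1, "scan"),    # skip over the existing 1s
    ("scan", "_"): (1, +1, "done"),  # write one more 1, then stop
}

print(run_turing_machine(FLIP_BITS, [1, 0, 1, 1], "scan"))
print(run_turing_machine(APPEND_ONE, [1, 1, 1], "scan"))
```

The same `run_turing_machine` code runs both machines; only the table passed in changes. A true universal Turing machine goes one step further, with the emulating procedure itself being a Turing machine and the rule table written out on its tape.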

At the time, though, Turing’s work did not make much of a splash, probably largely because the emphasis of Cambridge mathematics was elsewhere. Just before Turing published his paper, he learned about a similar result by Alonzo Church from Princeton, formulated not in terms of theoretical machines, but in terms of the mathematics-like lambda calculus. And as a result Turing went to Princeton for a year to study with Church—and while he was there, wrote the most abstruse paper of his life.

The next few years for Turing were dominated by his wartime cryptanalysis work. I learned a few years ago that during the war Turing visited Claude Shannon at Bell Labs in connection with speech encipherment. Turing had been working on a kind of statistical approach to cryptanalysis—and I am extremely curious to know whether Turing told Shannon about this, and potentially launched the idea of information theory, which itself was first formulated for secret cryptanalysis purposes.

After the war, Turing got involved with the construction of the first actual computers in England. To a large extent, these computers had emerged from engineering, not from a fundamental understanding of Turing’s work on universal computation.

There was, however, a definite, if circuitous, connection. In 1943 Warren McCulloch and Walter Pitts in Chicago wrote a theoretical paper about neural networks that used the idea of universal Turing machines to discuss general computation in the brain. John von Neumann read this paper, and used it in his recommendations about how practical computers should be built and programmed. (John von Neumann had known about Turing’s paper in 1936, but at the time did not recognize its significance, instead describing Turing in a recommendation letter as having done interesting work on the central limit theorem.)